Python 3.8.10, Pandas 1.4.4, Numpy 1.23.2

I'm receiving a data streaming from a machine every 10 seconds (in the database times are precisely stored every 10 seconds: no 9.5 nor 11). The machine goes sometimes in standby so the streaming stops. Occasionally it sends random data when in standby.

To eliminate the spurious data I use a parameter SECONDS: when set to 10, each line is considered valid if there was a stored line 10 seconds before.

import pandas as pd

df = pd.DataFrame(

{"DT": [ # two occasional readings

"2022-09-21 00:38:40", "2022-09-21 01:59:30",

# stable data flow

"2022-09-21 04:31:40", "2022-09-21 04:31:50",

"2022-09-21 04:32:00", "2022-09-21 04:32:10",

"2022-09-21 04:32:20", "2022-09-21 04:32:30",

"2022-09-21 04:32:40", "2022-09-21 04:32:50",

# missed one reading here

"2022-09-21 04:33:10", "2022-09-21 04:33:20",

"2022-09-21 04:33:30", "2022-09-21 04:33:40",

"2022-09-21 04:33:50", "2022-09-21 04:34:00",

"2022-09-21 04:34:10", "2022-09-21 04:34:20",

"2022-09-21 04:34:30"

],

"notes": [

"occasional mistake",

"occasional mistake",

"first stable reading",

"", "", "", "", "", "", "",

"one reading missing",

"", "", "", "", "", "", "", ""

] },

)

df.DT = df.DT.astype('datetime64[ns]')

SECONDS = 10 # <====== change here the accepted interval

df["deltaDT"] = (df["DT"] - df["DT"].shift(SECONDS // 10)).dt.total_seconds()

df["ok"] = np.where(df["deltaDT"]==SECONDS, "ok", "-")

print(df)

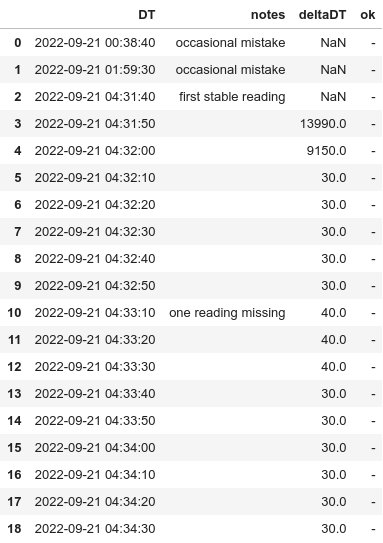

This is for me the most intuitive solution, and for 10 seconds it returns the correct answer:

(Note: The reading at 2022-09-21 04.33.10 is lost, not an issue here)

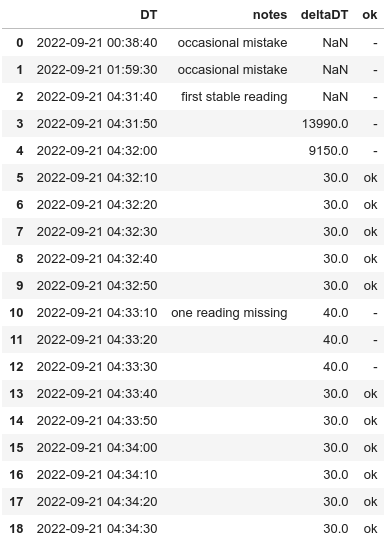

Problem arise with only some SECONDS values: 10, 20, 40, 50 are OK. Choosing 30 and 60, the error appears.

This is SECONDS = 30:

It is obviously a problem related to binary conversion. Quite difficult to spot because if I am working with floats that really represent only integer values or NaN, there shouldn't be an infinitesimal epsilon involved.

Changing the filter condition to

df["ok"] = np.where(df["deltaDT"]<=SECONDS EPSILON, "ok", "-")

it return the wrong result for EPSILON=1E-15, and the correct one for EPSILON=1E-14.

My question now (sorry I was longer than the Harry Potter saga):

How to convert

df["deltaDT"] = (df["DT"] - df["DT"].shift(SECONDS // 10)).dt.total_seconds() to integer?

Adding .astype("Int64") generates the error "TypeError: cannot safely cast non-equivalent float64 to int64"

CodePudding user response:

to convert your column that contains NaN to integer, it need to be nullable integer:

df["deltaDT"] = df["deltaDT"].astype(pd.Int64Dtype())

CodePudding user response:

The problem is wrongly addressed. It has nothing to do with NaN, but with some approximation when INTEGER seconds are not considered really integer:

df = pd.Series(["2022-09-21 04:32:00", "2022-09-21 04:32:30"]).astype('datetime64[ns]')

deltaT = (df-df.shift()).dt.total_seconds()[1]

print(deltaT)

# 30.000000000000004

That means the time binary representation cannot accomodate all int seconds, but in some case they are approximated.

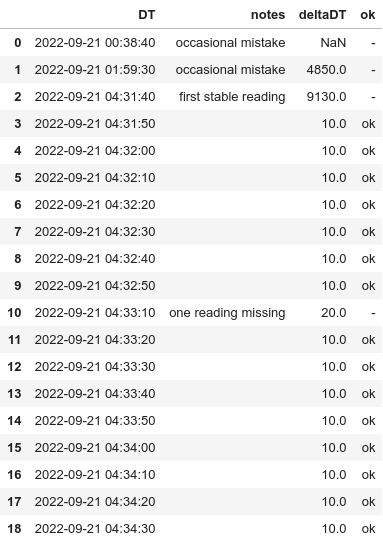

Rounding the column with np.round() will trunc the epsilon away:

SECONDS = 30 # <====== change here the accepted interval

df["deltaDT"] = (df["DT"] - df["DT"].shift(SECONDS // 10)).dt.total_seconds()

df["deltaDT"] = np.round(df["deltaDT"], 0)

df["ok"] = np.where(df["deltaDT"]==SECONDS, "ok", "-")

returns now the correct

Lesson learned: Don't assume anything when working with "obvious" time intervals.