I have a list of items that I scraped from GitHub, stored in df_actionname['ActionName']. Each ['ActionName'] value can be converted into a ['Weblink'] value to create a website link. I am trying to loop through each weblink and scrape data from it.

My code:

#Code to create input data

import pandas as pd

import requests

actionnameListFinal = ['TruffleHog OSS','Metrics embed','Super-Linter',]

# Create dataframes

df_actionname = pd.DataFrame(actionnameListFinal, columns = ['ActionName'])

#Create new column for parsed action names

df_actionname['Parsed'] = df_actionname['ActionName'].str.replace(r'[^A-Za-z0-9]+', '-', regex=True)

df_actionname['Weblink'] = 'https://github.com/marketplace/actions/' + df_actionname['Parsed']

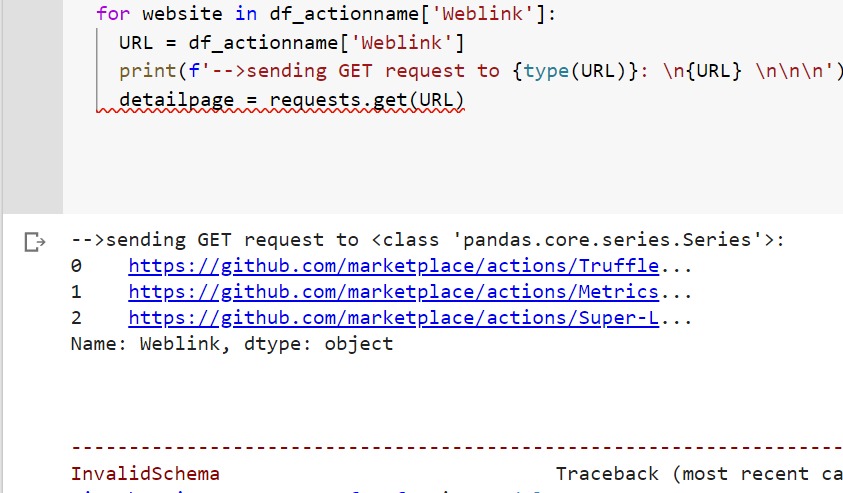

for website in df_actionname['Weblink']:

    URL = df_actionname['Weblink']

    detailpage = requests.get(URL)

My code fails at "detailpage = requests.get(URL)". The error message I get is:

in get_adapter raise InvalidSchema(f"No connection adapters were found for {url!r}") requests.exceptions.InvalidSchema: No connection adapters were found for '0
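
A likely reading of this error is that URL holds the whole ['Weblink'] column rather than one link. requests then turns the column into its text form, which starts with the index label 0 instead of https://, so no adapter matches. Below is a minimal sketch of a loop that passes one URL string per request instead. The function name fetch_pages and the get parameter are hypothetical, introduced here only so the loop can be tried without network access; they are not part of the original code.

```python
def fetch_pages(weblinks, get):
    """Call get(url) once per URL and return a dict of {url: response}.

    weblinks: any iterable of URL strings (a pandas column also works).
    get: a callable such as requests.get (hypothetical parameter, so a
         stand-in can be passed when testing offline).
    """
    pages = {}
    for website in weblinks:
        # Use the loop variable (a single URL string), not the whole column.
        pages[website] = get(website)
    return pages
```

With the data from the question, this could be called as fetch_pages(df_actionname['Weblink'], requests.get), assuming requests is imported.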