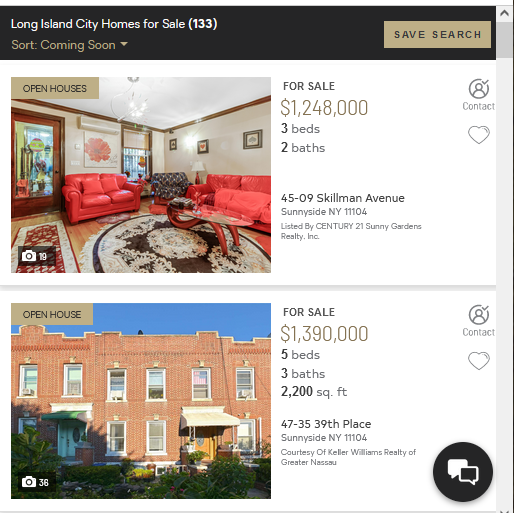

I'm looking at this URL.

Here's the sample code that I tried to hack together.

    from selenium import webdriver
    from bs4 import BeautifulSoup
    import pandas as pd
    from time import sleep

    url='https://www.century21.com/real-estate/long-island-city-ny/LCNYLONGISLANDCITY/'

    driver = webdriver.Chrome('C:\\Utility\\chromedriver.exe')
    driver.get(url)
    sleep(3)

    content = driver.page_source
    soup = BeautifulSoup(content, features='html.parser')

    for element in soup.findAll('div', attrs={'class': 'infinite-item property-card clearfix property-card-C2183089596 initialized visited'}):
        #print(element)
        address = element.find('div', attrs={'class': 'property-card-primary-info'})
        print(address)
        price = element.find('a', attrs={'class': 'listing-price'})
        print(price)

When I run this, I get no addresses and no prices. Not sure why.

Answer:

Web scraping is more of an art than a science. It helps to pull up the page source in Chrome, or the browser of your choice, so you can study the DOM hierarchy and work out how to drill down to the elements you need to scrape. Some websites are built very cleanly and this isn't much work; others are cobbled-together messes that are a nightmare to dig data out of.
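If reading the raw source by eye gets tedious, one way to survey the DOM is to count which class names appear on the `div` elements; classes that repeat once per listing are usually the ones worth selecting on. This is only a rough sketch: `count_div_classes` is a hypothetical helper, and `sample_html` is made-up markup modeled on the class names in the question, not the real page.

```python
from collections import Counter

from bs4 import BeautifulSoup


def count_div_classes(html):
    """Count how often each individual class name appears on <div> tags."""
    soup = BeautifulSoup(html, 'html.parser')
    counts = Counter()
    # class_=True keeps only divs that actually have a class attribute
    for div in soup.find_all('div', class_=True):
        # bs4 exposes the class attribute as a list of separate class names
        counts.update(div['class'])
    return counts


# Made-up markup for illustration only (an assumption, not the live page)
sample_html = """
<div class="infinite-item property-card clearfix property-card-C1 initialized visited">
  <div class="property-card-primary-info"><a class="listing-price">$1</a></div>
</div>
<div class="infinite-item property-card clearfix property-card-C2 initialized">
  <div class="property-card-primary-info"><a class="listing-price">$2</a></div>
</div>
"""

class_counts = count_div_classes(sample_html)
for name, n in class_counts.most_common():
    print(n, name)
```

On a real page you would pass `page.content` or `driver.page_source` instead of `sample_html`. Names that show up only once (such as a per-listing ID) are poor selectors; names that show up on every card are good ones.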

This one, thankfully, is very clean.

This isn't perfect, but I think it will get you in the ballpark:

    import requests
    from bs4 import BeautifulSoup

    url = 'https://www.century21.com/real-estate/long-island-city-ny/LCNYLONGISLANDCITY/'

    page = requests.get(url)
    soup = BeautifulSoup(page.content, features='html.parser')

    # Select on the one class every listing card shares
    for element in soup.find_all('div', attrs={'class': 'property-card'}):
        info = element.find('div', attrs={'class': 'property-card-primary-info'})
        if info is None:
            continue  # skip cards that lack the primary-info block
        address = info.find('div', attrs={'class': 'property-address-info'})
        if address is not None:
            # stripped_strings skips the whitespace-only text between child tags
            for address_item in address.stripped_strings:
                print(address_item)
        price = info.find('a', attrs={'class': 'listing-price'})
        if price is not None:
            print(price.get_text().strip())
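As for why the loop in the question prints nothing, the most likely cause is the class filter. When Beautiful Soup is given a class string containing spaces, it compares it against the element's whole `class` attribute, so a string that includes one listing's ID (`property-card-C2183089596`) and state classes such as `visited` will match at most that one card in exactly that state. A small sketch of the difference, using made-up HTML (the IDs `C111` and `C222` are placeholders, not real listings):

```python
from bs4 import BeautifulSoup

# Made-up markup for illustration only (an assumption, not the live page)
html = """
<div class="infinite-item property-card clearfix property-card-C111 initialized">first</div>
<div class="infinite-item property-card clearfix property-card-C222 initialized visited">second</div>
"""

soup = BeautifulSoup(html, 'html.parser')

# The full class string from the question: must equal the entire class attribute
exact_matches = soup.find_all('div', attrs={
    'class': 'infinite-item property-card clearfix '
             'property-card-C2183089596 initialized visited'})

# A single class name: matches any div whose class list contains it
shared_matches = soup.find_all('div', attrs={'class': 'property-card'})

print(len(exact_matches), len(shared_matches))
```

Neither sample card carries the ID from the question, so the first search comes back empty, while the single-class search finds both cards. I haven't checked this against the live page, so treat it as the probable explanation rather than a confirmed one.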