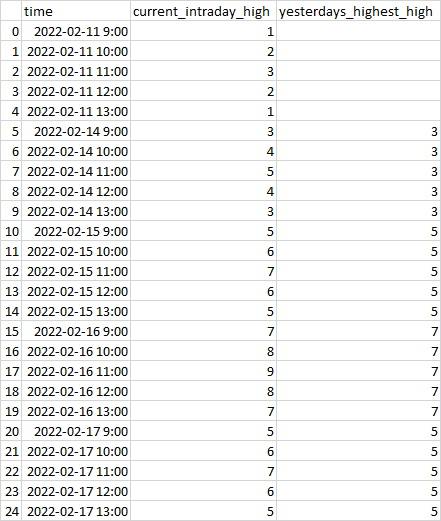

...how can I get this added column:

As you can see, when the current_intraday_high price becomes greater than its yesterdays_highest_high price, the current_intraday_high becomes the new highest high for the current day. Even if the price drops for the rest of the day, that highest high is still "remembered" for the day, so a rolling(x).max() could work here; however, x is dynamic as you progress through the day.
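A rolling max whose window grows from the first bar of the day up to the current bar is the same thing as a cumulative max that restarts each day. A minimal sketch of that idea, assuming a hypothetical toy frame that reuses the question's column names (the running_high column is introduced only for illustration):

```python
import pandas as pd

# Hypothetical toy data: two days with a different number of hourly bars.
df = pd.DataFrame({
    "time": pd.to_datetime([
        "2022-02-14 09:00", "2022-02-14 10:00", "2022-02-14 11:00",
        "2022-02-15 09:00", "2022-02-15 10:00",
    ]),
    "current_intraday_high": [3, 5, 4, 6, 2],
})

# dt.normalize() drops the time of day, so every bar of a session shares one key.
day = df["time"].dt.normalize()

# cummax() acts like a rolling max whose window grows from the day's first bar
# to the current bar; the groupby restarts it on each new date.
df["running_high"] = df.groupby(day)["current_intraday_high"].cummax()
print(df)
```

Because the window is "everything since the open", no explicit x is needed.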

If you somehow knew the current bar's number since the beginning of the current day (at 9:00 x would equal 0, at 10:00 x would equal 1, at 11:00 x would equal 2, and so on, resetting when the date changes), that could work with rolling(x).max(), but I'm not sure what the solution is here.
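The per-day bar number described here is what GroupBy.cumcount() returns: it numbers rows within each group from 0 and restarts on every new group. The sketch below (hypothetical toy data; bar_no, rolling_x_high and cummax_high are illustrative names) computes that x and then checks, with a naive row-by-row loop, that a max over the last x + 1 bars of the same day equals a per-day cummax():

```python
import pandas as pd

df = pd.DataFrame({
    "time": pd.to_datetime([
        "2022-02-14 09:00", "2022-02-14 10:00", "2022-02-14 11:00",
        "2022-02-15 09:00", "2022-02-15 10:00",
    ]),
    "current_intraday_high": [3, 5, 4, 6, 2],
})
day = df["time"].dt.normalize()

# cumcount() gives the bar number since the start of the day: 0, 1, 2, ...
# and resets to 0 when the date changes.
df["bar_no"] = df.groupby(day).cumcount()

# Naive "rolling(x + 1).max()" per row: i - x is the row where the current day
# starts, so the slice covers every bar of today up to and including row i.
df["rolling_x_high"] = [
    df["current_intraday_high"].iloc[i - x : i + 1].max()
    for i, x in enumerate(df["bar_no"])
]

# The vectorised equivalent: a cumulative max restarted per day.
df["cummax_high"] = df.groupby(day)["current_intraday_high"].cummax()
print(df)
```

Note the window is x + 1 bars, not x, since bar 0 already holds one row. The loop is only there to show the equivalence; cummax() avoids it entirely.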

Another thing to notice is that the desired added column only pertains to the current date/day, so it will only ever hold:

- the current date's rolling highest current_intraday_high, or
- the value in yesterdays_highest_high,

whichever is bigger.
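The "whichever is bigger" rule is a row-wise max of two columns. One detail matters for the first day in the data, where yesterdays_highest_high is NaN: DataFrame.max(axis=1) skips NaN by default, while skipna=False propagates it. A small sketch on hypothetical rows (running_high stands in for today's running high):

```python
import numpy as np
import pandas as pd

# Hypothetical rows: a first day with no prior high (NaN), then a day with one.
df = pd.DataFrame({
    "running_high": [2.0, 3.0, 4.0, 5.0],
    "yesterdays_highest_high": [np.nan, np.nan, 5.0, 5.0],
})
cols = ["running_high", "yesterdays_highest_high"]

# skipna=False keeps NaN wherever yesterday's high is unknown.
strict = df[cols].max(axis=1, skipna=False)

# The default (skipna=True) falls back to today's running high instead.
lenient = df[cols].max(axis=1)
print(pd.DataFrame({"strict": strict, "lenient": lenient}))
```

Which variant is wanted depends on whether the first day should show NaN (as in the question's data) or its own running high.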

If a new current_intraday_high is reached today, that becomes the rolling max to "beat" (so to speak) for the rest of that day. When a new day starts, it starts over again. I have to assume that each day will have a different number of rows, not just 5 per day like I have here: some days may have 50 rows, some 40, completely random.
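Grouping by the calendar date handles days of any length, since each group is simply however many rows share that date. A sketch under that assumption, using hypothetical sessions of 3, 5 and 2 bars and a hypothetical session() helper to build the timestamps:

```python
import numpy as np
import pandas as pd


def session(date, n_bars):
    # Hypothetical helper: n_bars hourly timestamps starting at 09:00 on date.
    start = pd.Timestamp(f"{date} 09:00")
    return [start + pd.Timedelta(hours=h) for h in range(n_bars)]


# Hypothetical sessions of 3, 5 and 2 bars: the per-day logic never assumes
# a fixed number of rows per day.
df = pd.DataFrame({
    "time": session("2022-02-14", 3) + session("2022-02-15", 5) + session("2022-02-16", 2),
    "current_intraday_high": [4, 6, 5, 3, 4, 8, 7, 2, 9, 1],
    "yesterdays_highest_high": [np.nan] * 3 + [5] * 5 + [8] * 2,
})

day = df["time"].dt.normalize()
running_high = df.groupby(day)["current_intraday_high"].cummax()

# Row-wise max of today's running high and yesterday's high; skipna=False
# leaves NaN on the first day, where no previous high is known.
df["current_highest_high"] = pd.concat(
    [running_high, df["yesterdays_highest_high"]], axis=1
).max(axis=1, skipna=False)
print(df)
```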

Here is reproducible code with the dataset as shown:

```python
import pandas as pd
import numpy as np

###################################################
# CREATE MOCK INTRADAY DATAFRAME
###################################################

intraday_date_time = [
    "2022-02-11 09:00:00",
    "2022-02-11 10:00:00",
    "2022-02-11 11:00:00",
    "2022-02-11 12:00:00",
    "2022-02-11 13:00:00",
    "2022-02-14 09:00:00",
    "2022-02-14 10:00:00",
    "2022-02-14 11:00:00",
    "2022-02-14 12:00:00",
    "2022-02-14 13:00:00",
    "2022-02-15 09:00:00",
    "2022-02-15 10:00:00",
    "2022-02-15 11:00:00",
    "2022-02-15 12:00:00",
    "2022-02-15 13:00:00",
    "2022-02-16 09:00:00",
    "2022-02-16 10:00:00",
    "2022-02-16 11:00:00",
    "2022-02-16 12:00:00",
    "2022-02-16 13:00:00",
    "2022-02-17 09:00:00",
    "2022-02-17 10:00:00",
    "2022-02-17 11:00:00",
    "2022-02-17 12:00:00",
    "2022-02-17 13:00:00",
]

intraday_date_time = pd.to_datetime(intraday_date_time)

intraday_df = pd.DataFrame(
    {
        "time": intraday_date_time,
        "current_intraday_high": [1, 2, 3, 2, 1,
                                  3, 4, 5, 4, 3,
                                  5, 6, 7, 6, 5,
                                  7, 8, 9, 8, 7,
                                  5, 6, 7, 6, 5,
                                  ],
        "yesterdays_highest_high": [np.nan, np.nan, np.nan, np.nan, np.nan,
                                    3, 3, 3, 3, 3,
                                    5, 5, 5, 5, 5,
                                    7, 7, 7, 7, 7,
                                    5, 5, 5, 5, 5,
                                    ],
    },
)

print(intraday_df)

# intraday_df.to_csv('intraday_df.csv', index=True)

###################################################
# CREATE THE ROLLING DAILY HIGHEST HIGH COLUMN
###################################################

# Attempt, not working obviously: each row is compared on its own, so the
# day's earlier highs are never "remembered".
intraday_df['current_highest_high'] = np.where(intraday_df['current_intraday_high'] > intraday_df['yesterdays_highest_high'],
                                               intraday_df['current_intraday_high'], np.nan)

print(intraday_df)
```

I'll be back tomorrow night (11:00 PM Central-ish) to review.

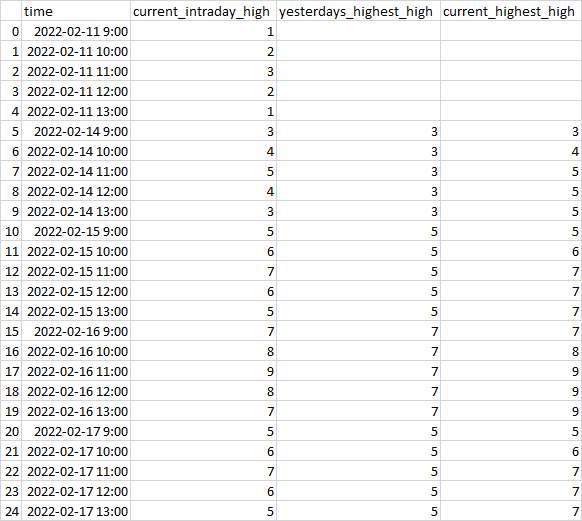

Answer:

You could try:

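```python
# Group by calendar day, take the running (cumulative) max within each day,
# then take the row-wise max of the two running columns.
intraday_df.assign(current_highest_high=intraday_df
                   .groupby(intraday_df.time.dt.normalize()).cummax()
                   [["current_intraday_high", "yesterdays_highest_high"]]
                   .max(axis=1, skipna=False))
```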

Output:

|    | time                | current_intraday_high | yesterdays_highest_high | current_highest_high |
|---:|:--------------------|----------------------:|------------------------:|---------------------:|
|  0 | 2022-02-11 09:00:00 | 1 | nan | nan |
|  1 | 2022-02-11 10:00:00 | 2 | nan | nan |
|  2 | 2022-02-11 11:00:00 | 3 | nan | nan |
|  3 | 2022-02-11 12:00:00 | 2 | nan | nan |
|  4 | 2022-02-11 13:00:00 | 1 | nan | nan |
|  5 | 2022-02-14 09:00:00 | 3 | 3 | 3 |
|  6 | 2022-02-14 10:00:00 | 4 | 3 | 4 |
|  7 | 2022-02-14 11:00:00 | 5 | 3 | 5 |
|  8 | 2022-02-14 12:00:00 | 4 | 3 | 5 |
|  9 | 2022-02-14 13:00:00 | 3 | 3 | 5 |
| 10 | 2022-02-15 09:00:00 | 5 | 5 | 5 |
| 11 | 2022-02-15 10:00:00 | 6 | 5 | 6 |
| 12 | 2022-02-15 11:00:00 | 7 | 5 | 7 |
| 13 | 2022-02-15 12:00:00 | 6 | 5 | 7 |
| 14 | 2022-02-15 13:00:00 | 5 | 5 | 7 |
| 15 | 2022-02-16 09:00:00 | 7 | 7 | 7 |
| 16 | 2022-02-16 10:00:00 | 8 | 7 | 8 |
| 17 | 2022-02-16 11:00:00 | 9 | 7 | 9 |
| 18 | 2022-02-16 12:00:00 | 8 | 7 | 9 |
| 19 | 2022-02-16 13:00:00 | 7 | 7 | 9 |
| 20 | 2022-02-17 09:00:00 | 5 | 5 | 5 |
| 21 | 2022-02-17 10:00:00 | 6 | 5 | 6 |
| 22 | 2022-02-17 11:00:00 | 7 | 5 | 7 |
| 23 | 2022-02-17 12:00:00 | 6 | 5 | 7 |
| 24 | 2022-02-17 13:00:00 | 5 | 5 | 7 |
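The same chain can be unpacked into named steps. The variant below is a sketch, not the answerer's exact code: it selects the two columns before calling cummax() so the time column is not carried through, and it assigns the result back, since assign() returns a new frame and leaves intraday_df unchanged. The mock data is rebuilt compactly (five hourly bars per day), and day and daily_cummax are illustrative names:

```python
import numpy as np
import pandas as pd

# The question's mock data, rebuilt compactly: bars at 09:00-13:00 each day.
dates = ["2022-02-11", "2022-02-14", "2022-02-15", "2022-02-16", "2022-02-17"]
intraday_df = pd.DataFrame({
    "time": pd.to_datetime([f"{d} {h:02d}:00:00" for d in dates for h in range(9, 14)]),
    "current_intraday_high": [1, 2, 3, 2, 1, 3, 4, 5, 4, 3, 5, 6, 7, 6, 5,
                              7, 8, 9, 8, 7, 5, 6, 7, 6, 5],
    "yesterdays_highest_high": [np.nan] * 5 + [3] * 5 + [5] * 5 + [7] * 5 + [5] * 5,
})

# Step 1: one group key per calendar day (time of day dropped).
day = intraday_df["time"].dt.normalize()

# Step 2: running max of both columns within each day; it restarts each date.
daily_cummax = intraday_df.groupby(day)[
    ["current_intraday_high", "yesterdays_highest_high"]
].cummax()

# Step 3: row-wise max of the two running columns; skipna=False keeps NaN on
# the first day, where there is no previous high to compare against.
intraday_df["current_highest_high"] = daily_cummax.max(axis=1, skipna=False)
print(intraday_df)
```

Since yesterdays_highest_high is constant within a day, its cummax is a no-op; it is kept in step 2 only to mirror the one-liner.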