Python 3.11.0. I read the documentation at

Why does `int.bit_count()` not count the 0 bits?

CodePudding user response:

Counting 1-bits is a fairly common need, for example when you use an integer to represent a set, where the 1-bits mark the elements in the set, and you want to know the size of the set.

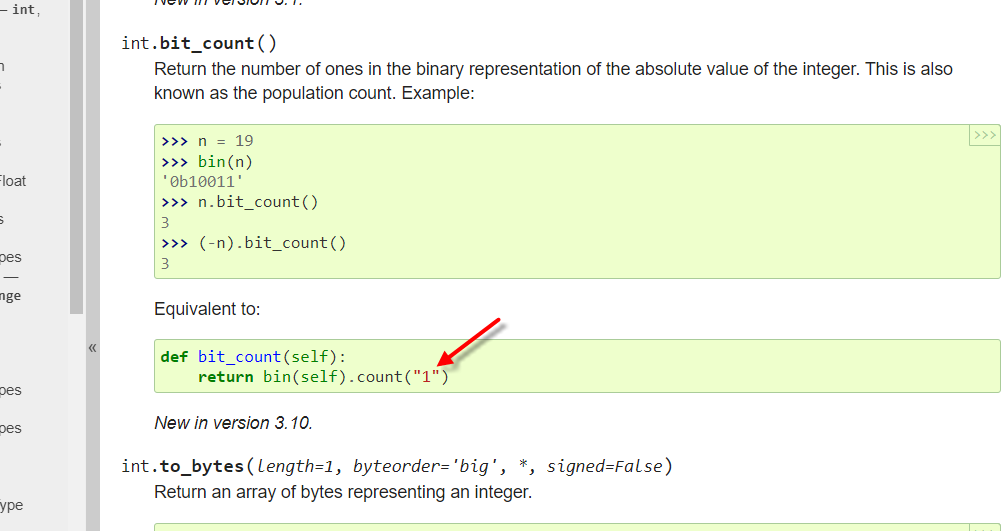

As its documentation says, it was added in Python 3.10. So you visit the What’s New In Python 3.10 page and search for it. You see it came from bpo-29882, where you can read the discussion about use cases, the name, and more.

CodePudding user response:

Counting zeros in a positive number does not make much sense (the same goes for counting ones in a negative two's-complement number).

Only the ones are really significant. What should be done with the non-significant zeros on the left?

- If you count the non-significant zeros on the left, the number of zeros depends on the size of the variable. How many zeros are in the number 1? In a 16-bit variable? In a 32-bit one? In an arbitrarily long integer (like Python uses)?

- If you don't count the non-significant zeros on the left, how many zeros are in 0 then? And how would you code such a function efficiently?

Compare with counting ones:

- The number of ones is clearly defined and understandable for everyone.

- The number of ones does not depend on the size of the variable and gives the same result even for arbitrarily long numbers, in any language.

- It is very easy to implement in both software and hardware for any data width.

So, by convention, bit count means the number of ones in a number, and many languages use this convention.

Another common name is popcount (from "population count"), and some languages use that name as well.
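On the "easy to implement" point, here is one possible software version, a sketch using the well-known trick of clearing the lowest set bit in each step (often attributed to Kernighan). This is not how CPython implements `int.bit_count()`; the function name `popcount` is just chosen for the example.

```python
def popcount(n):
    """Count the 1-bits in the absolute value of n."""
    n = abs(n)  # int.bit_count() also counts bits of the absolute value
    count = 0
    while n:
        n &= n - 1  # clears the lowest 1-bit
        count += 1
    return count

print(popcount(0b1011), popcount(0), popcount(2**100))
```

The loop runs once per 1-bit, and it works for integers of any length without knowing a width in advance.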