I have a dataframe like as shown below

df = pd.DataFrame({'subject_id':[1,1,1,1,1,1,1,2,2,2,2,2],

'invoice_id':[1,2,3,4,5,6,7,8,9,10,11,12],

'purchase_date' :['2017-04-03 12:35:00','2017-04-03 12:50:00','2018-04-05 12:59:00','2018-05-04 13:14:00','2017-05-05 13:37:00','2018-07-06 13:39:00','2018-07-08 11:30:00','2017-04-08 16:00:00','2019-04-09 22:00:00','2019-04-11 04:00:00','2018-04-13 04:30:00','2017-04-14 08:00:00'],

'val' :[5,5,5,5,1,6,5,5,8,3,4,6],

'Prod_id':['A1','A1','C1','A1','E1','Q1','G1','F1','G1','H1','J1','A1']})

df['purchase_date'] = pd.to_datetime(df['purchase_date'])

I would like to do the below

a) group the data by subject_id at 3 month intervals (using purchase date column)

b) Compute statistics such as mean, sum, nunique and count for each of the group based on their other variables such as prod_id, val etc

For ex: the earliest purchase date in df starts at 2017-04-03. Here starting month in the dataset is April. So, we count 3 months from Apr. So, APR, MAY and JUN will be M1 and July, Aug and Sept will be M2 and so on. We do this to create data at 3-month intervals.

whenever there is no data for in between for 3 months, we put that as zero (0)

So, I tried something like below based on online research

length_of_purchase_date = 10

date_month_map = {

str(x)[:length_of_purchase_date]: 'M_%s' % (i 1) for i, x in enumerate(

sorted(data_df.reset_index()['purchase_date'].unique(), reverse=True)

)

}

df.reset_index().groupby(['subject_id',

pd.Grouper(key='pruchase_date', freq='3M')

]).agg({

'val': [sum, mean,count],

})

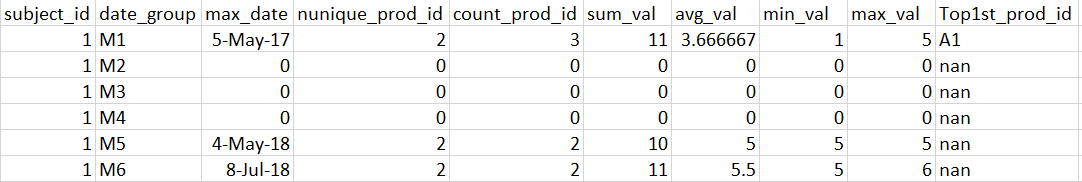

I expect my output to be like as shown below (shown for subject_id = 1). Please note that I have to do this on big data with millions of rows.

CodePudding user response:

Use:

#sorting per subject_id, purchase_date

df = df.sort_values(['subject_id','purchase_date'])

#create month groups by convert to month periods with subtract min values

per = df['purchase_date'].dt.to_period('m').astype('int')

df['date_group'] = (per.sub(per.min()) // 3 1)

#custom function

def f(x):

#get counts

y = x.value_counts()

#filter max values anf filter out if counts is 1

y = y[y.eq(y.max()) & y.ne(1)]

#if duplicates, empty y get NaN, value if exist one maximum non 1 value

return y.index[0] if len(y) == 1 else np.nan

#aggregate per both columns

df = df.groupby(['subject_id', 'date_group']).agg(max_date=('purchase_date','max'),

nunique=('Prod_id','nunique'),

count_prod_id=('Prod_id','count'),

sum_val=('val','sum'),

avg_val=('val','mean'),

min_val=('val','min'),

max_val=('val','max'),

Top1st_prod_id=('Prod_id',f))

#dictionary for fillna by 0

d = dict.fromkeys(df.columns.difference(['max_date','Top1st_prod_id']), 0)

#add missing date_group with reindex

df = (df.reset_index(level=0)

.groupby('subject_id')

.apply(lambda x: x.reindex(range(1, x.index.max() 1)))

.fillna(d))

#custom formating of columns

df['max_date'] = df['max_date'].dt.strftime('%d-%b-%y')

df = df.drop('subject_id', axis=1).reset_index()

df['date_group'] = 'M' df['date_group'].astype(str)

print (df)

subject_id date_group max_date nunique count_prod_id sum_val \

0 1 M1 05-May-17 2.0 3.0 11.0

1 1 M2 NaN 0.0 0.0 0.0

2 1 M3 NaN 0.0 0.0 0.0

3 1 M4 NaN 0.0 0.0 0.0

4 1 M5 04-May-18 2.0 2.0 10.0

5 1 M6 08-Jul-18 2.0 2.0 11.0

6 2 M1 14-Apr-17 2.0 2.0 11.0

7 2 M2 NaN 0.0 0.0 0.0

8 2 M3 NaN 0.0 0.0 0.0

9 2 M4 NaN 0.0 0.0 0.0

10 2 M5 13-Apr-18 1.0 1.0 4.0

11 2 M6 NaN 0.0 0.0 0.0

12 2 M7 NaN 0.0 0.0 0.0

13 2 M8 NaN 0.0 0.0 0.0

14 2 M9 11-Apr-19 2.0 2.0 11.0

avg_val min_val max_val Top1st_prod_id

0 3.666667 1.0 5.0 A1

1 0.000000 0.0 0.0 NaN

2 0.000000 0.0 0.0 NaN

3 0.000000 0.0 0.0 NaN

4 5.000000 5.0 5.0 NaN

5 5.500000 5.0 6.0 NaN

6 5.500000 5.0 6.0 NaN

7 0.000000 0.0 0.0 NaN

8 0.000000 0.0 0.0 NaN

9 0.000000 0.0 0.0 NaN

10 4.000000 4.0 4.0 NaN

11 0.000000 0.0 0.0 NaN

12 0.000000 0.0 0.0 NaN

13 0.000000 0.0 0.0 NaN

14 5.500000 3.0 8.0 NaN