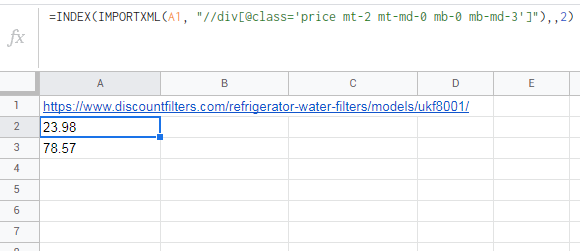

I'm having trouble pulling just the price for these sites onto a google sehet. Instead, I'm pulling multiple rows/currencies, etc. and I don't know how to fix it =/

Thank you all in advance!!

CodePudding user response:

regarding issues on multiple websites you are trying to scrape.. ImportXML is good for basic tasks, but won't get you too far if you are serious in scraping:

If the target website data requires some cleanup post-processing, it's getting very complicated since you are now "programming with excel formulas", rather painful process compared to regular code writing in conventional programming languages

There is no proper launch & cache control so the function can be triggered occasionally and if the HTTP request fails, cells will be populated with ERR! values

The approach only works with most basic websites (no SPAs rendered in browsers can be scraped this way, any basic web scraping protection or connectivity issue breaks the process, no control over HTTP request geo location, or number of retries)

When ImportXML() fails, the second approach to web scraping in Google Sheets is usually to write some custom Google Apps Script. This approach is much more flexible, just write Javascript code and deploy it as Google Sheets addon, but it takes a lot of time, and is not too easy to debug and iterate over - definitely not low code.

And the third approach is to use proper tools (automation framework scraping engine) and use Google Sheets just for storage purposes: https://youtu.be/uBC752CWTew

CodePudding user response:

Absolutely right Anthony about ImportXML limits. You mention the Google Apps Script alternative... and this is exactly what I did. I've built the importXML alternative: ImportFromWeb You can install it from Google workspace and it works as importXML but overpasses its limits. https://workspace.google.com/marketplace/app/importfromweb_web_scraping_in_google_she/278587576794

Proper tools is a good alternative obviously...