The outcome should be like this:

CodePudding user response:

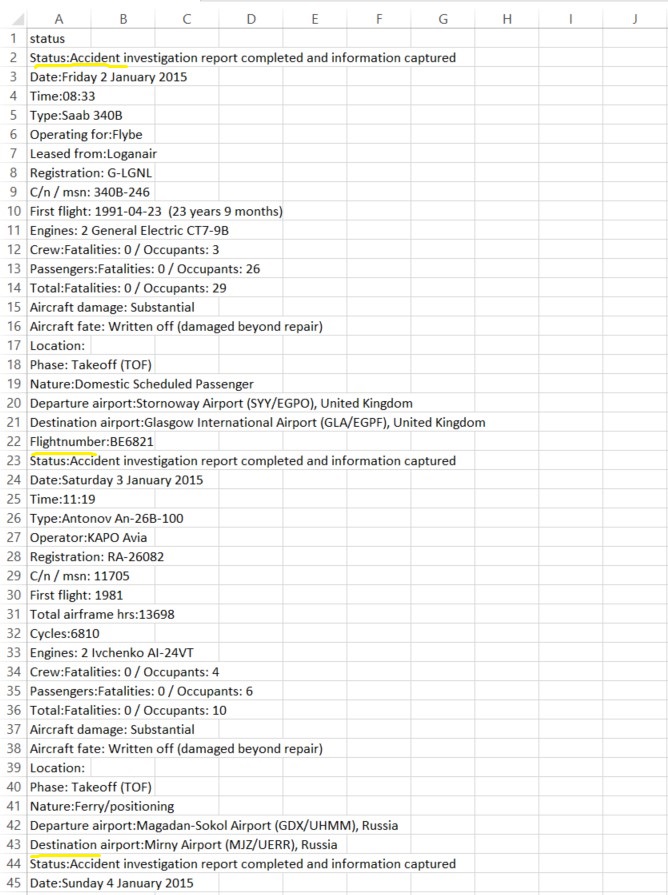

It looks the values of tl are strings, e.g. 'Status:Accident investigation report completed and information captured'.

Converting the list of strings into a pd.DataFrame gets you a single column with all the values in the list.

If you want to use the "name" of the string, e.g. Status as a column header, you'll need to separate it from the rest of the text.

# maxsplit of 1 so we don't accidentally split up the values, e.g. time

title, text = title.split(":", maxsplit=1)

This looks like

('Status', 'Accident investigation report completed and information captured')

Now we create a dictionary

row_dict[title] = text

Giving us

{'Status': 'Accident investigation report completed and information captured'}

We will add to this same dictionary in the last loop

# old

for i in table1.find_all('tr'):

title = i.text

tl.append(title)

# new

row_dict = {}

for i in table1.find_all('tr'):

title = i.text

title, text = title.split(":", maxsplit=1)

row_dict[title] = text

After we've gathered all the data from page, i.e. completed the row_dict loop, we append to tl.

row_dict = {}

for i in table1.find_all('tr'):

title = i.text

title, text = title.split(":", maxsplit=1)

row_dict[title] = text

tl.append(row_dict)

All together now

import requests

from bs4 import BeautifulSoup

import pandas as pd

from datetime import datetime

import re

import concurrent.futures

import itertools

from random import randint

from time import sleep

def scraping(year):

headers = {

'accept':'*/*',

'user-agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.110 Safari/537.36',

}

url = f'https://aviation-safety.net/database/dblist.php?Year={year}&sorteer=datekey&page=1'

#sleep(randint(1,3))

req = requests.get(url, headers=headers)

soup = BeautifulSoup(req.text,'html.parser')

page_container = soup.find('div',{'class':'pagenumbers'})

pages = max([int(page['href'].split('=')[-1]) for page in page_container.find_all('a')])

#info = []

tl = []

for page in range(1,pages 1):

new_url = f'https://aviation-safety.net/database/dblist.php?Year={year}&lang=&page={page}'

print(new_url)

#sleep(randint(1,3))

data = requests.get(new_url,headers=headers)

soup = BeautifulSoup(data.text,'html.parser')

table = soup.find('table')

for index,row in enumerate(table.find_all('tr')):

if index == 0:

continue

link_ = 'https://aviation-safety.net/' row.find('a')['href']

#sleep(randint(1,3))

new_page = requests.get(link_, headers=headers)

new_soup = BeautifulSoup(new_page.text, 'lxml')

table1 = new_soup.find('table')

# make changes here!!!!!!!

row_dict = {}

for i in table1.find_all('tr'):

title = i.text

title, text = title.split(":", maxsplit=1)

row_dict[title] = text

tl.append(row_dict)

df= pd.DataFrame(tl)

df.to_csv(f'{year}_aviation-safety_new.csv', encoding='utf-8-sig', index=False)

if __name__ == "__main__":

START = 2015

STOP = 2016

years = [year for year in range(START,STOP 1)]

print(f'Scraping {len(years)} years of data')

with concurrent.futures.ThreadPoolExecutor(max_workers=60) as executor:

final_list = executor.map(scraping,years)

CodePudding user response:

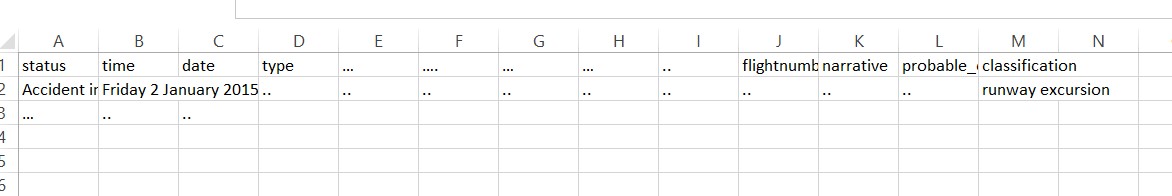

The read_html() method offers convenient access to such datasets.

>>> url = "https://web.archive.org/web/20221027040903/https://aviation-safety.net/database/dblist.php?Year=2015"

>>>

>>> dfs = pd.read_html(url)

>>>

>>> df = dfs[1].drop(columns="operator").dropna(axis=1, how="all")

>>> df["date"] = pd.to_datetime(df.date.str.replace("??-", "01-", regex=False), format="%d-%b-%Y")

>>> df.set_index("date")

type registration fat. location cat

date

2015-01-02 Saab 340B G-LGNL 0 Stornoway Ai... A1

2015-01-03 Antonov An-26B-100 RA-26082 0 Magadan-Soko... A1

2015-01-04 Fokker 50 5Y-SIB 0 Nairobi-Jomo... A1

2015-01-08 Bombardier Challenger 300 PR-YOU 0 São Paulo-Co... O1

2015-01-09 Cessna 208B Grand Caravan 8R-GAB 0 Matthews Rid... A2

... ... ... ... ... ..

2015-06-11 Eclipse 500 N508JA 0 Sacramento-E... A2

2015-06-11 Hawker 800XP N497AG 0 Port Harcour... A1

2015-06-12 Boeing 737-33A VH-NLK 0 near Kosrae Airpo... I2

2015-06-15 Antonov An-2R RA-84553 0 Tatsinsky di... A1

2015-06-16 Boeing 737-322 (WL) LY-FLB 0 Aktau Airpor... O1

[100 rows x 5 columns]

It's hard to control the user-agent header, so either use a cooperative site, or do a bit of extra work with requests or curl to obtain the html text beforehand.