I have 2 Data Frames with matched and unmatched column names, I want to compare the column names of the both the frames and print a table/dataframe with unmatched column names. Please someone help me on this

I have no idea how can i achieve this

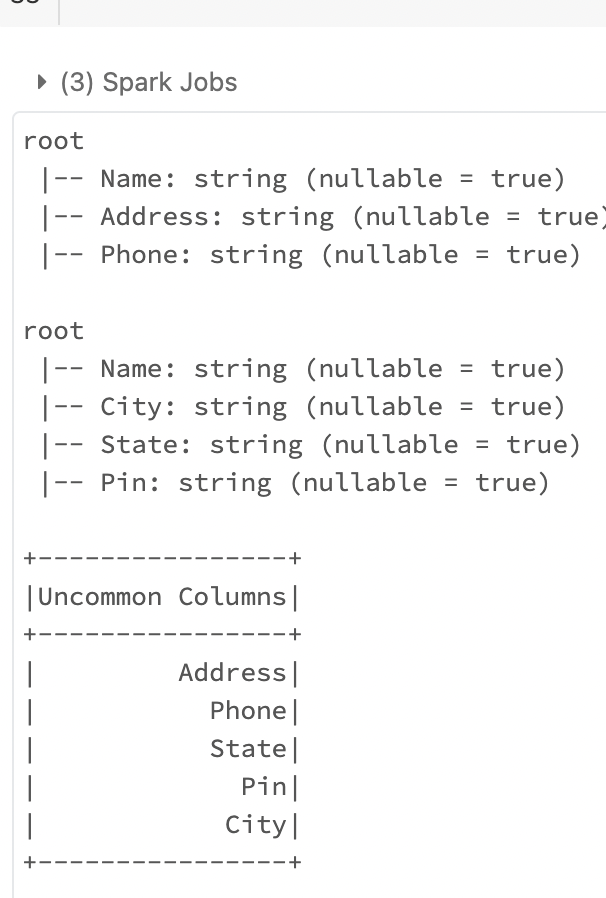

Below is the expectation

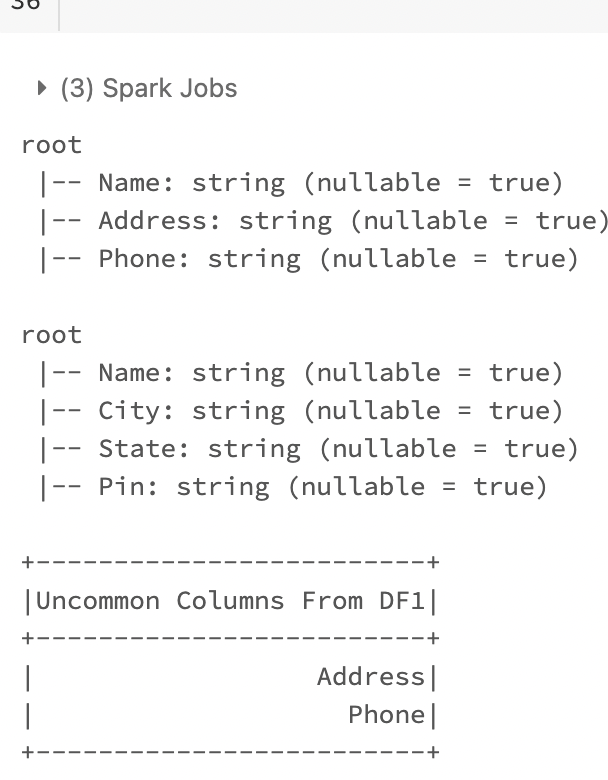

Update: As per comments the requirement is column names from One data frame only (which are not in the second dataframe)

df3 = spark.createDataFrame([Row(x) for x in list(set(df1.schema.names) - set(df2.schema.names))]).toDF("Uncommon Columns From DF1");

CodePudding user response:

You can easily do this by using sets operations

Data Preparation

s1 = StringIO("""

firstName,lastName,age,city,country

Alex,Smith,19,SF,USA

Rick,Mart,18,London,UK

""")

df1 = pd.read_csv(s1,delimiter=',')

sparkDF1 = sql.createDataFrame(df1)

s2 = StringIO("""

firstName,lastName,age

Alex,Smith,21

""")

df2 = pd.read_csv(s2,delimiter=',')

sparkDF2 = sql.createDataFrame(df2)

sparkDF1.show()

--------- -------- --- ------ -------

|firstName|lastName|age| city|country|

--------- -------- --- ------ -------

| Alex| Smith| 19| SF| USA|

| Rick| Mart| 18|London| UK|

--------- -------- --- ------ -------

sparkDF2.show()

--------- -------- ---

|firstName|lastName|age|

--------- -------- ---

| Alex| Smith| 21|

--------- -------- ---

Columns - Intersections & Difference

common = set(sparkDF1.columns) & set(sparkDF2.columns)

diff = set(sparkDF1.columns) - set(sparkDF2.columns)

print("Common - ",common)

## Common - {'lastName', 'age', 'firstName'}

print("Difference - ",diff)

## Difference - {'city', 'country'}

Additionally you can create tables/dataframes using the above variable values