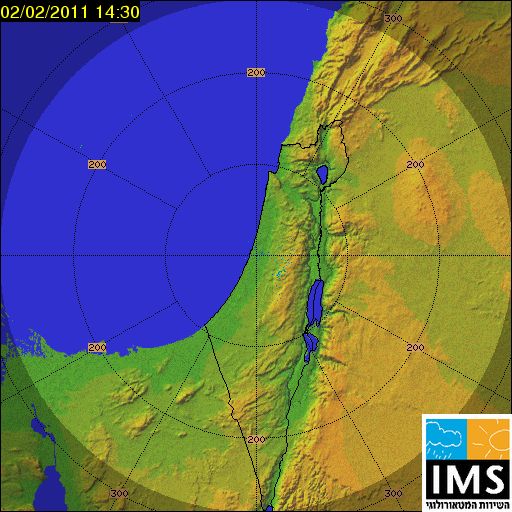

I have two images. The first one has a white background; it is a 512x512 PNG with a bit depth of 32. From this image I want to read the pixels that are not white (the clouds) and put them on another image.

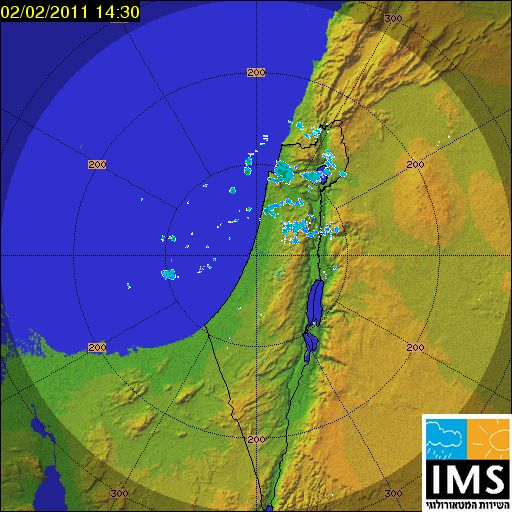

The second image is the one I want to put the pixels on. It is also 512x512, but it is a JPG with a bit depth of 24.

This is the class I'm using to read and set the pixels:

    using System;
    using System.Collections.Generic;
    using System.Drawing;
    using System.Linq;
    using System.Text;
    using System.Threading.Tasks;

    namespace Weather
    {
        public class RadarPixels
        {
            public RadarPixels(Bitmap image1, Bitmap image2)
            {
                ReadSetPixels(image1, image2);
            }

            private void ReadSetPixels(Bitmap image1, Bitmap image2)
            {
                for (int x = 0; x < image1.Width; x++)
                {
                    for (int y = 0; y < image1.Height; y++)
                    {
                        Color pixelColor = image1.GetPixel(x, y);
                        if (pixelColor != Color.FromArgb(255, 255, 255, 255))
                        {
                            image2.SetPixel(x, y, pixelColor);
                        }
                    }
                }

                image2.Save(@"d:\mynewbmp.bmp");
                image1.Dispose();
                image2.Dispose();
            }
        }
    }

Using it in Form1:

    public Form1()
    {
        InitializeComponent();

        RadarPixels rp = new RadarPixels(
            new Bitmap(Image.FromFile(@"D:\1.png")),
            new Bitmap(Image.FromFile(@"D:\2.jpg")));
    }

where 1.png is the image with the white background.

The resulting image, mynewbmp.bmp, is:

I'm not sure why it has white borders around the copied pixels, or whether it copied all the pixel colors correctly.

If I change the alpha in Color.FromArgb(255,255,255,255) from 255 to 1 or to 100, mynewbmp.bmp comes out the same as 1.png. Only with an alpha of 255 does the result get closer to what I wanted.

CodePudding user response:

As stated in the comments, the white pixels are not exactly white. You should therefore calculate each pixel's distance to white and copy the pixel only if that distance is greater than some tolerance value.

To get the desired result, adjust the tolerance value in the code below.

    using System;
    using System.Collections.Generic;
    using System.Drawing;
    using System.Linq;
    using System.Text;
    using System.Threading.Tasks;

    namespace Weather
    {
        public class RadarPixels
        {
            public RadarPixels(Bitmap image1, Bitmap image2)
            {
                ReadSetPixels(image1, image2);
            }

            private void ReadSetPixels(Bitmap image1, Bitmap image2)
            {
                double tolerance = 46; // Don't forget to adjust this.

                for (int x = 0; x < image1.Width; x++)
                {
                    for (int y = 0; y < image1.Height; y++)
                    {
                        Color pixelColor = image1.GetPixel(x, y);

                        // Copy only pixels that are far enough from white.
                        if (GetDistance(pixelColor, Color.White) > tolerance)
                        {
                            image2.SetPixel(x, y, pixelColor);
                        }
                    }
                }

                image2.Save(@"d:\mynewbmp.bmp");
                image1.Dispose();
                image2.Dispose();
            }

            // Euclidean distance between two colors in RGB space (alpha is ignored).
            private double GetDistance(Color color1, Color color2)
            {
                double dr = color1.R - color2.R;
                double dg = color1.G - color2.G;
                double db = color1.B - color2.B;
                return Math.Sqrt(dr * dr + dg * dg + db * db);
            }
        }
    }
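
To get a feel for what a tolerance of 46 means, here is a minimal sketch that isolates the distance check so it can be tried on individual colors. The class name `WhiteDistance` and the helper `IsCloud` are made up for illustration and are not part of the answer's code; the sample colors in the note after the block are hypothetical too.

```csharp
using System;
using System.Drawing;

// Hypothetical helper class, for illustration only.
public static class WhiteDistance
{
    // Euclidean distance between two colors in RGB space (alpha is ignored),
    // the same measure as GetDistance in the answer above.
    public static double GetDistance(Color a, Color b)
    {
        double dr = a.R - b.R;
        double dg = a.G - b.G;
        double db = a.B - b.B;
        return Math.Sqrt(dr * dr + dg * dg + db * db);
    }

    // True when the pixel is far enough from white to be copied.
    public static bool IsCloud(Color pixel, double tolerance)
    {
        return GetDistance(pixel, Color.White) > tolerance;
    }
}
```

With a tolerance of 46, a near-white pixel such as (250, 250, 250) is at a distance of about 8.7 from white and is skipped, (230, 230, 230) is at about 43.3 and is still skipped, while (200, 200, 200) is at about 95.3 and is copied. Checking a few edge pixels from your own image this way is one way to pick a starting tolerance.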

CodePudding user response:

As stated by others, some pixels are not pure white, so they are included in the final image. Jtxkopt's solution of setting a tolerance is great, but depending on the quality of the input images and the smoothness of the edges, the tolerance may need to be adjusted repeatedly to get good results.

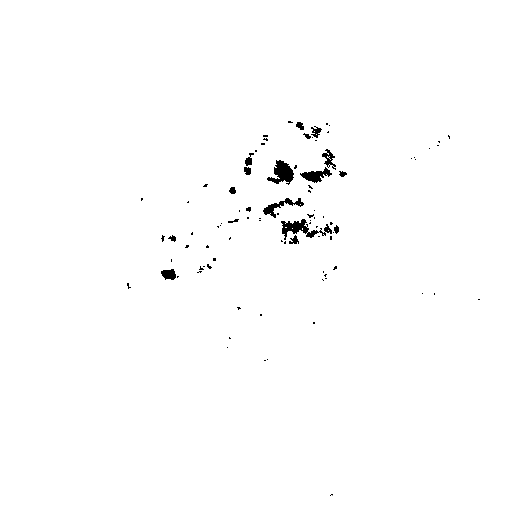

In the end, your solution and Jtxkopt's solution both simulate a binary mask. That means you are compositing two images together based on a mask image that has only two values, black and white: where a mask pixel is black, draw image1, and where it is white, draw image2. In your case the two values are white and not-white. This is your theoretical binary mask image that has only black and white pixels:

Binary masks have very rough edges and are sometimes referred to as "cut out" masks because it's like cutting things out with scissors. When creating a binary mask, the main difficulty is deciding which pixels to turn white and which to turn black, and the trouble is almost always at the edges of things. Jtxkopt's tolerance solution just helps make that determination and draw that final line. The user Andy suggested to use

Here is a simple solution that takes each pixel, converts it to a gray value, and uses that gray value to set the alpha channel (transparency) of image1 so that it can be composited on top of image2.

    using System;
    using System.Collections.Generic;
    using System.Drawing;
    using System.Drawing.Drawing2D; // CompositingMode, CompositingQuality
    using System.Linq;
    using System.Text;
    using System.Threading.Tasks;

    namespace Weather
    {
        public class RadarPixels
        {
            public RadarPixels(Bitmap image1, Bitmap image2)
            {
                ReadSetPixels(image1, image2);
            }

            private void ReadSetPixels(Bitmap image1, Bitmap image2)
            {
                for (int x = 0; x < image1.Width; x++)
                {
                    for (int y = 0; y < image1.Height; y++)
                    {
                        Color pixelColor = image1.GetPixel(x, y);

                        // Average R, G, and B to get gray, then invert by subtracting from 255,
                        // so white becomes fully transparent and dark pixels become opaque.
                        int invertedGrayValue = 255 - (int)((pixelColor.R + pixelColor.G + pixelColor.B) / 3);

                        // This keeps the original pixel color but sets the alpha value.
                        image1.SetPixel(x, y, Color.FromArgb(invertedGrayValue, pixelColor));
                    }
                }

                // Composite image1 on top of image2.
                using (Graphics g = Graphics.FromImage(image2))
                {
                    g.CompositingMode = CompositingMode.SourceOver;
                    g.CompositingQuality = CompositingQuality.HighQuality;
                    g.DrawImage(image1, new Point(0, 0));
                }

                image2.Save(@"d:\mynewbmp.bmp");
                image1.Dispose();
                image2.Dispose();
            }
        }
    }

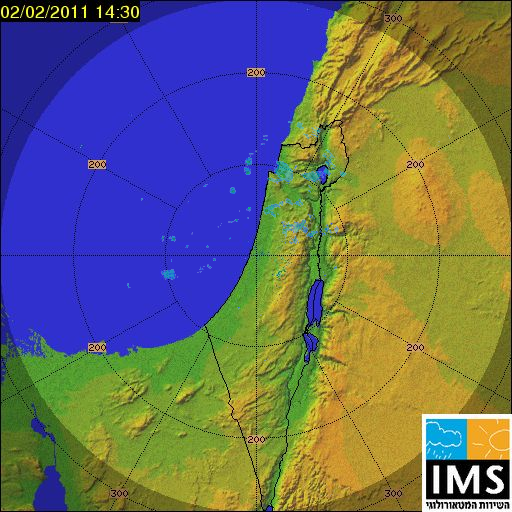

The result:

This approach has pros and cons. The pros are that you don't have to adjust a tolerance value, and that the resulting image isn't just some pixels from image1 and some pixels from image2; the pixels are blended from both images. The con is that the clouds are slightly transparent and not as pronounced. That can be resolved by adjusting the contrast of the grayscale mask so that light areas are lighter and dark areas are darker. Or you can composite image1 onto image2 multiple times for an "additive" effect. Or you can add another variable to decrease or increase the final alpha value.
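
As a rough sketch of the last option, the alpha calculation can be pulled into a helper with an extra multiplier. The names `AlphaMask`, `AlphaFromWhiteness`, `Blend`, and the `gain` parameter are assumptions made for illustration; they are not part of the code above. `Blend` only approximates what SourceOver compositing does for a single pixel over an opaque background, and GDI+ may round slightly differently.

```csharp
using System;
using System.Drawing;

// Hypothetical helper class, for illustration only.
public static class AlphaMask
{
    // Inverted gray value scaled by a gain factor and clamped to 0..255.
    // gain > 1 makes the clouds more opaque, gain < 1 makes them fainter.
    public static int AlphaFromWhiteness(Color c, double gain)
    {
        int gray = (c.R + c.G + c.B) / 3;
        double alpha = (255 - gray) * gain;
        return (int)Math.Max(0, Math.Min(255, Math.Round(alpha)));
    }

    // Approximate source-over blend of one pixel onto an opaque background:
    // each channel is a weighted mix of source and destination.
    public static Color Blend(Color src, Color dst, int alpha)
    {
        int r = (src.R * alpha + dst.R * (255 - alpha) + 127) / 255;
        int g = (src.G * alpha + dst.G * (255 - alpha) + 127) / 255;
        int b = (src.B * alpha + dst.B * (255 - alpha) + 127) / 255;
        return Color.FromArgb(255, r, g, b);
    }
}
```

In the loop above, `invertedGrayValue` could then be replaced by something like `AlphaMask.AlphaFromWhiteness(pixelColor, 2.0)`. With a gain of 2.0, a light gray edge pixel such as (200, 200, 200) gets an alpha of 110 instead of 55, while pure white still gets 0 and stays fully transparent.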

I hope this alternative solution is useful.