I have a workflow file with three similar jobs that run a health check on a website, but each of them behaves differently. The behavior seems completely random and I can't find a valid reason for it. I have tried every combination of wget and curl commands I could think of.

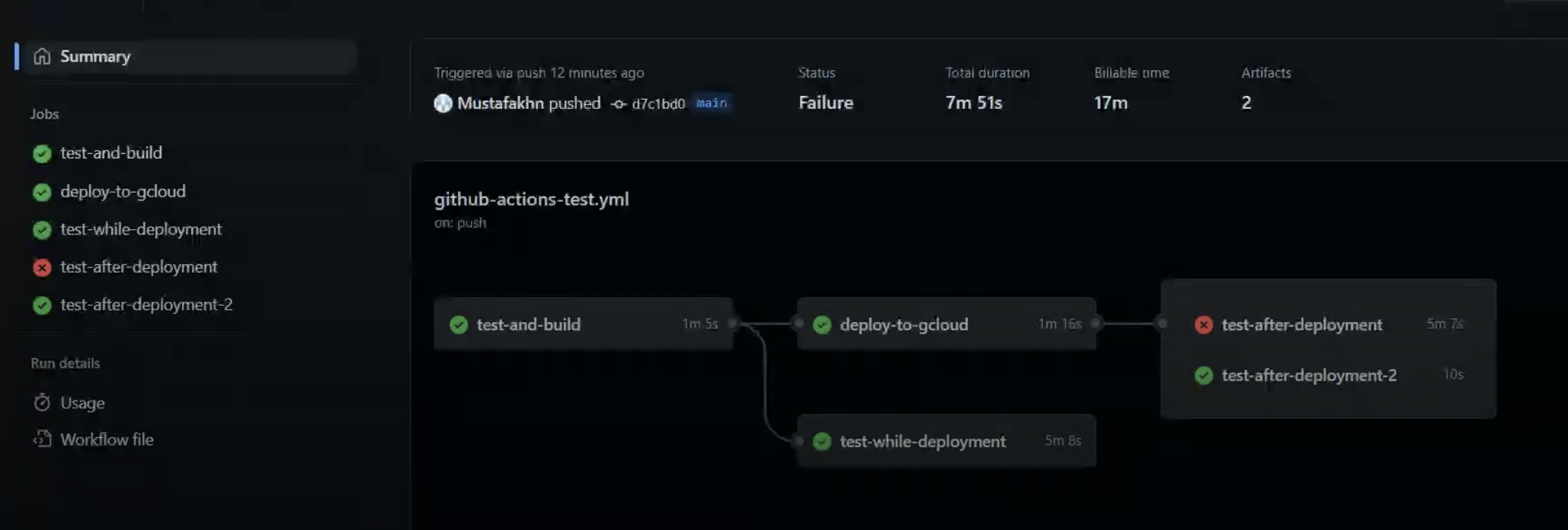

Here's a snapshot of the workflow jobs:

and here's the test.sh file

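    #!/bin/bash

    # Loop until the last pipeline succeeds, i.e. grep finds the commit SHA ($1).
    while [ "$status" != "0" ]; do
      sleep 5
      # curl <WEBSITE GOES HERE>
      wget -S --spider <WEBSITE GOES HERE> 2>&1 | grep HTTP/
      # curl -v --silent <WEBSITE GOES HERE> 2>&1 | grep -Po $1
      wget <WEBSITE GOES HERE> -q -O - | grep -Po "$1"
      # Exit status of the pipeline above, which is grep's status
      status=$?
    done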
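
The loop depends on what `$?` holds after a pipeline. By default the shell reports the exit status of the last command in the pipeline (here `grep`), not of `wget`. The sketch below uses `printf` as a stand-in for the real website, so the strings are made up for illustration:

```shell
#!/bin/bash
# Stand-in for "page fetched, but the SHA is not in it": grep exits 1.
no_match=0
printf 'old build\n' | grep -q 'new-sha' || no_match=$?

# Stand-in for "SHA found in the page": grep exits 0.
match=0
printf 'new-sha\n' | grep -q 'new-sha' || match=$?

# The first command fails (exit 3), yet the pipeline still reports grep's 0
# (default behaviour, i.e. without `set -o pipefail`).
upstream_failed=0
( printf 'new-sha\n'; exit 3 ) | grep -q 'new-sha' || upstream_failed=$?

echo "no_match=$no_match match=$match upstream_failed=$upstream_failed"
# prints: no_match=1 match=0 upstream_failed=0
```

So the loop only ends once `grep` actually finds the SHA in the downloaded page. A `200 OK` from the first `wget` does not affect `status`.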

I trigger it from the following workflow file:

    deploy-to-gcloud:
      needs: [ test-and-build ]
      runs-on: ubuntu-latest
      steps:
        - uses: actions/checkout@v3
        - name: Download a Build Artifact
          uses: actions/[email protected]
          with:
            name: build
            path: packages/client/
        - id: 'auth'
          uses: 'google-github-actions/auth@v1'
          with:
            credentials_json: '${{ secrets.GCP_SERVICE_ACCOUNT }}'
        - name: 'Set up Cloud SDK'
          uses: 'google-github-actions/setup-gcloud@v1'
        - name: 'Use gcloud CLI'
          run: |
            cd packages/client/
            mkdir ../deploy
            sudo mv build ../deploy/
            sudo mv app.yaml ../deploy/
            cd ../deploy
            gcloud info
            gcloud app deploy --version=${{ github.sha }}
            gcloud app browse --version=${{ github.sha }}

    test-after-deployment:
      runs-on: ubuntu-latest
      needs: [ deploy-to-gcloud ]
      steps:
        - uses: actions/checkout@v3
        - name: installing dependencies
          run: |
            sudo apt-get install wget
            wget -S <WEBSITE GOES HERE>
        - name: check website
          run: |
            # This is the step that does not return the expected output
            sudo chmod u+x .github/workflows/test.sh
            timeout 300 .github/workflows/test.sh ${{ github.sha }}

    test-while-deployment:
      runs-on: ubuntu-latest
      needs: [ test-and-build ]
      steps:
        - uses: actions/checkout@v3
        - name: installing dependencies
          run: |
            sudo apt-get install wget
            wget -S <WEBSITE GOES HERE>
        - name: check website
          run: |
            sudo chmod u+x .github/workflows/test.sh
            timeout 600 .github/workflows/test.sh ${{ github.sha }}

    test-after-deployment-2:
      runs-on: ubuntu-latest
      needs: [ deploy-to-gcloud ]
      steps:
        - uses: actions/checkout@v3
        - name: installing dependencies
          run: |
            sudo apt-get install wget
            wget -S <WEBSITE GOES HERE>
        - name: check website
          run: |
            sudo chmod u+x .github/workflows/test.sh
            timeout 600 .github/workflows/test.sh ${{ github.sha }}

I ran three similar jobs: test-after-deployment is the main job, while test-while-deployment and test-after-deployment-2 were created for troubleshooting.

I tried running the check in different ways, but it keeps failing without any repeating pattern.

I ran it 10 times each from my computer and from GitHub Codespaces, and recorded the results manually.

These runs were pushed from my local PC:

- Test 1 failed with (1st : wget & 2nd : curl)

- Test 2 passed with (all 4 commands)

- Test 3 passed with (both wget commands)

- Test 4 passed with (both wget commands)

- Test 5 failed with (wget, curl, wget)

- Test 6 passed with (both wget commands)

- Test 7 failed with (1st : wget & 2nd : curl)

- Test 8 passed with (1st : curl & 2nd: wget)

- Test 9 passed with (both curl commands)

- Test 10 passed with (both wget commands)

These runs were pushed from GitHub Codespaces:

- Test 1 failed with (1st : wget & 2nd : curl)

- Test 2 failed with (wget, curl, wget)

- Test 3 failed with (both wget commands)

- Test 4 passed with (all 4 commands)

- Test 5 passed with (both curl commands)

- Test 6 passed with (both wget commands)

- Test 7 failed with (1st : wget & 2nd : curl)

- Test 8 failed with (1st : curl & 2nd : wget)

- Test 9 failed with (both curl commands)

- Test 10 failed with (both wget commands)

I expected it to work reliably rather than fail most of the time.

UPDATE

When it fails, the script never finds the SHA in the HTML content and the step ends with an error:

    #!/bin/bash

    while [ "$status" != "0" ]; do
      sleep 5
      # curl <WEBSITE GOES HERE>
      wget -S --spider <WEBSITE GOES HERE> 2>&1 | grep HTTP/
      # curl -v --silent <WEBSITE GOES HERE> 2>&1 | grep -Po d7c1bd01d1ccdfe9357b534458c9cc59594796af
      wget <WEBSITE GOES HERE> -q -O - | grep -Po d7c1bd01d1ccdfe9357b534458c9cc59594796af
      status=$?
    done

    HTTP/1.1 200 OK
    HTTP/1.1 200 OK
    HTTP/1.1 200 OK
    HTTP/1.1 200 OK
    HTTP/1.1 200 OK
    .
    .
    .
    .
    .
    HTTP/1.1 200 OK
    HTTP/1.1 200 OK
    HTTP/1.1 200 OK
    HTTP/1.1 200 OK
    HTTP/1.1 200 OK
    Error: Process completed with exit code 124.
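
For context, exit code 124 is what GNU `timeout` returns when the time limit expires before the command finishes. The error above therefore means the loop never saw a match within the limit. A minimal demonstration, assuming GNU coreutils (which the ubuntu-latest runner provides) and using `sleep` as a stand-in for test.sh:

```shell
#!/bin/bash
# `sleep 5` cannot finish within 1 second, so timeout kills it
# and reports 124.
status=0
timeout 1 sleep 5 || status=$?

echo "exit status: $status"
# prints: exit status: 124
```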

Whenever it succeeds, it prints the matching content from the HTML:

    HTTP/1.1 200 OK
    HTTP/1.1 200 OK
    HTTP/1.1 200 OK
    HTTP/1.1 200 OK
    .
    .
    .
    .
    HTTP/1.1 200 OK
    HTTP/1.1 200 OK
    HTTP/1.1 200 OK
    HTTP/1.1 200 OK
    HTTP/1.1 200 OK
    HTTP/1.1 200 OK
    d7c1bd01d1ccdfe9357b534458c9cc59594796af


Update / Solution

It seems that a cache somewhere between the client and the server was serving stale responses to curl and wget, which is why the commands kept returning the same results.

I came across this article, which made me realize that caching might be the reason for this behavior.
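
One way to check whether a cache sits in between is to look at the response headers. The sketch below is only an illustration and does not come from the article. `cache_headers` is a hypothetical helper that keeps the headers commonly related to caching. Which of them actually appear depends on the server and on any proxy or CDN in front of it.

```shell
#!/bin/bash
# Hypothetical helper: read raw HTTP response headers on stdin and keep only
# the cache-related ones. The header list is a common selection, not exhaustive.
cache_headers() {
  # Strip the CR of the CRLF line endings, then filter case-insensitively.
  # "|| true" keeps the helper from failing when no such header is present.
  tr -d '\r' | grep -iE '^(age|cache-control|etag|expires|last-modified|via|x-cache):' || true
}

# Usage against a live site (placeholder URL as in the question):
#   curl --silent --head <WEBSITE GOES HERE> | cache_headers
```

A non-zero `Age` header, or a `Via` or `X-Cache` header, would suggest that the response came from an intermediate cache and not directly from the new deployment.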

I replaced the commands I was using before with these:

    curl -H 'Cache-Control: no-cache, no-store' -v --silent <WEBSITE GOES HERE> 2>&1 | grep -Po d7c1bd01d1ccdfe9357b534458c9cc59594796af

    wget --no-cache <WEBSITE GOES HERE> -q -O - | grep -Po d7c1bd01d1ccdfe9357b534458c9cc59594796af

So basically, you can send the 'Cache-Control: no-cache, no-store' header with the curl command and use the --no-cache flag with the wget command.
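
Putting this together, the polling loop could look roughly like the sketch below. It is not the exact script I ended up with. `fetch_uncached` and `wait_for_marker` are hypothetical helper names, the 10-second `--max-time` is an arbitrary choice, and it assumes curl is installed on the runner.

```shell
#!/bin/bash
# Sketch of a polling health check that asks caches not to serve stale copies.

fetch_uncached() {
  # Same Cache-Control request header as in the curl command above;
  # --max-time keeps a single hung request from stalling the loop.
  curl --silent --max-time 10 \
    -H 'Cache-Control: no-cache, no-store' \
    "$1"
}

wait_for_marker() {
  local url="$1" marker="$2" interval="${3:-5}"
  local body
  while true; do
    # "|| true": a failed request is treated like "marker not found yet".
    body=$(fetch_uncached "$url" || true)
    # Plain substring match, so the marker is never interpreted as a regex.
    case "$body" in
      *"$marker"*)
        echo "found $marker"
        return 0
        ;;
    esac
    sleep "$interval"
  done
}

# Usage inside test.sh (the workflow still wraps the script in `timeout`):
#   wait_for_marker '<WEBSITE GOES HERE>' "$1"
```

The function itself never gives up, so the surrounding `timeout 300 ...` in the workflow is still what bounds the total wait.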

Hopefully this helps anyone who gets stuck on a similar problem.