I have two dataframes that are given below

multi_df = pd.DataFrame({'multi_project_ID': ["Combo_1","Combo_2","Combo_3","Combo_4"],

'multi_items':[['Chips','Biscuits','Chocolates'],['Alcoholic Drinks','Juices','Fruits'],['Plants','Veggies','Chips'],['Cars']],

'multi_labels':[[1,2,3],[4,5,6],[8,9,10],[11]]})

single_df = pd.DataFrame({'single_project_ID': ["ABC_1","DEF_2","JKL_3","MNO_3"],

'single_items':[['Chips'],['Alcoholic Drinks'],['Biscuits'],['Smoking']],

'single_labels':[[1],[4],[8],[9]]})

I would like to do the below

a) Check whether items of single_items list is present under the items of multi_items list.

b) If yes, then extract the multi_labels and multi_project_id for the corresponding matching item

c) If no item is present/matching, then put NA

So, I tried the below but it doesn't work. I don't know how and where to start.

print(single_df.groupby('single_labels').sum()['single_items'].apply(lambda x: list(set(x))).reset_index())

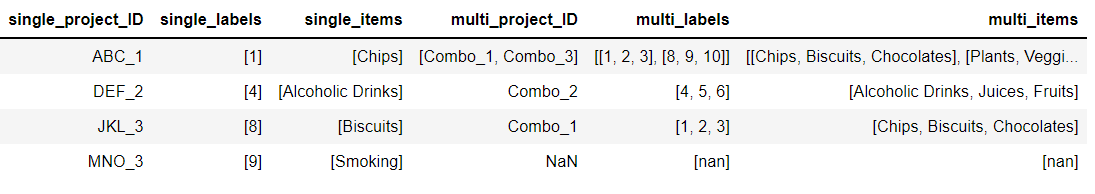

I expect my output to be like as below

CodePudding user response:

Create helper column tmp and use DataFrame.explode for remove lists, then aggregate lists with custom function - only for repeated values (length is greater like 1):

f = lambda x: list(x) if len(x) > 1 else x

df = multi_df.assign(tmp=multi_df['multi_items']).explode('tmp').groupby('tmp').agg(f)

print (df)

multi_project_ID \

tmp

Alcoholic Drinks Combo_2

Biscuits Combo_1

Cars Combo_4

Chips [Combo_1, Combo_3]

Chocolates Combo_1

Fruits Combo_2

Juices Combo_2

Plants Combo_3

Veggies Combo_3

multi_items \

tmp

Alcoholic Drinks [Alcoholic Drinks, Juices, Fruits]

Biscuits [Chips, Biscuits, Chocolates]

Cars [Cars]

Chips [[Chips, Biscuits, Chocolates], [Plants, Veggi...

Chocolates [Chips, Biscuits, Chocolates]

Fruits [Alcoholic Drinks, Juices, Fruits]

Juices [Alcoholic Drinks, Juices, Fruits]

Plants [Plants, Veggies, Chips]

Veggies [Plants, Veggies, Chips]

multi_labels

tmp

Alcoholic Drinks [4, 5, 6]

Biscuits [1, 2, 3]

Cars [11]

Chips [[1, 2, 3], [8, 9, 10]]

Chocolates [1, 2, 3]

Fruits [4, 5, 6]

Juices [4, 5, 6]

Plants [8, 9, 10]

Veggies [8, 9, 10]

Then convert single_items to scalars by str[0] and assign to index, so possible use DataFrame.join:

out = single_df.set_index(single_df['single_items'].str[0]).join(df).reset_index(drop=True)

print (out)

single_project_ID single_items single_labels multi_project_ID \

0 ABC_1 [Chips] [1] [Combo_1, Combo_3]

1 DEF_2 [Alcoholic Drinks] [4] Combo_2

2 JKL_3 [Biscuits] [8] Combo_1

3 MNO_3 [Smoking] [9] NaN

multi_items multi_labels

0 [[Chips, Biscuits, Chocolates], [Plants, Veggi... [[1, 2, 3], [8, 9, 10]]

1 [Alcoholic Drinks, Juices, Fruits] [4, 5, 6]

2 [Chips, Biscuits, Chocolates] [1, 2, 3]

3 NaN NaN