I've created a Kubernetes cluster in Azure using the following Terraform:

# Locals block for hardcoded names
locals {
  backend_address_pool_name      = "appgateway-beap"
  frontend_port_name             = "appgateway-feport"
  frontend_ip_configuration_name = "appgateway-feip"
  http_setting_name              = "appgateway-be-htst"
  listener_name                  = "appgateway-httplstn"
  request_routing_rule_name      = "appgateway-rqrt"
  app_gateway_subnet_name        = "appgateway-subnet"
}

data "azurerm_subnet" "aks-subnet" {
  name                 = "aks-subnet"
  virtual_network_name = "np-dat-spoke-vnet"
  resource_group_name  = "ipz12-dat-np-connect-rg"
}

data "azurerm_subnet" "appgateway-subnet" {
  name                 = "appgateway-subnet"
  virtual_network_name = "np-dat-spoke-vnet"
  resource_group_name  = "ipz12-dat-np-connect-rg"
}

# Create Resource Group for Kubernetes Cluster
module "resource_group_kubernetes_cluster" {
  source = "./modules/resource_group"
  count  = var.enable_kubernetes == true ? 1 : 0

  #name_override = "rg-aks-spoke-dev-westus3-001"
  app_or_service_name = "aks"                 # var.app_or_service_name
  subscription_type   = var.subscription_type # "spoke"
  environment         = var.environment       # "dev"
  location            = var.location          # "westus3"
  instance_number     = var.instance_number   # "001"
  tags                = var.tags
}

resource "azurerm_user_assigned_identity" "identity_uami" {
  location            = var.location
  name                = "appgw-uami"
  resource_group_name = module.resource_group_kubernetes_cluster[0].name
}

# Application Gateway Public Ip
resource "azurerm_public_ip" "test" {
  name                = "publicIp1"
  location            = var.location
  resource_group_name = module.resource_group_kubernetes_cluster[0].name
  allocation_method   = "Static"
  sku                 = "Standard"
}

resource "azurerm_application_gateway" "network" {
  name                = var.app_gateway_name
  resource_group_name = module.resource_group_kubernetes_cluster[0].name
  location            = var.location

  sku {
    name     = var.app_gateway_sku
    tier     = "Standard_v2"
    capacity = 2
  }

  identity {
    type = "UserAssigned"
    identity_ids = [
      azurerm_user_assigned_identity.identity_uami.id
    ]
  }

  gateway_ip_configuration {
    name      = "appGatewayIpConfig"
    subnet_id = data.azurerm_subnet.appgateway-subnet.id
  }

  frontend_port {
    name = local.frontend_port_name
    port = 80
  }

  frontend_port {
    name = "httpsPort"
    port = 443
  }

  frontend_ip_configuration {
    name                 = local.frontend_ip_configuration_name
    public_ip_address_id = azurerm_public_ip.test.id
  }

  backend_address_pool {
    name = local.backend_address_pool_name
  }

  backend_http_settings {
    name                  = local.http_setting_name
    cookie_based_affinity = "Disabled"
    port                  = 80
    protocol              = "Http"
    request_timeout       = 1
  }

  http_listener {
    name                           = local.listener_name
    frontend_ip_configuration_name = local.frontend_ip_configuration_name
    frontend_port_name             = local.frontend_port_name
    protocol                       = "Http"
  }

  request_routing_rule {
    name                       = local.request_routing_rule_name
    rule_type                  = "Basic"
    http_listener_name         = local.listener_name
    backend_address_pool_name  = local.backend_address_pool_name
    backend_http_settings_name = local.http_setting_name
    priority                   = 100
  }

  tags = var.tags

  depends_on = [azurerm_public_ip.test]

  lifecycle {
    ignore_changes = [
      backend_address_pool,
      backend_http_settings,
      request_routing_rule,
      http_listener,
      probe,
      tags,
      frontend_port
    ]
  }
}

# Create the Azure Kubernetes Service (AKS) Cluster
resource "azurerm_kubernetes_cluster" "kubernetes_cluster" {
  count                   = var.enable_kubernetes == true ? 1 : 0
  name                    = "aks-prjx-${var.subscription_type}-${var.environment}-${var.location}-${var.instance_number}"
  location                = var.location
  resource_group_name     = module.resource_group_kubernetes_cluster[0].name # "rg-aks-spoke-dev-westus3-001"
  dns_prefix              = "dns-aks-prjx-${var.subscription_type}-${var.environment}-${var.location}-${var.instance_number}" #"dns-prjxcluster"
  private_cluster_enabled = false
  local_account_disabled  = true

  default_node_pool {
    name           = "npprjx${var.subscription_type}" #"prjxsyspool" # NOTE: "name must start with a lowercase letter, have max length of 12, and only have characters a-z0-9."
    vm_size        = "Standard_B8ms"
    vnet_subnet_id = data.azurerm_subnet.aks-subnet.id
    # zones = ["1", "2", "3"]
    enable_auto_scaling = true
    max_count           = 3
    min_count           = 1
    # node_count = 3
    os_disk_size_gb        = 50
    type                   = "VirtualMachineScaleSets"
    enable_node_public_ip  = false
    enable_host_encryption = false

    node_labels = {
      "node_pool_type" = "npprjx${var.subscription_type}"
      "node_pool_os"   = "linux"
      "environment"    = "${var.environment}"
      "app"            = "prjx_${var.subscription_type}_app"
    }

    tags = var.tags
  }

  ingress_application_gateway {
    gateway_id = azurerm_application_gateway.network.id
  }

  # Enable Azure AD integration with Azure RBAC on the cluster
  azure_active_directory_role_based_access_control {
    managed                = true
    admin_group_object_ids = var.active_directory_role_based_access_control_admin_group_object_ids
    azure_rbac_enabled     = true #false
  }

  network_profile {
    network_plugin = "azure"
    network_policy = "azure"
    outbound_type  = "userDefinedRouting"
  }

  identity {
    type = "SystemAssigned"
  }

  oms_agent {
    log_analytics_workspace_id = module.log_analytics_workspace[0].id
  }

  timeouts {
    create = "20m"
    delete = "20m"
  }

  depends_on = [
    azurerm_application_gateway.network
  ]
}

I then granted the necessary permissions:

# Get the AKS Agent Pool SystemAssigned Identity
data "azurerm_user_assigned_identity" "aks-identity" {
  name                = "${azurerm_kubernetes_cluster.kubernetes_cluster[0].name}-agentpool"
  resource_group_name = "MC_${module.resource_group_kubernetes_cluster[0].name}_aks-prjx-spoke-dev-eastus-001_eastus"
}

# Get the AKS SystemAssigned Identity
data "azuread_service_principal" "aks-sp" {
  display_name = azurerm_kubernetes_cluster.kubernetes_cluster[0].name
}

# Provide ACR Pull permission to AKS SystemAssigned Identity
resource "azurerm_role_assignment" "acrpull_role" {
  scope                            = module.container_registry[0].id
  role_definition_name             = "AcrPull"
  principal_id                     = data.azurerm_user_assigned_identity.aks-identity.principal_id
  skip_service_principal_aad_check = true

  depends_on = [
    data.azurerm_user_assigned_identity.aks-identity
  ]
}

resource "azurerm_role_assignment" "aks_id_network_contributor_subnet" {
  scope                = data.azurerm_subnet.aks-subnet.id
  role_definition_name = "Network Contributor"
  principal_id         = data.azurerm_user_assigned_identity.aks-identity.principal_id

  depends_on = [data.azurerm_user_assigned_identity.aks-identity]
}

resource "azurerm_role_assignment" "akssp_network_contributor_subnet" {
  scope                = data.azurerm_subnet.aks-subnet.id
  role_definition_name = "Network Contributor"
  principal_id         = data.azuread_service_principal.aks-sp.object_id

  depends_on = [data.azuread_service_principal.aks-sp]
}

resource "azurerm_role_assignment" "aks_id_contributor_agw" {
  scope                = data.azurerm_subnet.appgateway-subnet.id
  role_definition_name = "Network Contributor"
  principal_id         = data.azurerm_user_assigned_identity.aks-identity.principal_id

  depends_on = [data.azurerm_user_assigned_identity.aks-identity]
}

resource "azurerm_role_assignment" "akssp_contributor_agw" {
  scope                = data.azurerm_subnet.appgateway-subnet.id
  role_definition_name = "Network Contributor"
  principal_id         = data.azuread_service_principal.aks-sp.object_id

  depends_on = [data.azuread_service_principal.aks-sp]
}

resource "azurerm_role_assignment" "aks_ingressid_contributor_on_agw" {
  scope                            = azurerm_application_gateway.network.id
  role_definition_name             = "Contributor"
  principal_id                     = azurerm_kubernetes_cluster.kubernetes_cluster[0].ingress_application_gateway[0].ingress_application_gateway_identity[0].object_id
  skip_service_principal_aad_check = true

  depends_on = [azurerm_application_gateway.network, azurerm_kubernetes_cluster.kubernetes_cluster]
}

resource "azurerm_role_assignment" "aks_ingressid_contributor_on_uami" {
  scope                            = azurerm_user_assigned_identity.identity_uami.id
  role_definition_name             = "Contributor"
  principal_id                     = azurerm_kubernetes_cluster.kubernetes_cluster[0].ingress_application_gateway[0].ingress_application_gateway_identity[0].object_id
  skip_service_principal_aad_check = true

  depends_on = [azurerm_application_gateway.network, azurerm_kubernetes_cluster.kubernetes_cluster]
}

resource "azurerm_role_assignment" "uami_contributor_on_agw" {
  scope                            = azurerm_application_gateway.network.id
  role_definition_name             = "Contributor"
  principal_id                     = azurerm_user_assigned_identity.identity_uami.principal_id
  skip_service_principal_aad_check = true

  depends_on = [azurerm_application_gateway.network, azurerm_user_assigned_identity.identity_uami]
}

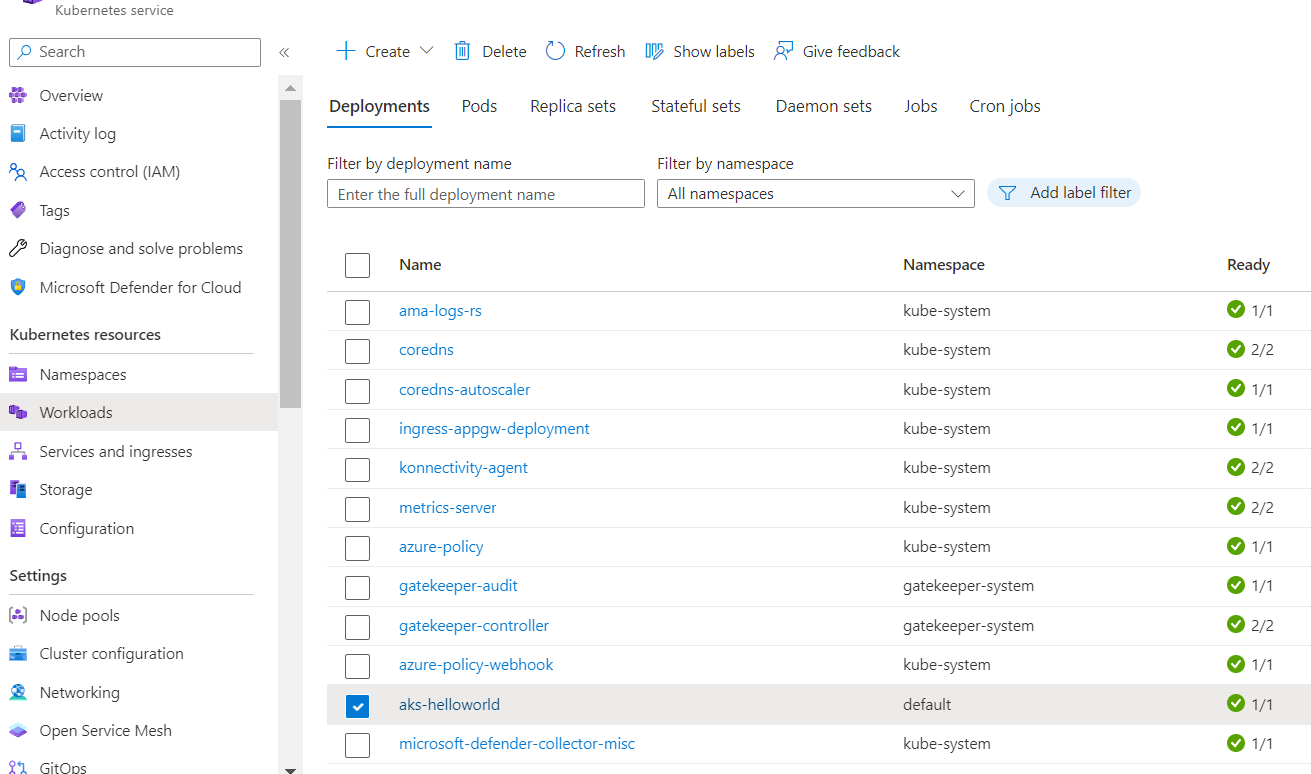

I then deployed the following application:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: aks-helloworld
spec:
  replicas: 1
  selector:
    matchLabels:
      app: aks-helloworld-two
  template:
    metadata:
      labels:
        app: aks-helloworld-two
    spec:
      containers:
        - name: aks-helloworld-two
          image: mcr.microsoft.com/azuredocs/aks-helloworld:v1
          ports:
            - containerPort: 80
          env:
            - name: TITLE
              value: "AKS Ingress Demo"
---
apiVersion: v1
kind: Service
metadata:
  name: aks-helloworld
spec:
  type: LoadBalancer
  ports:
    - port: 80
  selector:
    app: aks-helloworld-two

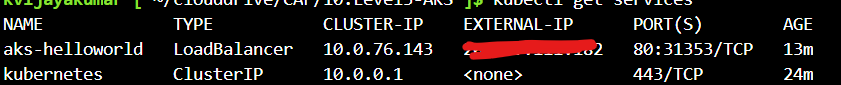

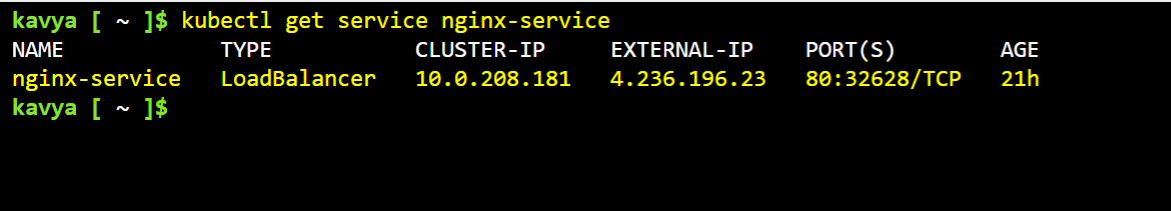

An external IP was assigned:

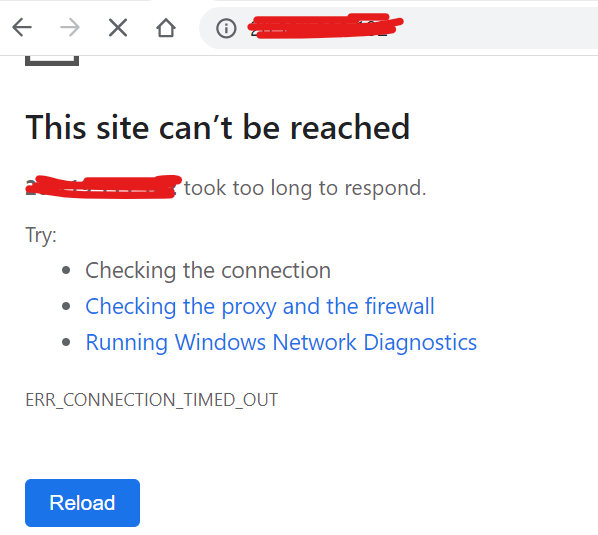

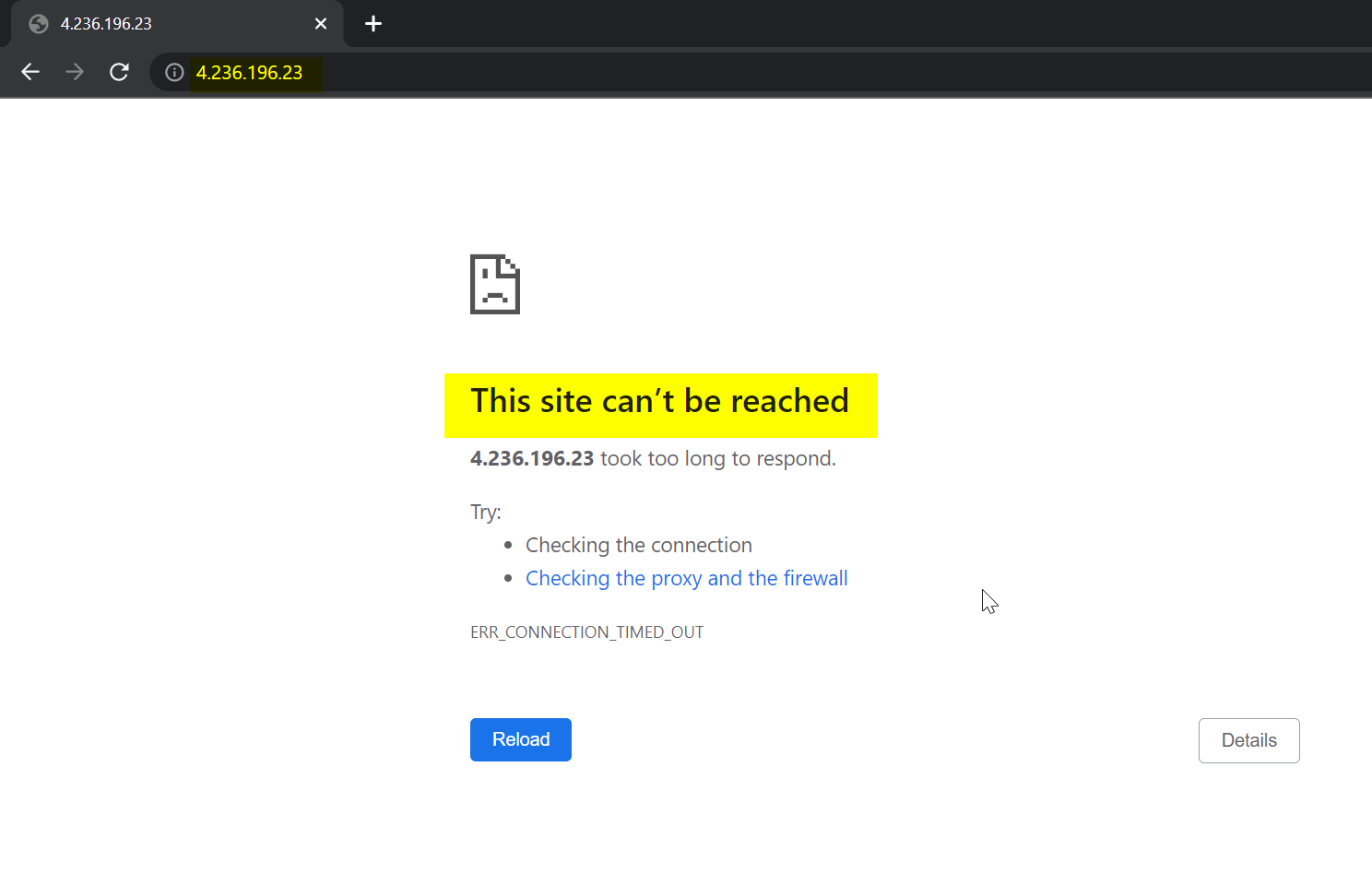

However, I am not able to reach the application on the external IP.

Note: I have not deployed a separate Ingress controller as described in the Microsoft article, because I am not sure it is required.

Answer:

I reproduced this in my environment by creating an AKS cluster with an Application Gateway.

Follow the Stack link to create a Kubernetes Service cluster with Ingress Application Gateway.

If you cannot reach your application on the external load balancer IP after deploying it to Azure Kubernetes Service (AKS), verify the following settings on the AKS cluster.

- Check the status of the load balancer service with the following command:

kubectl get service <your service name>

Make sure that the EXTERNAL-IP field is not in the Pending state.

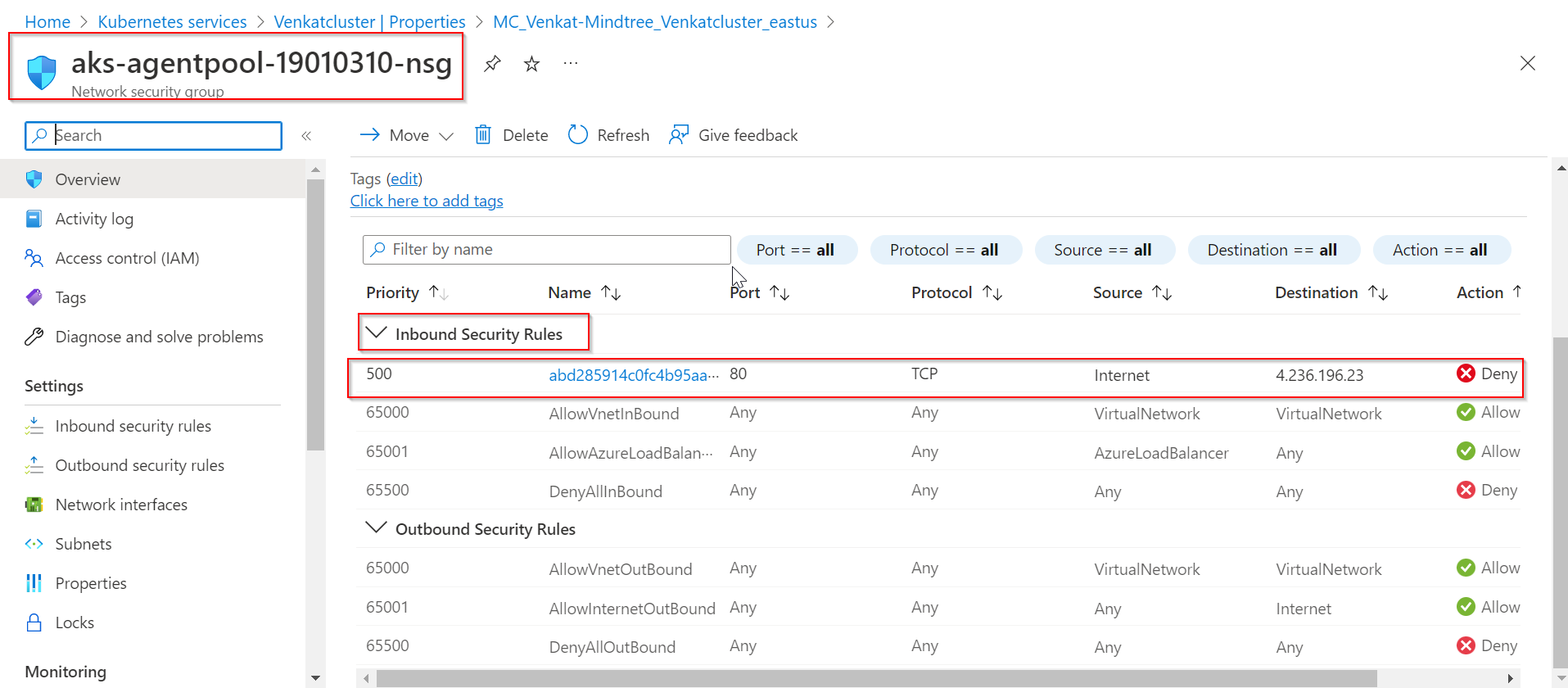
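As a rough sketch of this check in script form, the helper below classifies the value read from the Service. The function name `external_ip_state` is hypothetical, and the service name `aks-helloworld` is taken from the manifest in the question; only the `kubectl` call shown in the comment touches the cluster.

```shell
# Hypothetical helper: classify the external IP value reported for a Service.
# An empty value or "<pending>" means Azure has not provisioned the IP yet.
external_ip_state() {
  case "$1" in
    ""|"<pending>"|"<none>") echo "pending" ;;
    *) echo "assigned" ;;
  esac
}

# Usage against a live cluster (assumes kubectl is already pointed at the AKS cluster):
#   ip=$(kubectl get service aks-helloworld \
#        -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
#   external_ip_state "$ip"
```

If the state is "assigned" but the application is still unreachable, as in the question, the problem is more likely in the network path than in the Service itself.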

- Verify the network security group (NSG) associated with the load balancer. Make sure that it allows inbound traffic on the desired port.

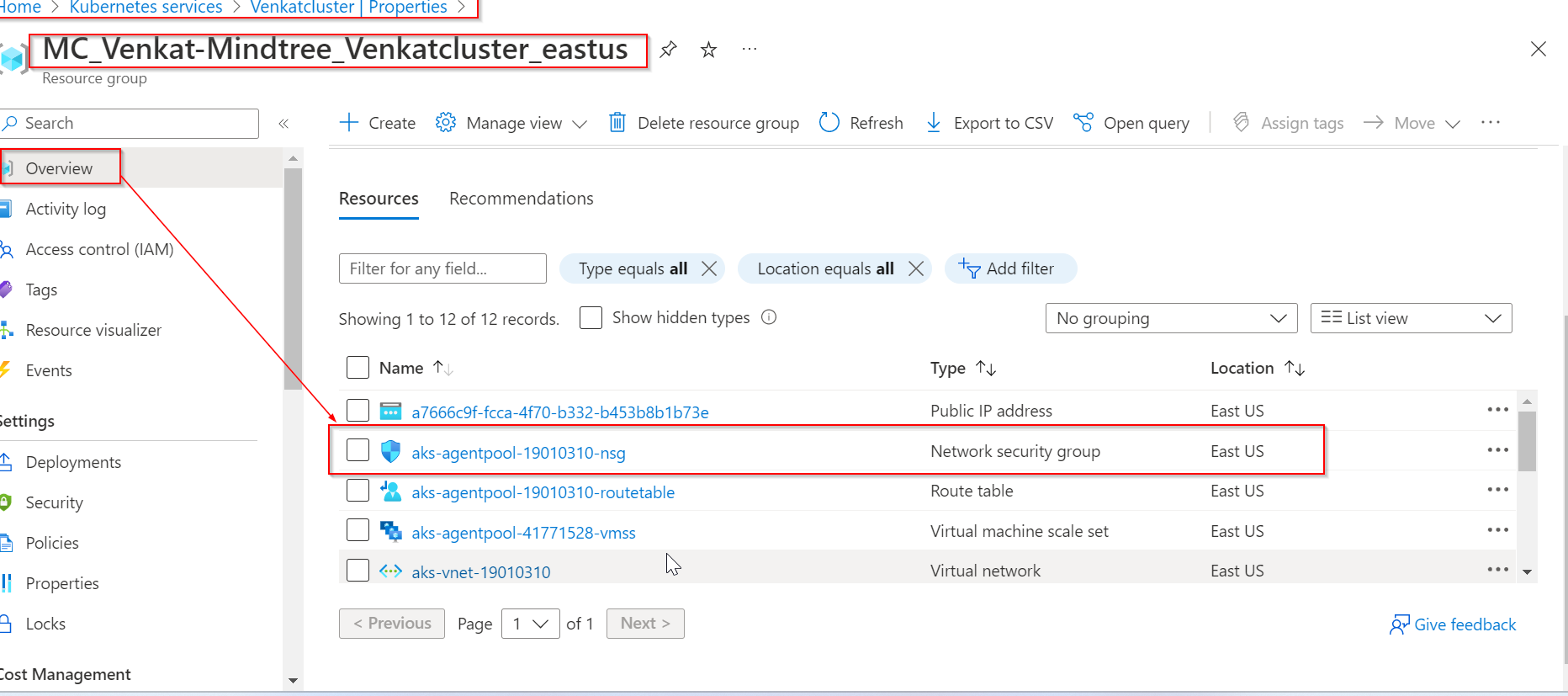

To check the NSG security rules for the AKS cluster, follow these steps:

Go to Azure Portal > Kubernetes services > select your Kubernetes service > Properties > select the resource group under Infrastructure resource group > Overview > select your NSG.

For testing, I disabled the inbound HTTP rule in the Network Security Group and got the same error.

Application status after disabling port 80 in the NSG:

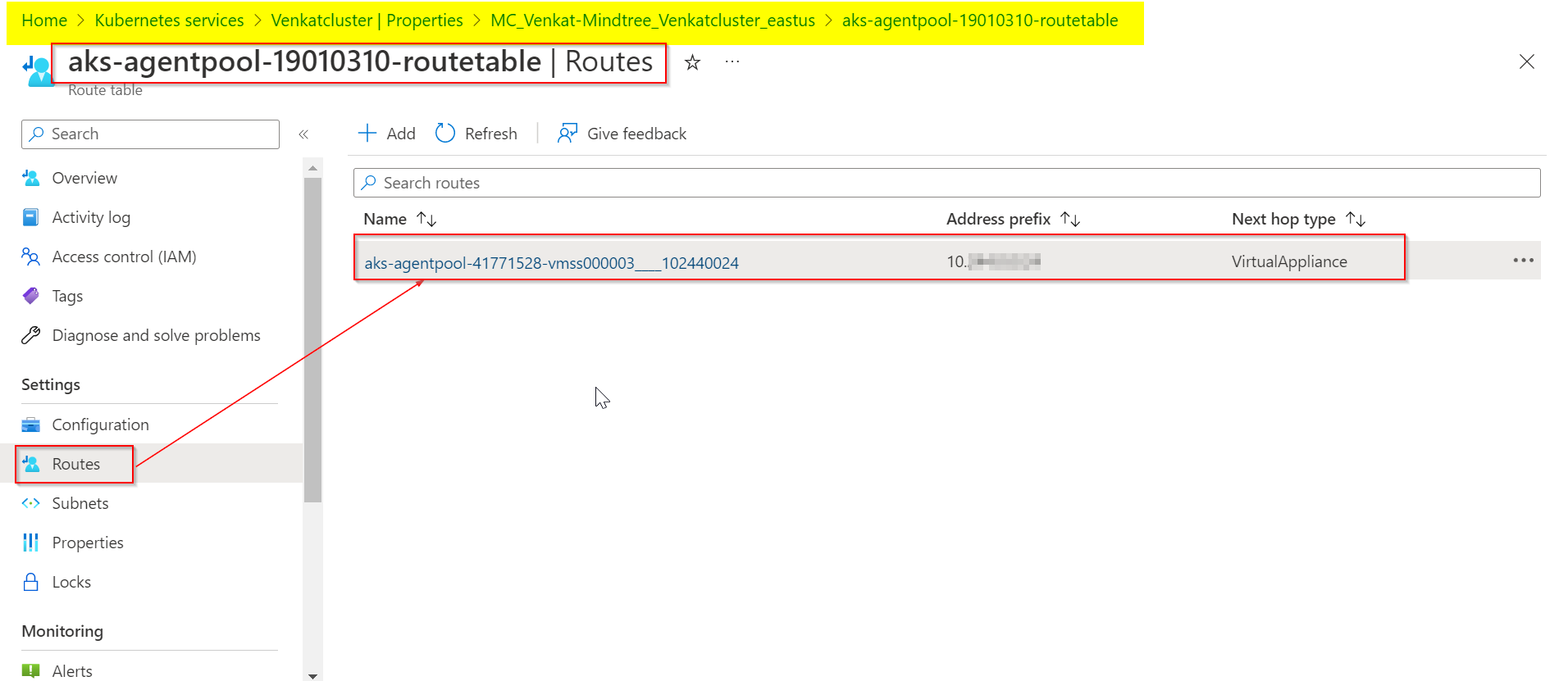
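The same NSG check can be sketched from the command line. The helper `nsg_allows_inbound_port` is hypothetical, and `<resource-group>`, `<cluster-name>`, `<node-resource-group>` and `<nsg-name>` are placeholders, not values from the question. It is a deliberate simplification: it ignores rule priority, Deny rules, port ranges such as `80-90`, and rules that use the plural `destinationPortRanges` field.

```shell
# Hypothetical helper: reads tab-separated lines "access direction destinationPortRange"
# on stdin and succeeds if any inbound Allow rule names the given port or "*".
nsg_allows_inbound_port() {
  port="$1"
  while IFS="$(printf '\t')" read -r access direction range; do
    [ "$direction" = "Inbound" ] || continue
    [ "$access" = "Allow" ] || continue
    case "$range" in
      "*"|"$port") return 0 ;;
    esac
  done
  return 1
}

# Usage (placeholders must be filled in; requires a logged-in Azure CLI):
#   az aks show --resource-group <resource-group> --name <cluster-name> \
#     --query nodeResourceGroup -o tsv
#   az network nsg rule list --resource-group <node-resource-group> --nsg-name <nsg-name> \
#     --query "[].[access, direction, destinationPortRange]" -o tsv \
#     | nsg_allows_inbound_port 80 && echo "port 80 allowed inbound"
```

Because the AKS subnet in the question lives in a separate virtual network resource group, an NSG attached to that subnet may also need the same check.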

- Check the routing rules on your virtual network. Make sure that traffic from the load balancer is forwarded to the cluster nodes and that the return traffic can get back out.
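For the routing check, a small sketch: the question sets `outbound_type = "userDefinedRouting"`, so egress from the nodes follows the route table on the AKS subnet. If the default route points at a virtual appliance or firewall, replies to traffic that arrived through the public load balancer may leave by a different path (asymmetric routing), which is one possible cause worth ruling out. The helper `default_route_next_hop` is hypothetical; the subnet, virtual network and resource group names come from the question, and `<route-table-rg>` and `<route-table-name>` are placeholders.

```shell
# Hypothetical helper: reads tab-separated lines "addressPrefix nextHopType" on stdin
# and prints the next hop type of the default route (0.0.0.0/0), if one exists.
default_route_next_hop() {
  while IFS="$(printf '\t')" read -r prefix hop _; do
    if [ "$prefix" = "0.0.0.0/0" ]; then
      echo "$hop"
      return 0
    fi
  done
  return 1
}

# Usage (requires a logged-in Azure CLI):
#   az network vnet subnet show --resource-group ipz12-dat-np-connect-rg \
#     --vnet-name np-dat-spoke-vnet --name aks-subnet --query routeTable.id -o tsv
#   az network route-table route list --resource-group <route-table-rg> \
#     --route-table-name <route-table-name> \
#     --query "[].[addressPrefix, nextHopType]" -o tsv | default_route_next_hop
```

A result of `VirtualAppliance` suggests that outbound traffic is forced through a firewall, in which case the firewall's rules and the return path for the load balancer IP are worth inspecting.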