I am trying to write a Python program in which the user enters a set of data and the program draws a graph with the line or polynomial that best fits the data.

This is the code:

```python
from matplotlib import pyplot as plt
import numpy as np

x = []
y = []
x_num = 0  # x position of the next data point

while True:
    sequence = int(input("Input 1 number in the sequence, type 9040321 to stop"))

    if sequence == 9040321:  # sentinel value: stop reading and plot
        poly = np.polyfit(x, y, deg=2, rcond=None, full=False, w=None, cov=False)
        plt.plot(poly)
        plt.scatter(x, y, c="blue", label="data")
        plt.legend()
        plt.show()
        break
    else:
        y.append(sequence)
        x.append(x_num)
        x_num += 1
```

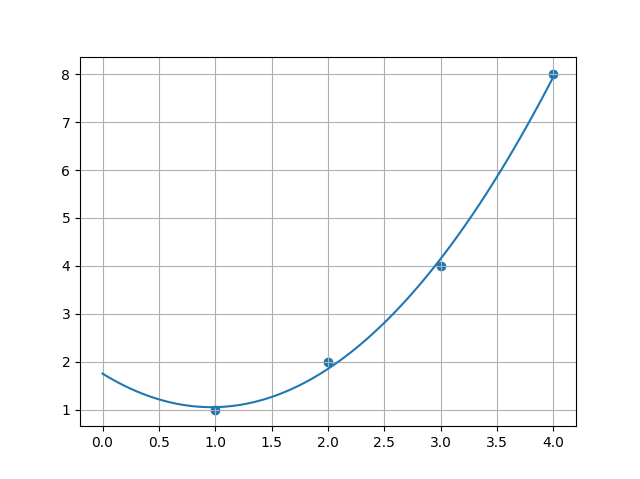

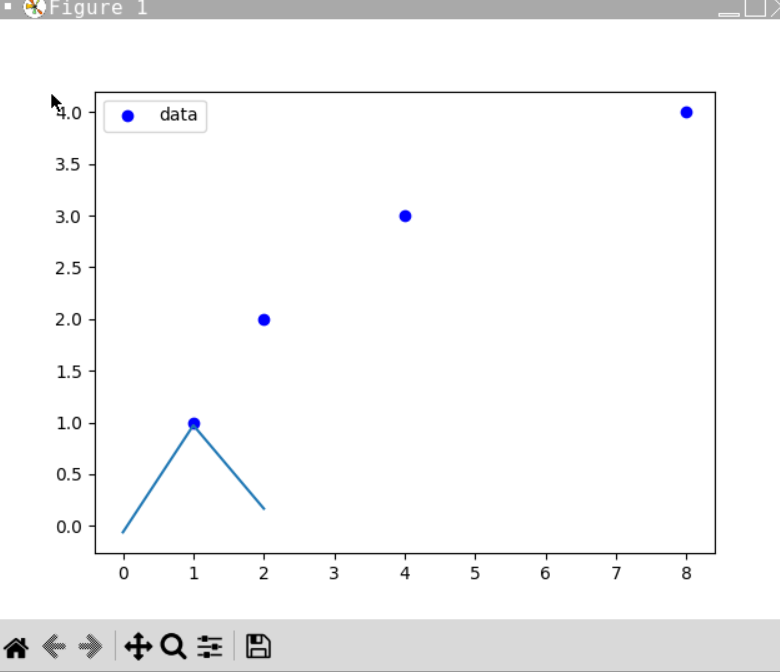

I tested it by entering 1, 2, 4 and 8, one per prompt. Matplotlib plotted the points correctly, but with a degree of 2 the fitted line came out as shown in the images above.

This is clearly not correct, but I am unsure what the problem is. I suspected the degree, but changing it to 3 still does not produce a fit. I am looking for a curve, like y=sqrt(x), that passes through every point or, when that is not possible, the curve that fits best.

Edit: I added `print(poly)`, and for the input above it prints `[0.75 0.05 1.05]`. I do not know what to make of this.

Answer:

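```python
import numpy as np
import matplotlib.pyplot as plt

x = [1, 2, 3, 4]
y = [1, 2, 4, 8]

# polyfit returns the coefficients, highest power first: [a, b, c] for a*x**2 + b*x + c
coeffs = np.polyfit(x, y, 2)
print(coeffs)

# poly1d wraps the coefficients in a callable polynomial object
poly = np.poly1d(coeffs)
print(poly)

# Evaluate the polynomial on a dense grid so it plots as a smooth curve
x_cont = np.linspace(0, 4, 81)
y_cont = poly(x_cont)

plt.scatter(x, y)
plt.plot(x_cont, y_cont)
plt.grid(True)
plt.show()
```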

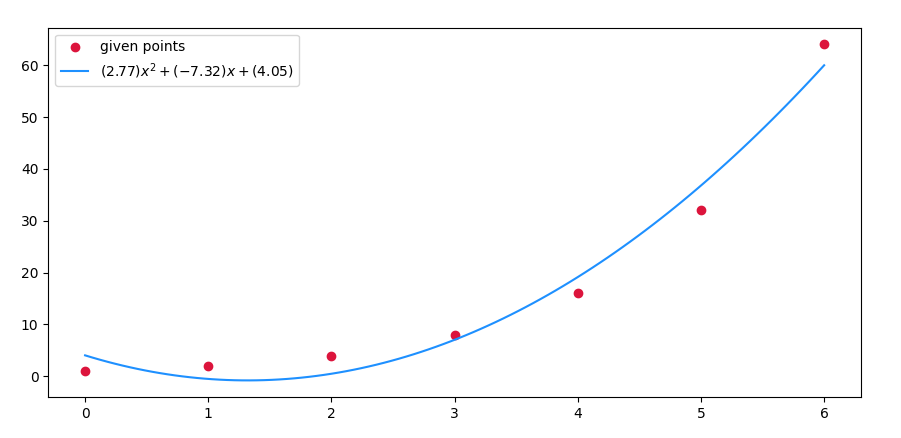

Running this code produces the graph above and prints the following in the terminal:

```
[ 0.75 -1.45  1.75]
      2
0.75 x - 1.45 x + 1.75
```
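These coefficients differ from the `[0.75 0.05 1.05]` in the question only because the question's loop numbers the points 0, 1, 2, 3, while this example uses x = 1 to 4; both describe the same parabola shifted by one unit along x. A small numpy check of that, which also shows what `plt.plot` receives when it is handed the coefficient array directly (the variable names are just for illustration):

```python
import numpy as np

y = [1, 2, 4, 8]
x_question = [0, 1, 2, 3]  # what the question's loop builds (x_num starts at 0)
x_answer = [1, 2, 3, 4]    # what the answer's example uses

c_question = np.polyfit(x_question, y, 2)  # highest power first: [a, b, c]
c_answer = np.polyfit(x_answer, y, 2)

print(c_question)  # the coefficients reported in the question's edit
print(c_answer)    # the coefficients printed by the answer's example

# Same parabola, shifted by one unit: p_question(t) == p_answer(t + 1)
t = np.linspace(-2, 5, 15)
print(np.allclose(np.polyval(c_question, t), np.polyval(c_answer, t + 1)))

# plt.plot(c_question) treats the coefficients as y values and uses their
# indices as x, so it draws a line through these three points:
for i, coef in enumerate(c_question):
    print(f"({i}, {coef:.2f})")
```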

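It seems to me that you had the wrong expectation about what `polyfit` returns. It does not return points on the fitted curve; it returns the polynomial's coefficients, highest power first, so your `[0.75 0.05 1.05]` means y = 0.75x² + 0.05x + 1.05. `plt.plot(poly)` therefore plots those three numbers as y values against their indices 0, 1 and 2, which explains the odd line in your graph. To draw the fit, turn the coefficients into a polynomial with `np.poly1d`, evaluate it on many x values, and plot the result, as in the code above.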
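On the wish for a curve that passes through every point: with n points at distinct x values, a polynomial of degree n - 1 passes through all of them exactly, so degree 3 should hit all four points once the curve itself is plotted. A minimal numpy sketch, assuming the question's x values 0 to 3 (the names `cubic`, `x_dense` and `y_dense` are illustrative):

```python
import numpy as np

x = np.array([0, 1, 2, 3])
y = np.array([1, 2, 4, 8])

# With n distinct x values, degree n - 1 leaves no residual: the curve
# passes through every point. Lower degrees give a least-squares compromise.
for deg in range(1, len(x)):
    coeffs = np.polyfit(x, y, deg)
    fitted = np.polyval(coeffs, x)
    max_err = np.max(np.abs(fitted - y))
    print(f"degree {deg}: max error at the data points = {max_err:.3f}")

# Values to hand to plt.plot: evaluate the cubic on a dense grid
# instead of plotting the coefficient array itself.
cubic = np.poly1d(np.polyfit(x, y, len(x) - 1))
x_dense = np.linspace(x.min(), x.max(), 100)
y_dense = cubic(x_dense)
```

Forcing an exact fit with a high degree can make the curve swing between the points, so for noisy data a lower degree is often the better choice.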
