I've spent quite a few days trying to configure a cluster on AKS, jumping between parts of the docs, various questions here on SO, and articles on Medium, and I keep failing at it.

The goal is to get a static IP with a DNS name that I can use to connect my apps to the server deployed on AKS.

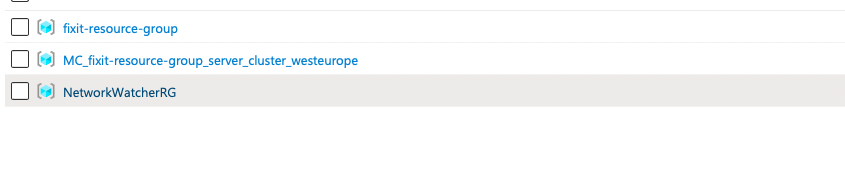

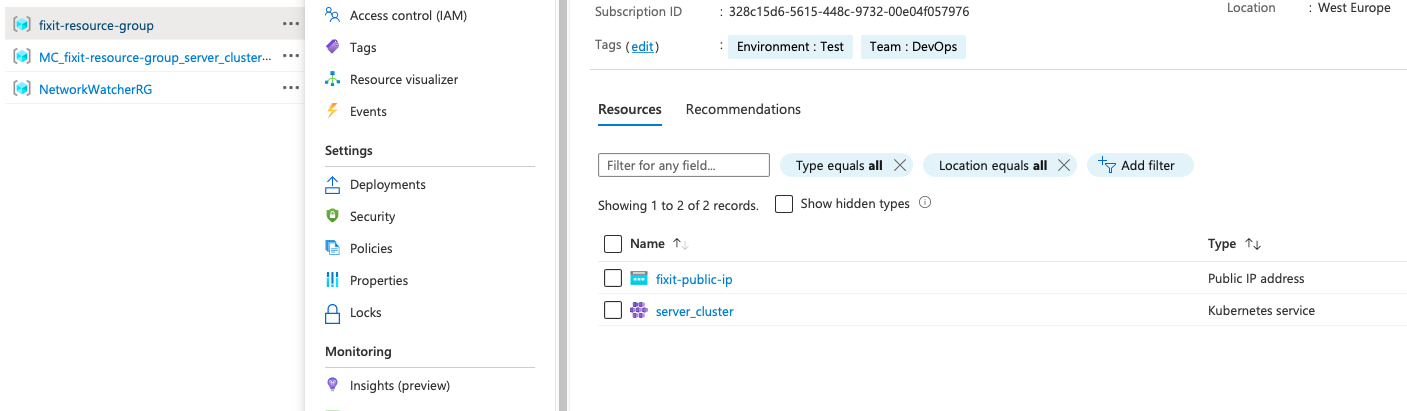

I have created the infrastructure via Terraform. It consists of a resource group in which I created a Public IP and the AKS cluster. So far so good.

I first tried the ingress controller that gets installed when you set http_application_routing_enabled = true on cluster creation, which the docs discourage for production: https://learn.microsoft.com/en-us/azure/aks/http-application-routing. Now I'm trying the recommended way, installing the ingress-nginx controller via Helm: https://learn.microsoft.com/en-us/azure/aks/ingress-basic?tabs=azure-cli.

In Terraform I'm installing it all like this:

resource group and cluster

    resource "azurerm_resource_group" "resource_group" {
      name     = var.resource_group_name
      location = var.location

      tags = {
        Environment = "Test"
        Team        = "DevOps"
      }
    }

    resource "azurerm_kubernetes_cluster" "server_cluster" {
      name                = "server_cluster"
      location            = azurerm_resource_group.resource_group.location
      resource_group_name = azurerm_resource_group.resource_group.name
      dns_prefix          = "fixit"
      kubernetes_version  = var.kubernetes_version
      # sku_tier = "Paid"

      default_node_pool {
        name       = "default"
        node_count = 1
        min_count  = 1
        max_count  = 3
        # vm_size = "standard_b2s_v5"
        # vm_size = "standard_e2bs_v5"
        vm_size                = "standard_b4ms"
        type                   = "VirtualMachineScaleSets"
        enable_auto_scaling    = true
        enable_host_encryption = false
        # os_disk_size_gb = 30
        # enable_node_public_ip = true
      }

      service_principal {
        client_id     = var.sp_client_id
        client_secret = var.sp_client_secret
      }

      tags = {
        Environment = "Production"
      }

      linux_profile {
        admin_username = "azureuser"
        ssh_key {
          key_data = var.ssh_key
        }
      }

      network_profile {
        network_plugin    = "kubenet"
        load_balancer_sku = "standard"
        # load_balancer_sku = "basic"
      }

      # http_application_routing_enabled = true
      http_application_routing_enabled = false
    }

public ip

    resource "azurerm_public_ip" "public-ip" {
      name                = "fixit-public-ip"
      location            = var.location
      resource_group_name = var.resource_group_name
      allocation_method   = "Static"
      domain_name_label   = "fixit"
      sku                 = "Standard"
    }

load balancer

    resource "kubernetes_service" "cluster-ingress" {
      metadata {
        name = "cluster-ingress-svc"
        annotations = {
          "service.beta.kubernetes.io/azure-load-balancer-resource-group" = "fixit-resource-group"
          # Warning SyncLoadBalancerFailed 2m38s (x8 over 12m) service-controller Error syncing load balancer:
          # failed to ensure load balancer: findMatchedPIPByLoadBalancerIP: cannot find public IP with IP address 52.157.90.236
          # in resource group MC_fixit-resource-group_server_cluster_westeurope
          # "service.beta.kubernetes.io/azure-load-balancer-resource-group" = "MC_fixit-resource-group_server_cluster_westeurope"
          # kubernetes.io/ingress.class: addon-http-application-routing
        }
      }

      spec {
        # type = "Ingress"
        type             = "LoadBalancer"
        load_balancer_ip = var.public_ip_address

        selector = {
          name = "cluster-ingress-svc"
        }

        port {
          name        = "cluster-port"
          protocol    = "TCP"
          port        = 3000
          target_port = "80"
        }
      }
    }

ingress controller

    resource "helm_release" "nginx" {
      name       = "ingress-nginx"
      repository = "https://kubernetes.github.io/ingress-nginx"
      chart      = "ingress-nginx"
      namespace  = "default"

      set {
        name  = "rbac.create"
        value = "false"
      }

      set {
        name  = "controller.service.externalTrafficPolicy"
        value = "Local"
      }

      set {
        name  = "controller.service.loadBalancerIP"
        value = var.public_ip_address
      }

      set {
        name  = "controller.service.annotations.service.beta.kubernetes.io/azure-load-balancer-internal"
        value = "true"
      }

      # --set controller.service.annotations."service\.beta\.kubernetes\.io/azure-load-balancer-health-probe-request-path"=/healthz
      set {
        name  = "controller.service.annotations.service\\.beta\\.kubernetes\\.io/azure-load-balancer-health-probe-request-path"
        value = "/healthz"
      }
    }

but the installation fails with this message from terraform

    Warning: Helm release "ingress-nginx" was created but has a failed status. Use the `helm` command to investigate the error, correct it, then run Terraform again.
    │
    │ with module.ingress_controller.helm_release.nginx,
    │ on modules/ingress_controller/controller.tf line 2, in resource "helm_release" "nginx":
    │ 2: resource "helm_release" "nginx" {
    │
    ╵
    ╷
    │ Error: timed out waiting for the condition
    │
    │ with module.ingress_controller.helm_release.nginx,
    │ on modules/ingress_controller/controller.tf line 2, in resource "helm_release" "nginx":
    │ 2: resource "helm_release" "nginx" {

the controller print out

    vincenzocalia@vincenzos-MacBook-Air helm_charts % kubectl describe svc ingress-nginx-controller
    Name:                     ingress-nginx-controller
    Namespace:                default
    Labels:                   app.kubernetes.io/component=controller
                              app.kubernetes.io/instance=ingress-nginx
                              app.kubernetes.io/managed-by=Helm
                              app.kubernetes.io/name=ingress-nginx
                              app.kubernetes.io/part-of=ingress-nginx
                              app.kubernetes.io/version=1.5.1
                              helm.sh/chart=ingress-nginx-4.4.2
    Annotations:              meta.helm.sh/release-name: ingress-nginx
                              meta.helm.sh/release-namespace: default
                              service: map[beta:map[kubernetes:map[io/azure-load-balancer-internal:true]]]
                              service.beta.kubernetes.io/azure-load-balancer-health-probe-request-path: /healthz
    Selector:                 app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx
    Type:                     LoadBalancer
    IP Family Policy:         SingleStack
    IP Families:              IPv4
    IP:                       10.0.173.243
    IPs:                      10.0.173.243
    IP:                       52.157.90.236
    Port:                     http 80/TCP
    TargetPort:               http/TCP
    NodePort:                 http 31709/TCP
    Endpoints:
    Port:                     https 443/TCP
    TargetPort:               https/TCP
    NodePort:                 https 30045/TCP
    Endpoints:
    Session Affinity:         None
    External Traffic Policy:  Local
    HealthCheck NodePort:     32500
    Events:
      Type     Reason                  Age                 From                Message
      ----     ------                  ----                ----                -------
      Normal   EnsuringLoadBalancer    32s (x5 over 108s)  service-controller  Ensuring load balancer
      Warning  SyncLoadBalancerFailed  31s (x5 over 107s)  service-controller  Error syncing load balancer: failed to ensure load balancer: findMatchedPIPByLoadBalancerIP: cannot find public IP with IP address 52.157.90.236 in resource group mc_fixit-resource-group_server_cluster_westeurope

    vincenzocalia@vincenzos-MacBook-Air helm_charts % az aks show --resource-group fixit-resource-group --name server_cluster --query nodeResourceGroup -o tsv
    MC_fixit-resource-group_server_cluster_westeurope

Why is it looking in the MC_fixit-resource-group_server_cluster_westeurope resource group and not in the fixit-resource-group I created for the Cluster, Public IP and Load Balancer?

If I change the controller's loadBalancerIP to the public IP that lives in MC_fixit-resource-group_server_cluster_westeurope, Terraform still outputs the same error, but the controller service now shows that it is correctly assigned to the IP and the load balancer:

    set {
      name  = "controller.service.loadBalancerIP"
      value = "20.73.192.77" #var.public_ip_address
    }

    vincenzocalia@vincenzos-MacBook-Air helm_charts % kubectl get svc
    NAME                                 TYPE           CLUSTER-IP     EXTERNAL-IP     PORT(S)                      AGE
    cluster-ingress-svc                  LoadBalancer   10.0.110.114   52.157.90.236   3000:31863/TCP               104m
    ingress-nginx-controller             LoadBalancer   10.0.106.201   20.73.192.77    80:30714/TCP,443:32737/TCP   41m
    ingress-nginx-controller-admission   ClusterIP      10.0.23.188    <none>          443/TCP                      41m
    kubernetes                           ClusterIP      10.0.0.1       <none>          443/TCP                      122m

    vincenzocalia@vincenzos-MacBook-Air helm_charts % kubectl describe svc ingress-nginx-controller
    Name:                     ingress-nginx-controller
    Namespace:                default
    Labels:                   app.kubernetes.io/component=controller
                              app.kubernetes.io/instance=ingress-nginx
                              app.kubernetes.io/managed-by=Helm
                              app.kubernetes.io/name=ingress-nginx
                              app.kubernetes.io/part-of=ingress-nginx
                              app.kubernetes.io/version=1.5.1
                              helm.sh/chart=ingress-nginx-4.4.2
    Annotations:              meta.helm.sh/release-name: ingress-nginx
                              meta.helm.sh/release-namespace: default
                              service: map[beta:map[kubernetes:map[io/azure-load-balancer-internal:true]]]
                              service.beta.kubernetes.io/azure-load-balancer-health-probe-request-path: /healthz
    Selector:                 app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx
    Type:                     LoadBalancer
    IP Family Policy:         SingleStack
    IP Families:              IPv4
    IP:                       10.0.106.201
    IPs:                      10.0.106.201
    IP:                       20.73.192.77
    LoadBalancer Ingress:     20.73.192.77
    Port:                     http 80/TCP
    TargetPort:               http/TCP
    NodePort:                 http 30714/TCP
    Endpoints:
    Port:                     https 443/TCP
    TargetPort:               https/TCP
    NodePort:                 https 32737/TCP
    Endpoints:
    Session Affinity:         None
    External Traffic Policy:  Local
    HealthCheck NodePort:     32538
    Events:
      Type    Reason                Age                From                Message
      ----    ------                ----               ----                -------
      Normal  EnsuringLoadBalancer  39m (x2 over 41m)  service-controller  Ensuring load balancer
      Normal  EnsuredLoadBalancer   39m (x2 over 41m)  service-controller  Ensured load balancer
    vincenzocalia@vincenzos-MacBook-Air helm_charts %

Reading here https://learn.microsoft.com/en-us/azure/aks/faq#why-are-two-resource-groups-created-with-aks

To enable this architecture, each AKS deployment spans two resource groups: You create the first resource group. This group contains only the Kubernetes service resource. The AKS resource provider automatically creates the second resource group during deployment. An example of the second resource group is MC_myResourceGroup_myAKSCluster_eastus. For information on how to specify the name of this second resource group, see the next section. The second resource group, known as the node resource group, contains all of the infrastructure resources associated with the cluster. These resources include the Kubernetes node VMs, virtual networking, and storage. By default, the node resource group has a name like MC_myResourceGroup_myAKSCluster_eastus. AKS automatically deletes the node resource group whenever the cluster is deleted, so it should only be used for resources that share the cluster's lifecycle.

Should I pass the first or the second group depending on what kind of resource I'm creating?

E.g. does kubernetes_service need the 1st resource group, while azurerm_public_ip needs the 2nd?

What am I missing here? Please explain it like I'm 5 years old, because that's how I'm feeling right now.

Many thanks

CodePudding user response:

Finally found what the problem was.

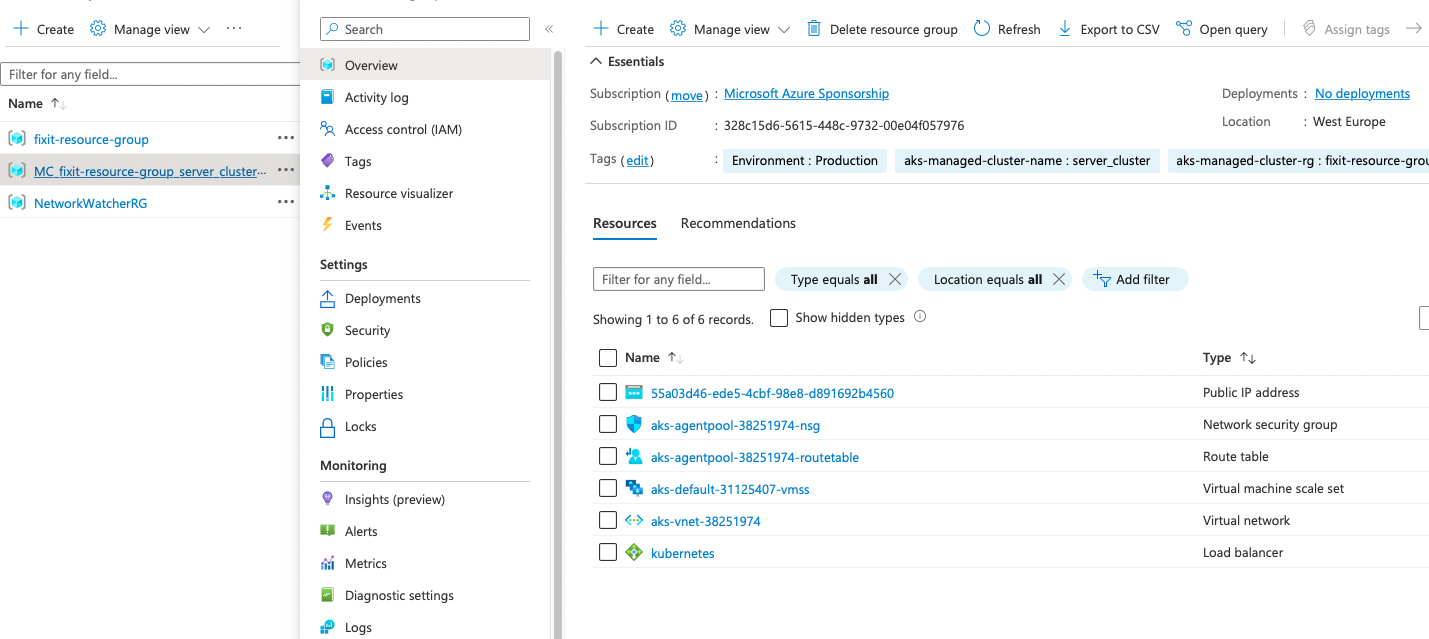

Indeed, the Public IP needs to be created in the node resource group. The ingress controller, with its loadBalancerIP set to the Public IP address, looks for that address in the node resource group, so if you create the IP in the cluster's own resource group it fails with the error I was getting.

The node resource group name is assigned at cluster creation, e.g. MC_myResourceGroup_myAKSCluster_eastus, but you can name it as you wish using the parameter node_resource_group = var.node_resource_group_name.

Also, the Public IP sku ("Standard", which has to be specified, or "Basic", the default) and the cluster's load_balancer_sku ("standard" or "basic", best specified explicitly) have to match.
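One way to keep the two SKUs from drifting apart is to derive both from a single value. This is only a sketch: the variable `lb_sku` and the two locals are hypothetical names, not part of the configuration in this answer.

```hcl
# Hypothetical variable: the single source of truth for both SKUs.
variable "lb_sku" {
  type    = string
  default = "basic"

  validation {
    condition     = contains(["basic", "standard"], var.lb_sku)
    error_message = "lb_sku must be \"basic\" or \"standard\"."
  }
}

locals {
  # Lowercase form, intended for network_profile.load_balancer_sku.
  cluster_lb_sku = var.lb_sku

  # Capitalized form ("Basic" / "Standard"), intended for azurerm_public_ip.sku.
  public_ip_sku = title(var.lb_sku)
}
```

The cluster would then use `load_balancer_sku = local.cluster_lb_sku` inside `network_profile`, and the Public IP would use `sku = local.public_ip_sku`.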

I also moved the Public IP into the cluster module so that it can depend on the cluster. Otherwise it gets created before the cluster and fails, because the node resource group has not been created yet. I couldn't set that dependency correctly in the main.tf file.
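A possible variation, assuming the Public IP sits in the same module as the cluster: read the node resource group name from the cluster resource instead of from the variable. The azurerm provider exports `node_resource_group` as an attribute of `azurerm_kubernetes_cluster`, so the reference alone gives Terraform the ordering. The resource name `public_ip_in_node_rg` below is made up for illustration.

```hcl
# Sketch only: assumes azurerm_kubernetes_cluster.server_cluster is defined in this module.
resource "azurerm_public_ip" "public_ip_in_node_rg" { # hypothetical resource name
  name     = "fixit-public-ip"
  location = azurerm_kubernetes_cluster.server_cluster.location

  # Referencing the cluster's exported attribute creates an implicit dependency:
  # the cluster (and with it the node resource group) is created before this IP.
  resource_group_name = azurerm_kubernetes_cluster.server_cluster.node_resource_group

  allocation_method = "Static"
  domain_name_label = "fixit"
}
```

With this form an explicit `depends_on` on the cluster should not be needed, since the attribute reference already expresses the dependency.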

So the working configuration is now:

main

    terraform {
      required_version = ">=1.1.0"

      required_providers {
        azurerm = {
          source  = "hashicorp/azurerm"
          version = "~> 3.0.2"
        }
      }
    }

    provider "azurerm" {
      features {
        resource_group {
          prevent_deletion_if_contains_resources = false
        }
      }

      subscription_id = var.azure_subscription_id
      tenant_id       = var.azure_subscription_tenant_id
      client_id       = var.service_principal_appid
      client_secret   = var.service_principal_password
    }

    provider "kubernetes" {
      host                   = module.cluster.host
      client_certificate     = base64decode(module.cluster.client_certificate)
      client_key             = base64decode(module.cluster.client_key)
      cluster_ca_certificate = base64decode(module.cluster.cluster_ca_certificate)
    }

    provider "helm" {
      kubernetes {
        host                   = module.cluster.host
        client_certificate     = base64decode(module.cluster.client_certificate)
        client_key             = base64decode(module.cluster.client_key)
        cluster_ca_certificate = base64decode(module.cluster.cluster_ca_certificate)
      }
    }

    module "cluster" {
      source                   = "./modules/cluster"
      location                 = var.location
      vm_size                  = var.vm_size
      resource_group_name      = var.resource_group_name
      node_resource_group_name = var.node_resource_group_name
      kubernetes_version       = var.kubernetes_version
      ssh_key                  = var.ssh_key
      sp_client_id             = var.service_principal_appid
      sp_client_secret         = var.service_principal_password
    }

    module "ingress-controller" {
      source            = "./modules/ingress-controller"
      public_ip_address = module.cluster.public_ip_address

      depends_on = [
        module.cluster.public_ip_address
      ]
    }

cluster

    resource "azurerm_resource_group" "resource_group" {
      name     = var.resource_group_name
      location = var.location

      tags = {
        Environment = "test"
        Team        = "DevOps"
      }
    }

    resource "azurerm_kubernetes_cluster" "server_cluster" {
      name = "server_cluster"

      ### choose the resource group to use for the cluster
      location            = azurerm_resource_group.resource_group.location
      resource_group_name = azurerm_resource_group.resource_group.name

      ### decide the name of the cluster "node" resource group; if unset it is named automatically
      node_resource_group = var.node_resource_group_name

      dns_prefix         = "fixit"
      kubernetes_version = var.kubernetes_version
      # sku_tier = "Paid"

      default_node_pool {
        name                   = "default"
        node_count             = 1
        min_count              = 1
        max_count              = 3
        vm_size                = var.vm_size
        type                   = "VirtualMachineScaleSets"
        enable_auto_scaling    = true
        enable_host_encryption = false
        # os_disk_size_gb = 30
      }

      service_principal {
        client_id     = var.sp_client_id
        client_secret = var.sp_client_secret
      }

      tags = {
        Environment = "Production"
      }

      linux_profile {
        admin_username = "azureuser"
        ssh_key {
          key_data = var.ssh_key
        }
      }

      network_profile {
        network_plugin    = "kubenet"
        load_balancer_sku = "basic"
      }

      http_application_routing_enabled = false

      depends_on = [
        azurerm_resource_group.resource_group
      ]
    }

    resource "azurerm_public_ip" "public-ip" {
      name     = "fixit-public-ip"
      location = var.location
      # resource_group_name = var.resource_group_name
      resource_group_name = var.node_resource_group_name
      allocation_method   = "Static"
      domain_name_label   = "fixit"
      # sku = "Standard"

      depends_on = [
        azurerm_kubernetes_cluster.server_cluster
      ]
    }
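The root module above reads `module.cluster.host`, `module.cluster.client_certificate`, `module.cluster.client_key`, `module.cluster.cluster_ca_certificate` and `module.cluster.public_ip_address`, but the module's outputs are not shown. A plausible sketch of them, assuming they are taken from the cluster's `kube_config` block and from the Public IP resource (the `public_ip_fqdn` output is an extra, hypothetical one exposing the DNS name):

```hcl
# Sketch of the cluster module's outputs; not taken from the original post.
output "host" {
  value     = azurerm_kubernetes_cluster.server_cluster.kube_config[0].host
  sensitive = true # kube_config is marked sensitive by the provider
}

output "client_certificate" {
  value     = azurerm_kubernetes_cluster.server_cluster.kube_config[0].client_certificate
  sensitive = true
}

output "client_key" {
  value     = azurerm_kubernetes_cluster.server_cluster.kube_config[0].client_key
  sensitive = true
}

output "cluster_ca_certificate" {
  value     = azurerm_kubernetes_cluster.server_cluster.kube_config[0].cluster_ca_certificate
  sensitive = true
}

output "public_ip_address" {
  value = azurerm_public_ip.public-ip.ip_address
}

# Hypothetical extra output: the DNS name derived from domain_name_label.
output "public_ip_fqdn" {
  value = azurerm_public_ip.public-ip.fqdn
}
```

The certificate values in `kube_config` are base64 encoded, which matches the `base64decode(...)` calls in the provider blocks.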

ingress controller

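    resource "helm_release" "nginx" {
      name       = "ingress-nginx"
      repository = "ingress-nginx"
      chart      = "ingress-nginx/ingress-nginx"
      namespace  = "default"

      set {
        name  = "controller.service.externalTrafficPolicy"
        value = "Local"
      }

      # NOTE: the dots in this annotation key are not escaped, so Helm parses it as
      # nested keys (the "service: map[beta:...]" annotation in the describe output)
      # and the internal load balancer annotation is not actually applied.
      set {
        name  = "controller.service.annotations.service.beta.kubernetes.io/azure-load-balancer-internal"
        value = "true"
      }

      set {
        name  = "controller.service.loadBalancerIP"
        value = var.public_ip_address
      }

      # Dots inside the annotation key are escaped so Helm treats it as a single key.
      set {
        name  = "controller.service.annotations.service\\.beta\\.kubernetes\\.io/azure-load-balancer-health-probe-request-path"
        value = "/healthz"
      }
    }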
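Annotation keys contain dots, and `set` treats unescaped dots as path separators. An alternative that avoids escaping altogether is to pass the chart values as YAML through the `values` argument of `helm_release`. This is a sketch meant to replace the `set` blocks rather than sit next to them. The resource name `nginx_via_values` is made up, `var.public_ip_address` is assumed to be declared in the module as above, and the internal load balancer annotation is left out on the assumption that a public IP is wanted.

```hcl
# Sketch: same release, with values passed as YAML instead of set blocks.
resource "helm_release" "nginx_via_values" { # hypothetical resource name
  name       = "ingress-nginx"
  repository = "https://kubernetes.github.io/ingress-nginx"
  chart      = "ingress-nginx"
  namespace  = "default"

  values = [
    yamlencode({
      controller = {
        service = {
          externalTrafficPolicy = "Local"
          loadBalancerIP        = var.public_ip_address
          annotations = {
            # A quoted map key, so the dots need no escaping here.
            "service.beta.kubernetes.io/azure-load-balancer-health-probe-request-path" = "/healthz"
          }
        }
      }
    })
  ]
}
```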

ingress service

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: ingress-service
      # namespace: default
      annotations:
        nginx.ingress.kubernetes.io/ssl-redirect: "false"
        nginx.ingress.kubernetes.io/use-regex: "true"
        nginx.ingress.kubernetes.io/rewrite-target: /$2$3$4
    spec:
      ingressClassName: nginx
      rules:
        # - host: fixit.westeurope.cloudapp.azure.com #dns from Azure PublicIP
        ### Node.js server
        - http:
            paths:
              - path: /(/|$)(.*)
                pathType: Prefix
                backend:
                  service:
                    name: server-clusterip-service
                    port:
                      number: 80
        - http:
            paths:
              - path: /server(/|$)(.*)
                pathType: Prefix
                backend:
                  service:
                    name: server-clusterip-service
                    port:
                      number: 80

...

other services omitted

Hope this can help others having difficulties in getting the setup right. Cheers.