I have a DataFrame; the code is:

from io import StringIO

import pandas as pd

df = """

ValOption RB test contrat

0 SLA 4 3 23

1 AC 5 4 12

2 SLA 5 5 23

3 AC 2 4 39

4 SLA 5 5 26

5 AC 3 4 52

6 SLA 4 3 64

0 SLA 4 3 23

1 AC 5 4 12

2 SLA 5 5 23

3 AC 2 4 39

4 SLA 5 5 26

5 AC 5 4 52

6 SLA 4 3 64

"""

df = pd.read_csv(StringIO(df.strip()), sep=r'\s+')

output:

ValOption RB test contrat

0 SLA 4 3 23

1 AC 5 4 12

2 SLA 5 5 23

3 AC 2 4 39

4 SLA 5 5 26

5 AC 3 4 52

6 SLA 4 3 64

0 SLA 4 3 23

1 AC 5 4 12

2 SLA 5 5 23

3 AC 2 4 39

4 SLA 5 5 26

5 AC 5 4 52

6 SLA 4 3 64

Now I group it and take the first row of each group:

df_u=df.groupby(['RB','test']).first()

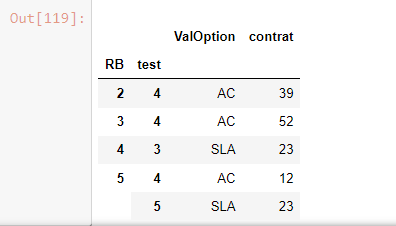

output:

Then I want to apply a function to each row, and for reasons explained below I have to use DataFrame.apply():

def func(row):
    v1 = row['RB'] * 3
    v2 = row['test'] - 1
    return v1 + v2

df_u['new_col']=df_u.apply(lambda row:func(row), axis=1)

Note: in the real business case the function is long and complicated, so I need to use DataFrame.apply().

Then I get an error:

KeyError: ('RB', 'occurred at index (2, 4)')

Answer:

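After the groupby, 'RB' and 'test' are index levels of df_u rather than columns, so row['RB'] raises the KeyError. Call reset_index() to turn them back into columns so the function can access them, and use .values so the result is assigned by position instead of being aligned on the index: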

df_u['new_col'] = df_u.reset_index().apply(func, axis=1).values

print(df_u)

# Output:

        ValOption  contrat  new_col
RB test
2  4           AC       39        9
3  4           AC       52       12
4  3          SLA       23       14
5  4           AC       12       18
   5          SLA       23       19
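As an alternative to reset_index(), the grouping keys can be kept as ordinary columns from the start by passing as_index=False to groupby. The following is only a minimal sketch on a reduced copy of the sample data (the variable name raw and the shortened rows are assumptions made for illustration), not necessarily what the original answer had in mind:

```python
from io import StringIO

import pandas as pd

# Reduced copy of the question's sample data (assumed for illustration).
raw = """ValOption RB test contrat
SLA 4 3 23
AC 5 4 12
SLA 5 5 23
AC 2 4 39
AC 3 4 52
SLA 4 3 64
"""
df = pd.read_csv(StringIO(raw), sep=r"\s+")


def func(row):
    v1 = row["RB"] * 3
    v2 = row["test"] - 1
    return v1 + v2


# as_index=False keeps 'RB' and 'test' as columns instead of index levels,
# so func can read them directly from each row.
df_u = df.groupby(["RB", "test"], as_index=False).first()
df_u["new_col"] = df_u.apply(func, axis=1)
print(df_u)
```

With this variant df_u has a plain integer index, so no .values workaround is needed when assigning the new column.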

Update

How to return the new_col to the original df?

df = df.merge(df.drop_duplicates(['RB', 'test'])
                .assign(new_col=func)[['RB', 'test', 'new_col']],
              on=['RB', 'test'], how='left')

# Output

ValOption RB test contrat new_col

0 SLA 4 3 23 14

1 AC 5 4 12 18

2 SLA 5 5 23 19

3 AC 2 4 39 9

4 SLA 5 5 26 19

5 AC 3 4 52 12

6 SLA 4 3 64 14

7 SLA 4 3 23 14

8 AC 5 4 12 18

9 SLA 5 5 23 19

10 AC 2 4 39 9

11 SLA 5 5 26 19

12 AC 5 4 52 18

13 SLA 4 3 64 14
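If the real function cannot be called on whole columns the way assign(new_col=func) does here, the same idea still works with a row-wise apply restricted to the unique key pairs. The helper below is a hypothetical sketch (the name apply_on_unique and its parameters are not from the original post): it runs the function once per distinct (RB, test) pair and merges the result back onto every row.

```python
import pandas as pd


def apply_on_unique(df, keys, func, new_col):
    """Hypothetical helper: apply func once per unique key combination."""
    # Keep only the key columns and one row per distinct combination.
    unique = df[keys].drop_duplicates()
    # Row-wise apply runs only on the (much smaller) deduplicated frame.
    unique = unique.assign(**{new_col: unique.apply(func, axis=1)})
    # A left merge broadcasts each result back to all matching rows.
    return df.merge(unique, on=keys, how="left")


def func(row):
    return row["RB"] * 3 + (row["test"] - 1)


df = pd.DataFrame({
    "ValOption": ["SLA", "AC", "SLA", "AC", "SLA", "AC", "SLA"],
    "RB": [4, 5, 5, 2, 5, 3, 4],
    "test": [3, 4, 5, 4, 5, 4, 3],
    "contrat": [23, 12, 23, 39, 26, 52, 64],
})
result = apply_on_unique(df, ["RB", "test"], func, "new_col")
print(result)
```

This only pays off when the key columns contain many repeated combinations and the function depends on nothing but those keys.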

Update2

The reason I use drop_duplicates is to save time: the DataFrame has 60k rows, so applying the function to every row takes a long time. If I drop the duplicates first, I only need to apply the function once per unique (RB, test) pair and can then assign the result to every row with the same values.

apply is essentially a Python-level for loop over the rows; use vectorization instead:

df['new_col'] = (df['RB']*3) + (df['test']-1)

Performance

For 140,000 records, the operation above took 361 µs:

%timeit (df1['RB']*3) + (df1['test']-1)

361 µs ± 9.02 µs per loop (mean ± std. dev. of 7 runs, 1000 loops each)

For only 14 records (this is not a typo), the drop_duplicates + apply approach took 935 µs:

%timeit df.drop_duplicates(['RB', 'test']).apply(func, axis=1)

935 µs ± 5.44 µs per loop (mean ± std. dev. of 7 runs, 1000 loops each)
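The %timeit lines above are IPython magics. To repeat the comparison in a plain Python script, something like the following sketch could be used; the random data, the row count n, and the repetition counts are assumptions chosen for illustration, and the absolute timings will differ from the figures quoted above.

```python
import timeit

import numpy as np
import pandas as pd

# Synthetic data (assumed); smaller than the 140,000 rows mentioned above
# so that the row-wise apply finishes quickly.
rng = np.random.default_rng(0)
n = 10_000
df1 = pd.DataFrame({
    "RB": rng.integers(2, 6, size=n),
    "test": rng.integers(3, 6, size=n),
})


def func(row):
    return row["RB"] * 3 + (row["test"] - 1)


vectorized = (df1["RB"] * 3) + (df1["test"] - 1)
row_wise = df1.apply(func, axis=1)

# Both approaches should produce the same values.
same = bool((vectorized == row_wise).all())
print("identical results:", same)

# Average wall-clock time per call for each approach.
t_vec = timeit.timeit(lambda: (df1["RB"] * 3) + (df1["test"] - 1), number=20) / 20
t_apply = timeit.timeit(lambda: df1.apply(func, axis=1), number=3) / 3
print(f"vectorized: {t_vec * 1e6:.0f} µs per call")
print(f"apply:      {t_apply * 1e6:.0f} µs per call")
```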