Is it possible to set up Prometheus/Grafana running on CentOS to monitor several K8s clusters in the lab? The architecture can be similar to the one below, although that is not strictly required. Right now, the Kubernetes clusters we have do not have Prometheus and Grafana installed. The documentation is not very clear about whether an additional component/agent (remote push) is required, or how the central Prometheus and the K8s clusters need to be configured to achieve this. Thanks.

User response:

There are several ways to implement your use case:

- You can use Prometheus federation. This lets a central Prometheus server scrape selected time series from the other Prometheus servers through their `/federate` endpoint.

- You can use the `remote_write` configuration. This lets each Prometheus push its samples to a remote endpoint (for example, the central Prometheus), which you then query. You can also apply relabeling rules (`write_relabel_configs`) to filter or modify samples before they are sent.

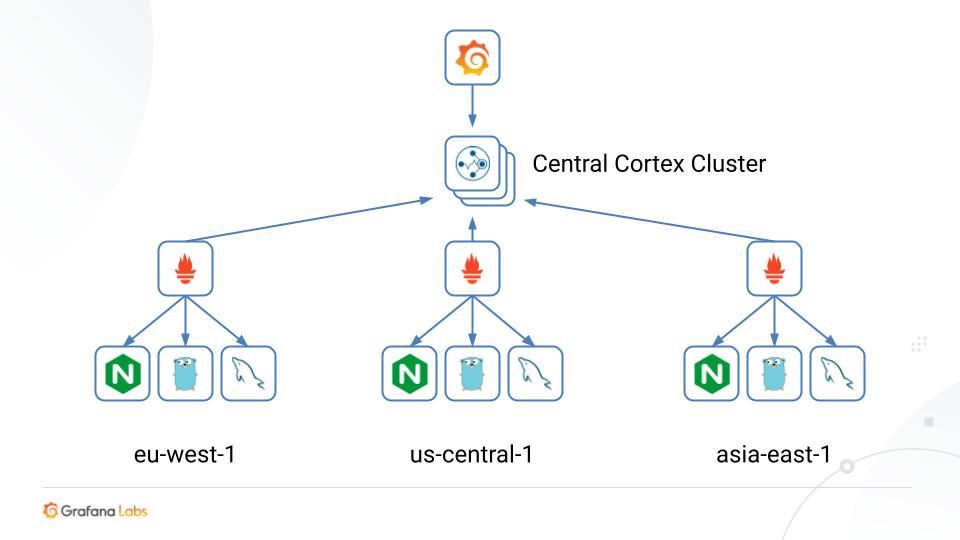

- As @JulioHM said in the comments, you can use another tool such as Thanos or Cortex. These tools do more than write to a remote endpoint: they let you scale your Prometheus servers horizontally, keep long-term storage, etc.
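A minimal sketch of the two Prometheus-native options, assuming hypothetical hostnames (`central-prometheus.lab`, `cluster-a-prometheus.lab`, `cluster-b-prometheus.lab`), a hypothetical `cluster` label, and example match selectors. It builds the config fragments as Python dicts and prints them as JSON; copy the structure into `prometheus.yml` as YAML (or dump it with a YAML library such as PyYAML). For `remote_write`, the central Prometheus must also accept pushes; in recent versions this is enabled with the `--web.enable-remote-write-receiver` flag.

```python
import json

# Hypothetical central endpoint, for illustration only.
CENTRAL_URL = "http://central-prometheus.lab:9090"


def federation_job(cluster_targets, matchers):
    """Scrape job for the CENTRAL Prometheus: pull series from each
    cluster Prometheus via its /federate endpoint."""
    return {
        "job_name": "federate",
        "scrape_interval": "30s",
        # Keep the job/instance labels set by the source Prometheus.
        "honor_labels": True,
        "metrics_path": "/federate",
        # Only series matching at least one selector are returned.
        "params": {"match[]": list(matchers)},
        "static_configs": [{"targets": list(cluster_targets)}],
    }


def remote_write_config(cluster_name, central_url):
    """Config fragment for EACH cluster Prometheus: push samples to the
    central server's remote-write receiver."""
    return {
        "global": {
            # Distinguishes the clusters once their data is merged centrally.
            "external_labels": {"cluster": cluster_name},
        },
        "remote_write": [
            {
                "url": central_url.rstrip("/") + "/api/v1/write",
                # Example relabel rule: drop Go runtime metrics before sending.
                "write_relabel_configs": [
                    {
                        "source_labels": ["__name__"],
                        "regex": "go_.*",
                        "action": "drop",
                    }
                ],
            }
        ],
    }


if __name__ == "__main__":
    central = {
        "scrape_configs": [
            federation_job(
                ["cluster-a-prometheus.lab:9090", "cluster-b-prometheus.lab:9090"],
                ['{job="kubernetes-nodes"}', '{__name__=~"job:.*"}'],
            )
        ]
    }
    print(json.dumps(central, indent=2))
    print(json.dumps(remote_write_config("cluster-a", CENTRAL_URL), indent=2))
```

The direction of the connection is the main design difference when the cluster Prometheus pods only have internal IPs: with federation, the central server has to reach every cluster Prometheus (for example through a NodePort or Ingress), whereas with `remote_write` each cluster Prometheus opens the connection itself, so only the central server must be reachable from inside the clusters.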

User response:

Thanks a lot for all the comments. Federation and remote_write were the options I was considering. The only annoying point is that Prometheus on the K8s servers runs in pods with internal IPs and has no external IP reachable from the outside world. Grafana port forwarding does not look like a very reliable solution. So I am looking for a way to configure the Prometheus "clients" to push their data, or to be reachable from the "master" one. Any ideas? Right now, I see the Grafana IP as "localhost" with port 3000.