I have a date as a string in the format Tue Dec 31 07:14:22 0000 2013, and I need to convert it to a Date object in Scala Spark, because the timestamp field is going to be indexed.

CodePudding user response:

You can do this by splitting the string column on spaces to get an array column, then building a new string column from the year, month and day elements in a format that to_date can parse.
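Before wiring this into Spark, the split-and-reassemble idea can be checked on a single value in plain Scala. The following is only a sketch: the object name `SplitDateSketch` and the method `toLocalDate` are made up for illustration, and it assumes every input has exactly the six space-separated fields shown in the question.

```scala
import java.time.LocalDate
import java.time.format.DateTimeFormatter
import java.util.Locale

// Hypothetical helper, not part of Spark: mirrors the split/concat/to_date steps on one string.
object SplitDateSketch {
  // Same pattern as the Spark answer; Locale.ENGLISH so "Dec" is recognised on any machine.
  private val fmt = DateTimeFormatter.ofPattern("yyyy-MMM-dd", Locale.ENGLISH)

  def toLocalDate(raw: String): LocalDate = {
    // "Tue Dec 31 07:14:22 0000 2013" -> Array(Tue, Dec, 31, 07:14:22, 0000, 2013)
    val parts = raw.split(" ")
    // Index 5 is the year, 1 the month name, 2 the day of month.
    LocalDate.parse(s"${parts(5)}-${parts(1)}-${parts(2)}", fmt)
  }
}
```

Calling `SplitDateSketch.toLocalDate("Tue Dec 31 07:14:22 0000 2013")` returns the `LocalDate` 2013-12-31. The day name, the time of day and the `0000` field are simply ignored, which is also what the Spark version does.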

// Create sample data (in spark-shell or a notebook, where `spark` already exists)

import org.apache.spark.sql.functions._
import spark.implicits._ // needed for toDF and the $"..." column syntax

val df = Seq("Tue Dec 31 07:14:22 0000 2013", "Thu Dec 09 09:14:42 0000 2017").toDF("DateString")

// Split the string column on spaces to get an array column

val df1 = df.withColumn("DateArray", split($"DateString", " "))

// Take the year (index 5), month (index 1) and day (index 2) from the array,
// join them as yyyy-MMM-dd, then parse that string into a date

val df2 = df1
  .withColumn("DateTime", concat($"DateArray".getItem(5), lit("-"), $"DateArray".getItem(1), lit("-"), $"DateArray".getItem(2)))
  .withColumn("Date", to_date($"DateTime", "yyyy-MMM-dd"))

// display is a notebook helper (for example in Databricks); elsewhere use df2.show(false)

display(df2)

You can drop the columns that you don't require as per your output requirement.

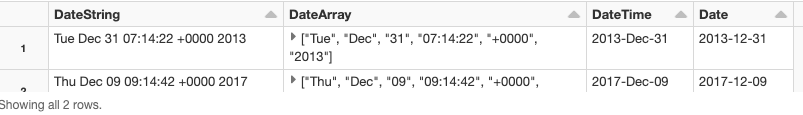

you can see the output as below :
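If the time of day is needed as well as the date, the same split approach can feed `to_timestamp` instead of `to_date`. This is a minimal sketch, not a definitive implementation: the object name `TwitterStyleTimestamp`, the helper `withParsedTimestamp` and the output column name `Timestamp` are assumptions made for illustration. It ignores the `0000` field, so the time is interpreted in the Spark session time zone.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, concat_ws, split, to_timestamp}

object TwitterStyleTimestamp {
  // Hypothetical helper: adds a "Timestamp" column parsed from the "DateString" column.
  def withParsedTimestamp(df: DataFrame): DataFrame = {
    val parts = split(col("DateString"), " ")
    // Rebuild "2013 Dec 31 07:14:22" from year (5), month (1), day (2) and time (3).
    val rebuilt = concat_ws(" ", parts.getItem(5), parts.getItem(1), parts.getItem(2), parts.getItem(3))
    df.withColumn("Timestamp", to_timestamp(rebuilt, "yyyy MMM dd HH:mm:ss"))
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .master("local[1]")
      .appName("timestamp-sketch")
      .getOrCreate()
    import spark.implicits._

    val df = Seq("Tue Dec 31 07:14:22 0000 2013").toDF("DateString")
    withParsedTimestamp(df).show(false)

    spark.stop()
  }
}
```

If the strings are known to be in UTC, setting `spark.sql.session.timeZone` to `UTC` before parsing keeps the stored instant consistent with that assumption.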

CodePudding user response:

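To create an index on the date field (this call comes from the MongoDB Java driver, so it assumes the data is stored in a MongoDB collection):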

collection.createIndex(Indexes.ascending("date_column"))

CodePudding user response:

To convert a string to a date in Spark Scala (example from https://sparkbyexamples.com/spark/convert-string-to-date-format-spark-sql/):

package com.sparkbyexamples.spark.dataframe.functions.datetime

import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.{col, to_date}

object StringToDate extends App {

  val spark: SparkSession = SparkSession.builder()
    .master("local")
    .appName("SparkByExamples.com")
    .getOrCreate()

  spark.sparkContext.setLogLevel("ERROR")

  import spark.sqlContext.implicits._

  // Parse MM-dd-yyyy strings into a date column named to_date
  Seq(("06-03-2009"), ("07-24-2009")).toDF("Date").select(
    col("Date"),
    to_date(col("Date"), "MM-dd-yyyy").as("to_date")
  ).show()
}