I searched for this issue but didn't find a solution that fits my need.

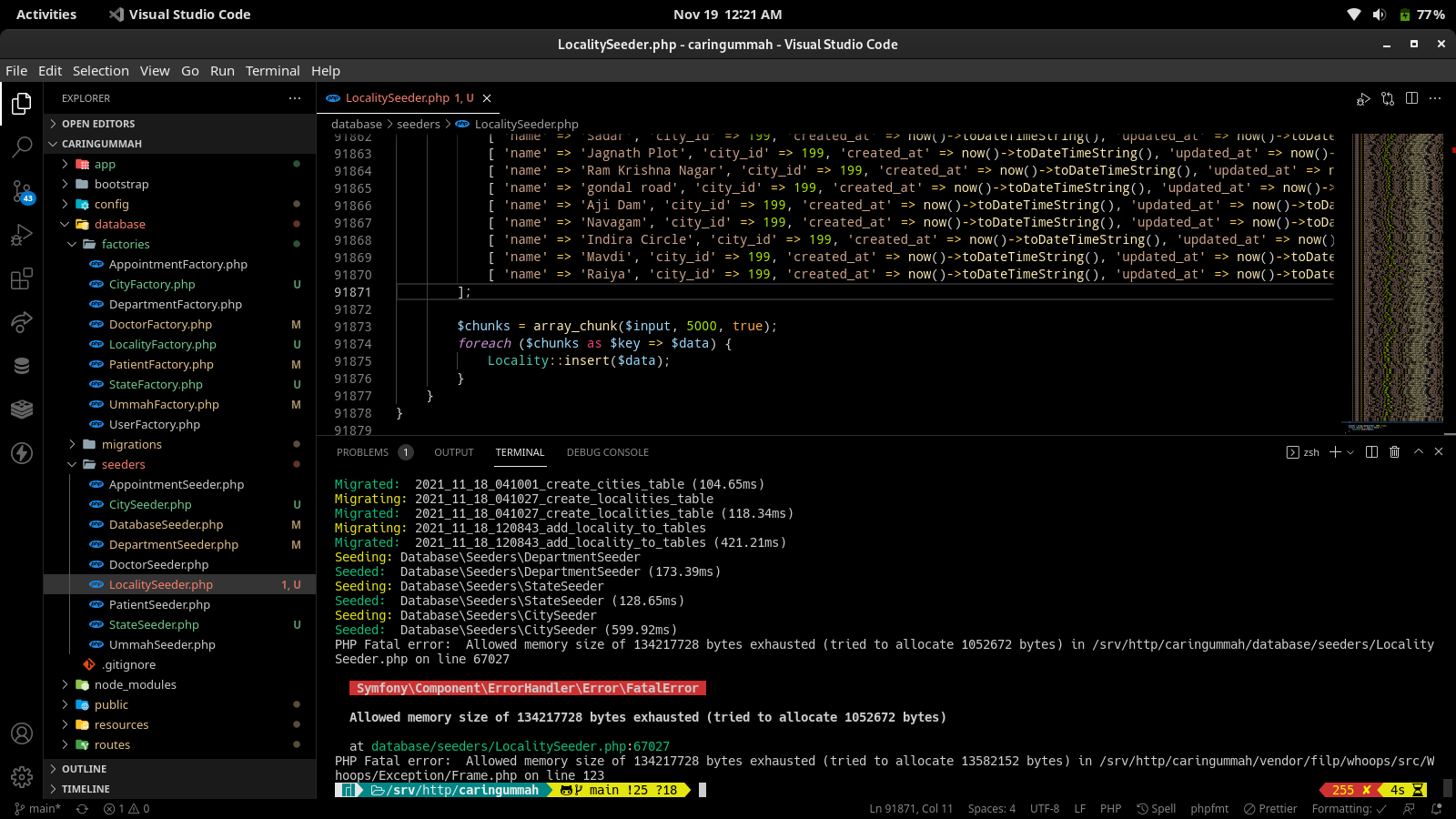

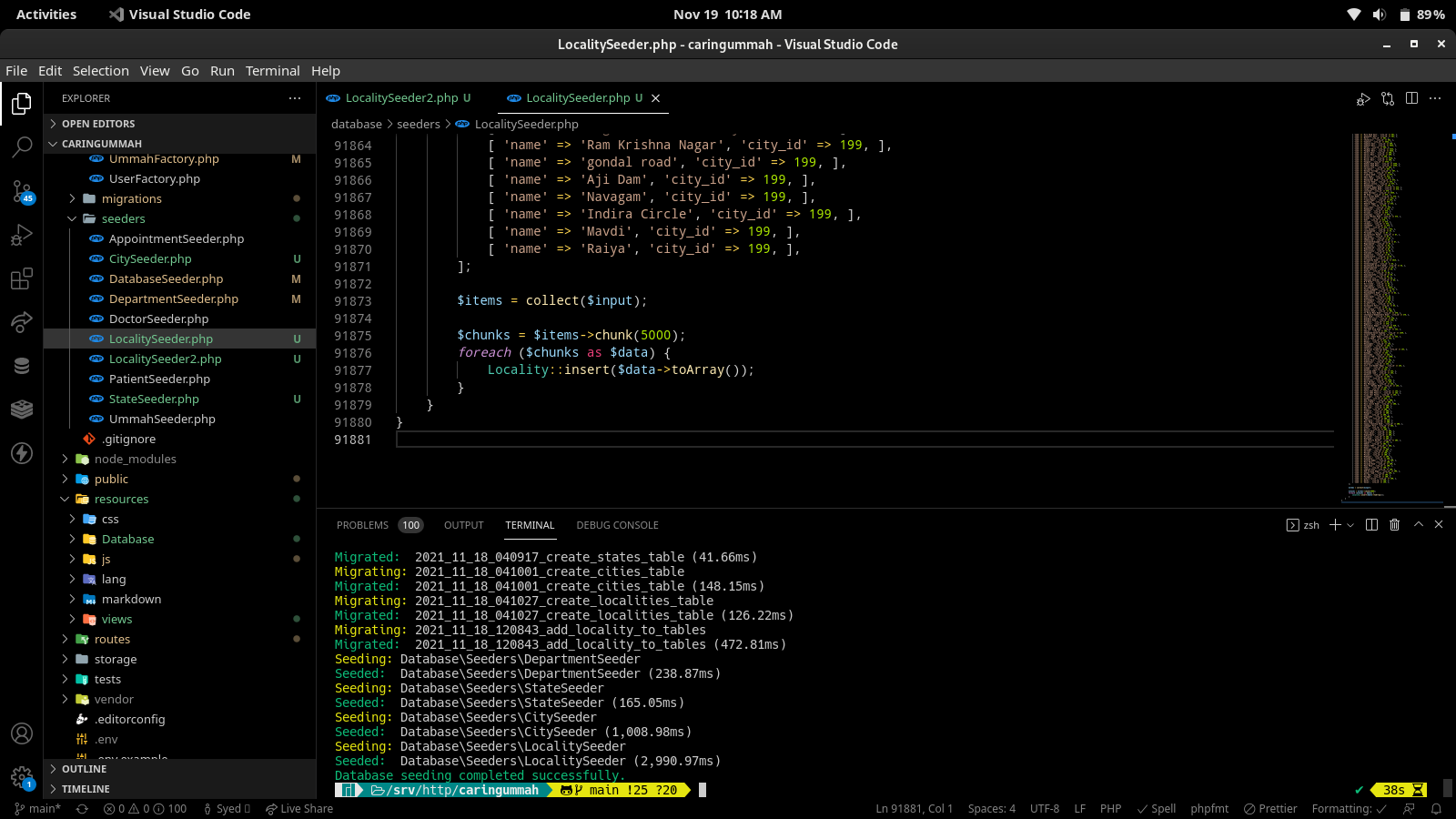

I created tables for State, City and Locality, which hold 37, 7,431 and 91,853 records respectively.

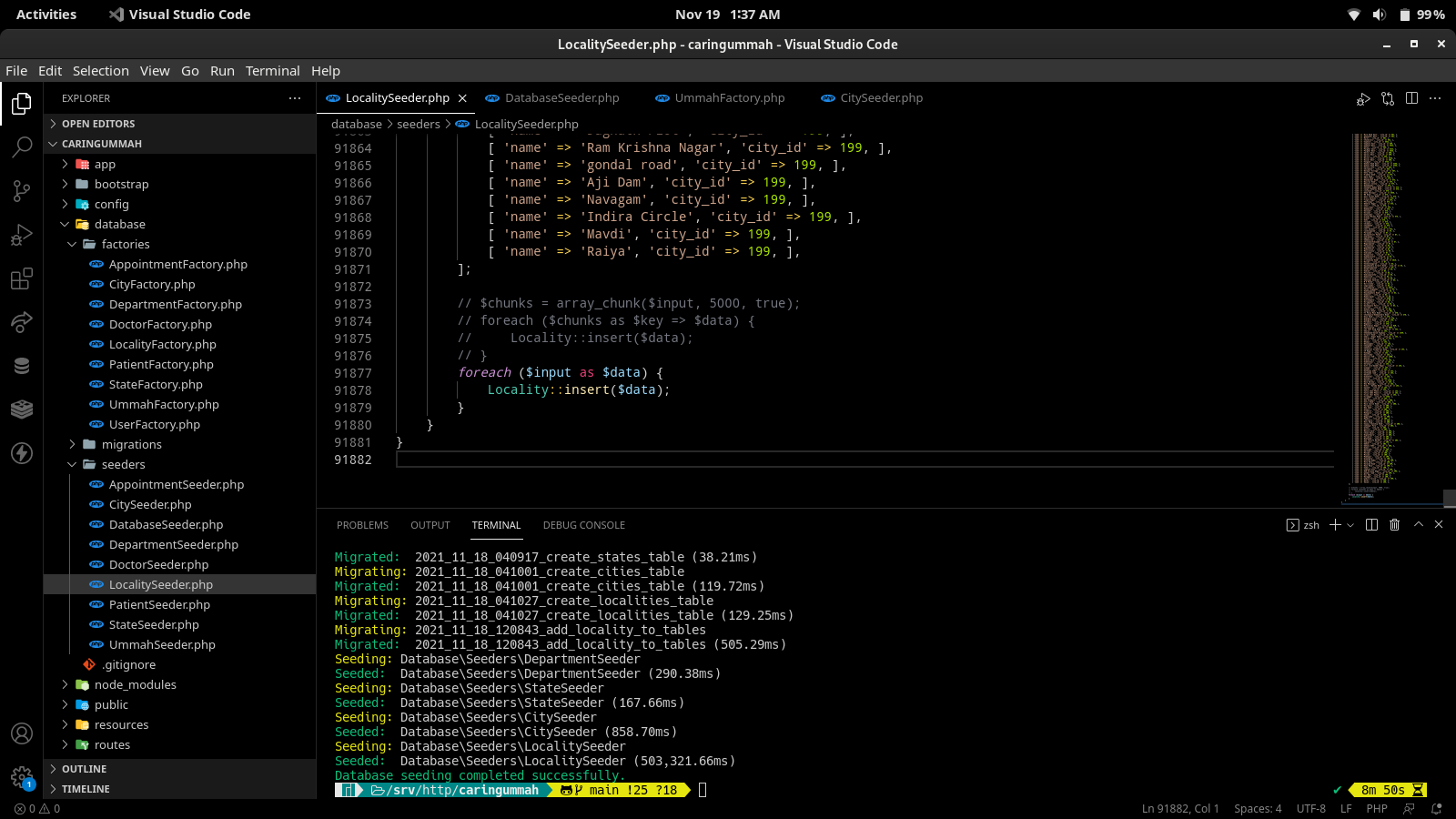

Seeding took much longer when I used create instead of insert in the seeder.

So I changed my code, replacing create with insert. Then I got to know about chunk by

Thanks in advance.

CodePudding user response:

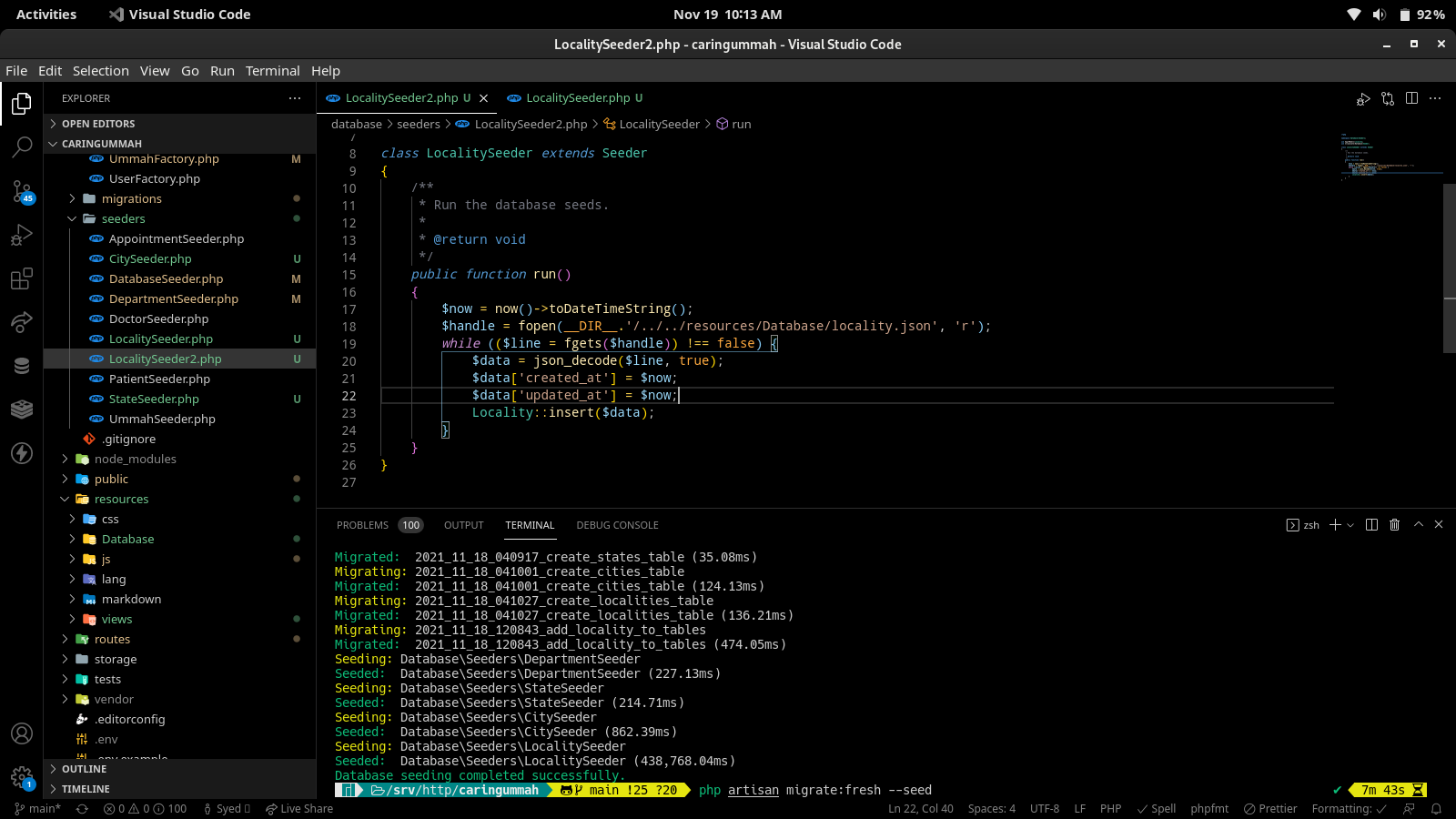

You're trying to load an array of close to 92K entries ($input) and then copy it into a chunked array with array_chunk, so the data is held in memory twice. The memory limit you've configured (or that was set during installation) does not allow that much RAM. One way to fix this, as Daniel pointed out, is to increase your memory limit, but another might be to read the data from a seed file one line at a time. I'll try to illustrate this below, but you may need to adapt it a little.

In localities.json, note that each line is an individual JSON object:

    {"name": "Adilabad", "city_id": 5487}
    [...]
    {"name": "Nalgonda", "city_id": 5476}

Then in the seeder class, you can implement the run method as follows:

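    $now = now()->toDateTimeString();

    // Open the seed file for reading; fopen() requires a mode argument.
    $handle = fopen(__DIR__."/localities.json", "r");

    // Read one JSON object per line so the whole file is never held in memory.
    while (($line = fgets($handle)) !== false) {
        $data = json_decode($line, true);
        $data["created_at"] = $now;
        $data["updated_at"] = $now;
        Locality::insert($data);
    }

    fclose($handle);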

EDIT: With regard to the time this will take: inserting this much data one row at a time will always be slow, but a seeder isn't meant to be run often anyway.
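If the seeding time does matter, one possible middle ground is to keep reading the file line by line but insert the rows in batches, so memory stays bounded while the number of queries drops. The following is only a sketch under assumptions: readJsonLinesInBatches is a hypothetical helper (it is not part of Laravel), and the batch size of 1000 is an arbitrary starting point that would need tuning for your database.

```php
<?php

/**
 * Hypothetical helper (not part of Laravel): yield rows from a JSON-lines
 * file in batches of at most $batchSize, so only one batch is in memory.
 * $extra holds columns to add to every row, e.g. timestamps.
 */
function readJsonLinesInBatches(string $path, int $batchSize, array $extra = []): Generator
{
    $handle = fopen($path, 'r');
    if ($handle === false) {
        throw new RuntimeException("Cannot open {$path}");
    }

    try {
        $batch = [];
        while (($line = fgets($handle)) !== false) {
            $line = trim($line);
            if ($line === '') {
                continue; // skip blank lines
            }
            $row = json_decode($line, true);
            if (!is_array($row)) {
                continue; // skip lines that do not decode to a JSON object
            }
            $batch[] = $row + $extra; // keys already in $row take precedence
            if (count($batch) >= $batchSize) {
                yield $batch;
                $batch = [];
            }
        }
        if ($batch !== []) {
            yield $batch; // final, possibly smaller batch
        }
    } finally {
        fclose($handle);
    }
}

// Assumed usage inside the seeder's run() method, with the Locality model
// from the question (left as comments because it needs a Laravel app):
//
// $now = now()->toDateTimeString();
// $stamps = ['created_at' => $now, 'updated_at' => $now];
// foreach (readJsonLinesInBatches(__DIR__.'/localities.json', 1000, $stamps) as $batch) {
//     Locality::insert($batch); // one multi-row INSERT per batch
// }
```

Passing an array of rows to insert issues a single multi-row statement, so roughly 92K rows at 1000 per batch would mean about 92 queries instead of one per row. Whether that is fast enough depends on your setup, so it is worth measuring.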

CodePudding user response:

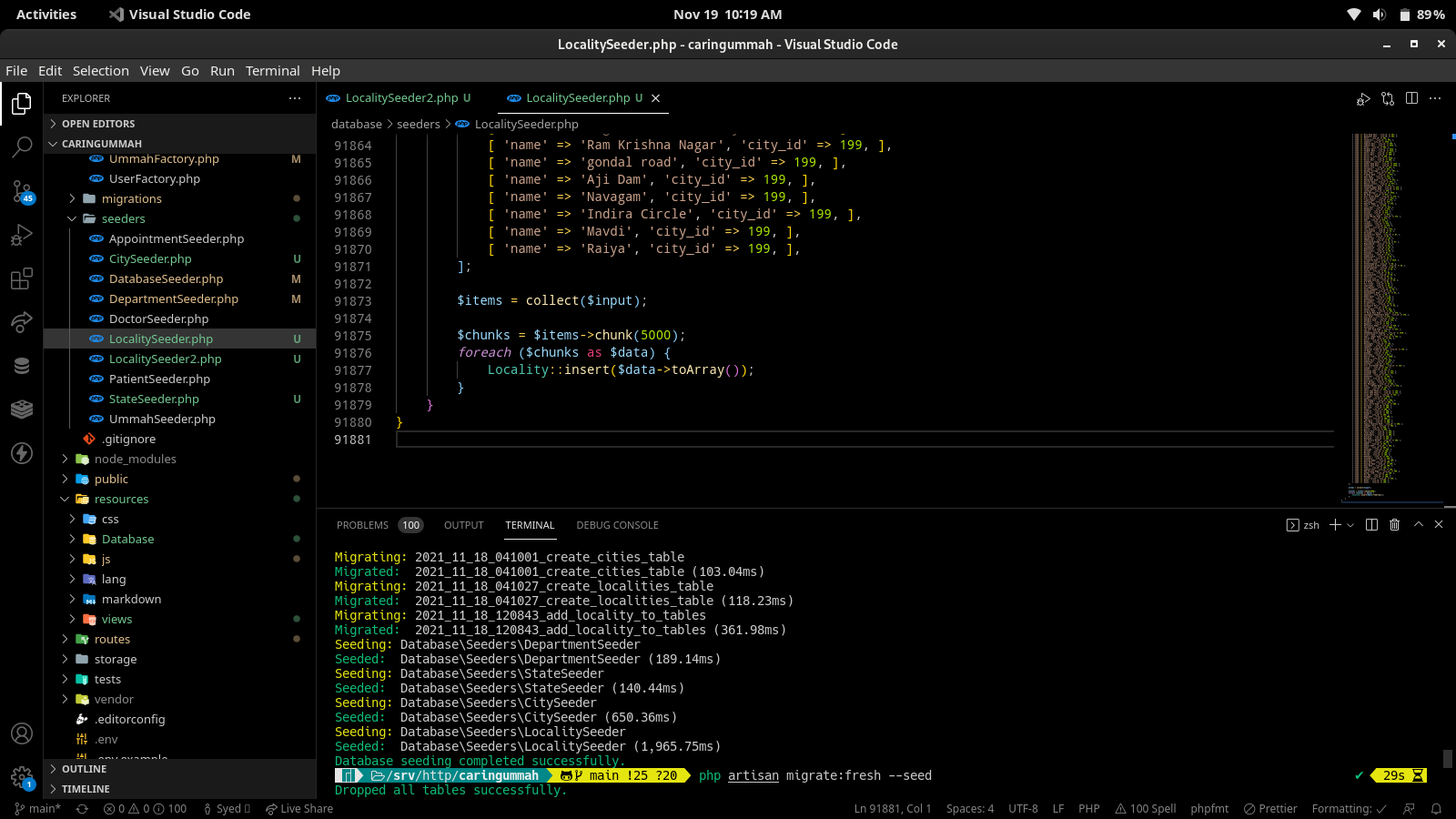

I made the changes suggested above by @CerebralFart, but was not satisfied because of the seeding time it was taking.

So I did some more research on chunk and found an

If there are any improvements I can make, please let me know.

Thanks @CerebralFart