I'm having trouble writing a file to my Databricks cluster's driver (as a temp file). I have a Scala notebook on my company's Azure Databricks that contains the following lines of code:

    val xml: String = Controller.requestTo(url)
    val bytes: Array[Byte] = xml.getBytes
    val path: String = "dbfs:/data.xml"
    val file: File = new File(path)
    FileUtils.writeByteArrayToFile(file, bytes)
    dbutils.fs.ls("dbfs:/")
    val df = spark.read.format("com.databricks.spark.xml")
      .option("rowTag", "generic:Obs")
      .load(path)
    df.show
    file.delete()

However, it crashes with org.apache.hadoop.mapreduce.lib.input.InvalidInputException: Input path does not exist: dbfs:/data.xml. When I run ls on the root of DBFS, the file data.xml is not listed, so it looks to me like FileUtils is not doing its job. What confuses me even more is that the following code works on the same cluster, in the same Azure resource group and the same Databricks instance, but in another notebook:

    val path: String = "mf-data.grib"
    val file: File = new File(path)
    FileUtils.writeByteArrayToFile(file, bytes)

I tried restarting the cluster, removing "dbfs:/" from the path, putting the file in the dbfs:/tmp/ directory, and using FileUtils.writeStringToFile(file, xml, StandardCharsets.UTF_8) instead of FileUtils.writeByteArrayToFile, but none of these worked, even when combined.
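For reference, here is a minimal sketch of how java.io.File interprets the two path strings used in the snippets above. It runs on any Unix-like JVM and does not need Databricks. It suggests that "dbfs:/data.xml" is treated as an ordinary relative path, just like "mf-data.grib", so the write probably lands under the JVM working directory rather than in DBFS. The helper toLocalPath is hypothetical, and it rests on the assumption that the cluster exposes DBFS to local file APIs under a /dbfs mount; check this for your workspace.

```scala
import java.io.File

// java.io.File does not understand URI schemes. On a Unix-like driver,
// "dbfs:/data.xml" is a relative path whose first directory is literally named "dbfs:".
val schemePath = new File("dbfs:/data.xml")
val plainPath  = new File("mf-data.grib")

println(schemePath.isAbsolute)       // whether the path is absolute for the local file system
println(schemePath.getParent)        // the directory component as java.io.File parsed it
println(schemePath.getAbsolutePath)  // resolved against the JVM working directory
println(plainPath.getAbsolutePath)   // resolved against the same working directory

// ASSUMPTION: DBFS is visible to local file APIs under the /dbfs mount.
// Hypothetical helper that rewrites a dbfs:/ URI into that local form.
def toLocalPath(dbfsUri: String): String =
  "/dbfs/" + dbfsUri.stripPrefix("dbfs:").dropWhile(_ == '/')

println(toLocalPath("dbfs:/data.xml"))
```

Under that assumption, the local API would write to new File(toLocalPath(path)), while spark.read and dbutils.fs would keep using the dbfs:/ form of the same location.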

CodePudding user response:

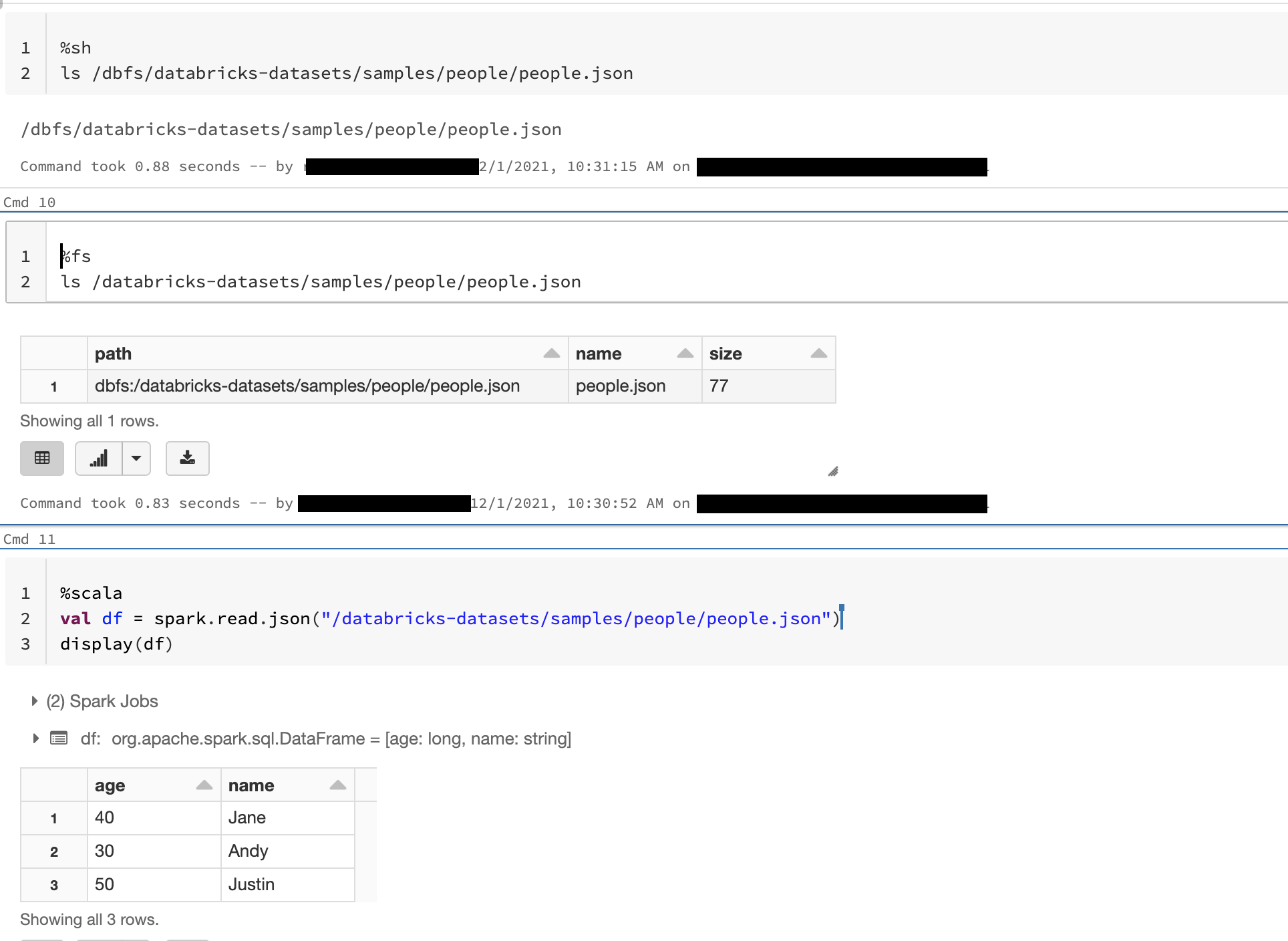

If you're using local APIs, such as File, you need to use the corresponding