Scenario

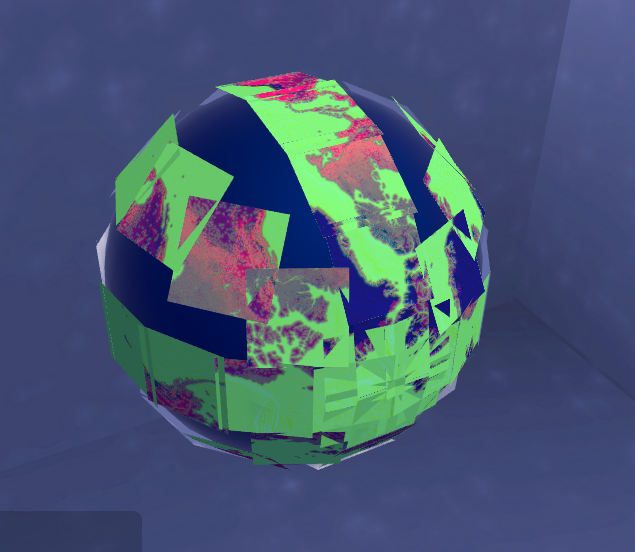

I'm using Unity C# to re-invent a Google Earth-like experience as a project. New tiles are loaded asynchronously from the web while the user pans the camera around the globe. So far I'm able to load in all the

**EDIT**

I've figured out how to get the tile position correct. Now I'm stuck on the correct rotation of the tiles.

The code that helped me the most was found with a question about

**EDIT 2**

after adding @Ruzihm's answer to compute normals

CodePudding user response:

You can position and rotate the planes in C#:

float x,y,z;

// ...

plane.transform.position = new Vector3(x,y,z);

// negative needed according to comments

Vector3 planeUp = new Vector3(x,y,-z);

Vector3 planeRight = Vector3.Cross(planeUp, Vector3.up);

Vector3 planeForward = Vector3.Cross(planeRight, planeUp);

plane.transform.rotation = Quaternion.LookRotation(planeForward, planeUp);

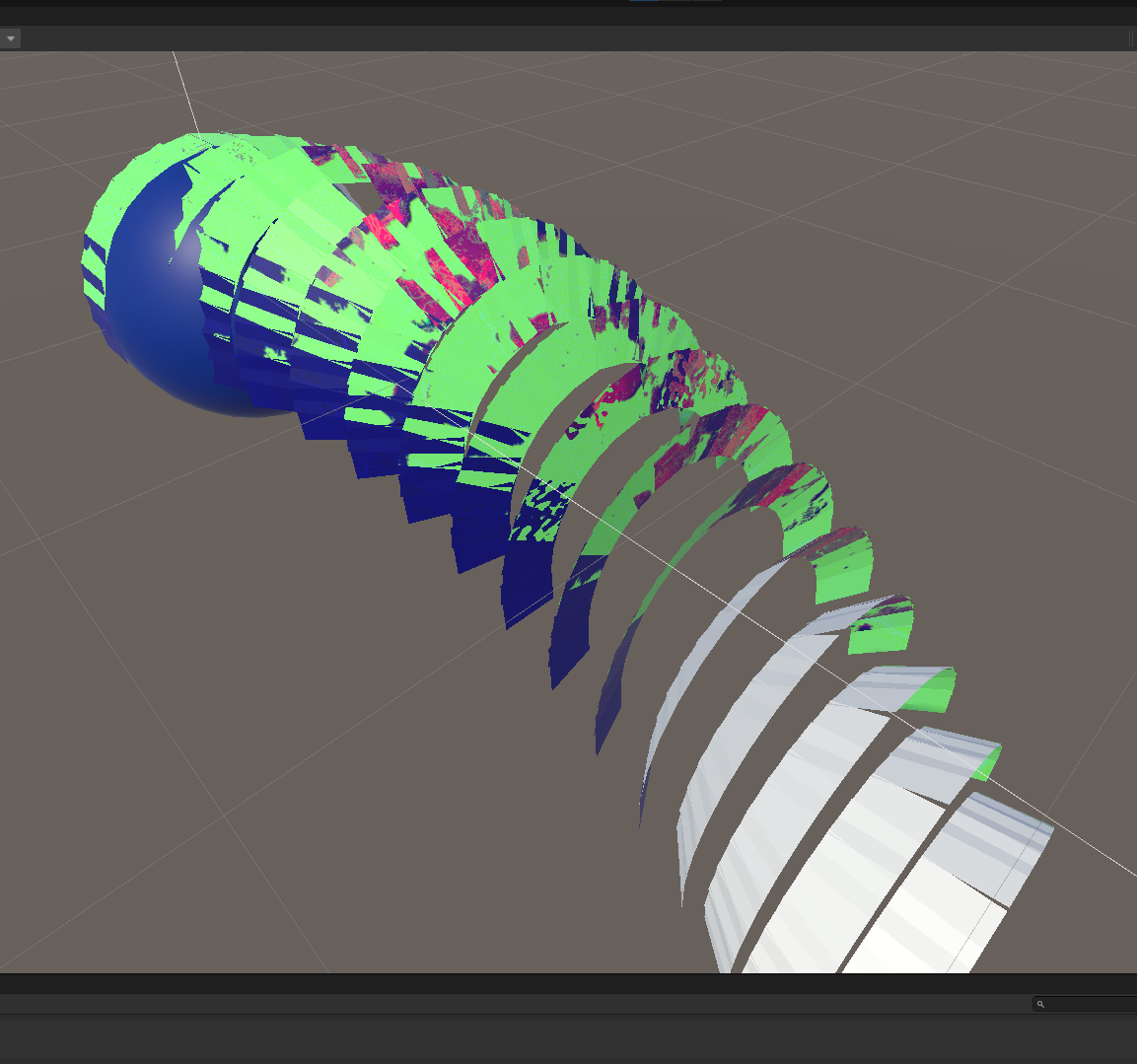
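
One caveat with the cross products above: when `planeUp` is parallel to `Vector3.up` (a tile sitting at a pole), the first cross product is zero and the rotation is undefined. Here is a minimal standalone sketch of the same construction with a fallback axis. It uses `System.Numerics.Vector3` so it runs outside Unity; `TileFrame`, `Build`, and the fallback choice are hypothetical, not part of the answer above.

```csharp
using System;
using System.Numerics;

public static class TileFrame
{
    // Builds right/forward/up axes for a tile whose outward direction is `outward`.
    // Same cross-product construction as above; the pole fallback is an assumption.
    public static (Vector3 right, Vector3 forward, Vector3 up) Build(Vector3 outward)
    {
        Vector3 up = Vector3.Normalize(outward);
        Vector3 reference = Vector3.UnitY; // plays the role of Unity's Vector3.up

        // Near the poles `up` is (anti)parallel to the reference axis and the
        // cross product collapses to zero, so switch to another reference axis.
        if (MathF.Abs(Vector3.Dot(up, reference)) > 0.999f)
            reference = Vector3.UnitZ;

        Vector3 right = Vector3.Normalize(Vector3.Cross(up, reference));
        Vector3 forward = Vector3.Cross(right, up); // unit length: right and up are orthonormal
        return (right, forward, up);
    }
}
```

In Unity you would feed the result to `Quaternion.LookRotation(forward, up)` exactly as in the snippet above.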

Making them bend into position is a lot harder, since it raises the question of how to project each square onto a curved surface. How do you manage overlaps? Gaps? How can the edges of each plane be aligned?

Anyway, until that is decided, here's something to help visualize the issues. You can trace a line from each vertex of the quad towards the middle of the sphere and find the point along that line that's the same distance from the center as the center of the plane. Luckily this is doable in a shader you can attach to the plane. For the sake of brevity, this assumes the center of the sphere is at the world origin (0,0,0):

Shader "Custom/SquareBender" {

Properties{

_MainTex("Tex", 2D) = "" {}

}

SubShader {

Pass {

Tags {"LightMode" = "Always"}

CGPROGRAM

#pragma vertex vert

#pragma fragment frag

#include "UnityCG.cginc"

struct appdata {

float4 vertex : POSITION;

float2 uv : TEXCOORD0;

};

struct v2f

{

float4 pos : SV_POSITION;

float2 uv : TEXCOORD0;

};

v2f vert(appdata v)

{

v2f o;

// everything in obj space

float4 worldOrigin = mul(unity_WorldToObject,

float4(0,0,0,1));

float4 fromOriginToObj = float4(0,0,0,1) - worldOrigin;

float4 fromOriginToPos = v.vertex - worldOrigin;

float4 dirPos = normalize(fromOriginToPos);

float r = length(fromOriginToObj);

o.pos = UnityObjectToClipPos(r*dirPos + worldOrigin);

o.uv = v.uv;

return o;

}

sampler2D _MainTex;

float4 frag(v2f IN) : COLOR

{

return tex2D(_MainTex, IN.uv);

}

ENDCG

}

}

FallBack "VertexLit"

}
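
The projection that vertex shader performs can also be checked on the CPU. The sketch below is a rough standalone equivalent, assuming world-space inputs and `System.Numerics.Vector3` in place of Unity's type; `SphereProjection` and `BendOntoSphere` are hypothetical names, and the `sphereCenter` parameter generalizes the shader's assumption that the sphere sits at the world origin.

```csharp
using System.Numerics;

public static class SphereProjection
{
    // Slides `vertex` along the ray from the sphere center until it is as far
    // from the center as `planeCenter` is, which is what the shader does per vertex.
    public static Vector3 BendOntoSphere(Vector3 vertex, Vector3 planeCenter, Vector3 sphereCenter)
    {
        float radius = Vector3.Distance(planeCenter, sphereCenter);
        Vector3 direction = Vector3.Normalize(vertex - sphereCenter);
        return sphereCenter + radius * direction;
    }
}
```

For a plane centered at (0, 0, 5), a corner at (1, 1, 5) is pulled inward so that it ends up exactly 5 units from the origin, while the plane's center does not move.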

CodePudding user response:

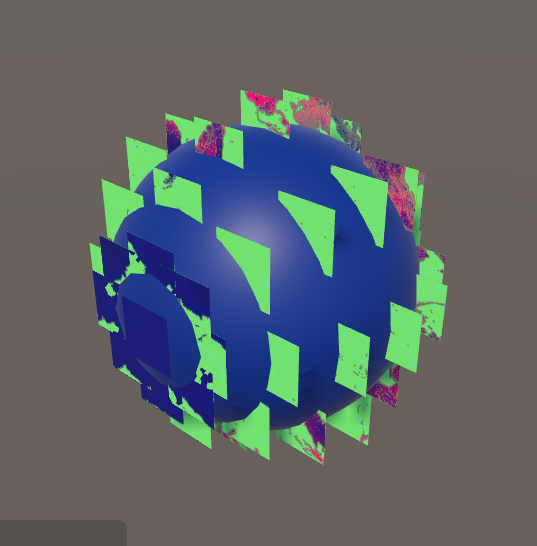

Instead of positioning and orienting the planes in C#, you can have the shader assign their position and orientation if you assign the latitude and longitude to each vertex, and also assign the sphere center and radius:

Shader "Custom/SquareBender" {

Properties{

_MainTex("Tex", 2D) = "" {}

_SphereCenter("SphereCenter", Vector) = (0, 0, 0, 1)

_SphereRadius("SphereRadius", Float) = 5

}

SubShader {

Pass {

Tags {"LightMode" = "Always"}

CGPROGRAM

#pragma vertex vert

#pragma fragment frag

#include "UnityCG.cginc"

struct appdata {

float2 uv : TEXCOORD0;

float2 latLon : TEXCOORD1;

};

struct v2f

{

float4 pos : SV_POSITION;

float2 uv : TEXCOORD0;

};

float4 _SphereCenter;

float _SphereRadius;

v2f vert(appdata v)

{

v2f o;

float lat = v.latLon.x;

float lon = v.latLon.y;

float4 posOffsetWorld = float4(

_SphereRadius*cos(lat)*cos(lon),

_SphereRadius*cos(lat)*sin(lon),

_SphereRadius*sin(lat), 0);

float4 posObj = mul(unity_WorldToObject,

posOffsetWorld + _SphereCenter);

o.pos = UnityObjectToClipPos(posObj);

o.uv = v.uv;

return o;

}

sampler2D _MainTex;

float4 frag(v2f IN) : COLOR

{

return tex2D(_MainTex, IN.uv);

}

ENDCG

}

}

FallBack "VertexLit"

}

And you can assign data to the vertices like this:

Vector2 GetLatLonOfVertex(Vector2Int tileIndex, Vector2 uv)

{

float lat = 0f, lon = 0f; // placeholders, in radians (the shader feeds them to sin/cos)

// use tileIndex and uv to calculate lat, lon

// uv = (0,0) for bottom left, (1,1) top right

// Exactly how you could do this depends on your tiling API...

return new Vector2(lat, lon);

}

// Call after mesh is created, and any additional vertices/uvs are set

void SetUpTileLatLons(Mesh mesh, Vector2Int tileIndex)

{

Vector2[] uvs = mesh.uv;

Vector2[] latLons = new Vector2[uvs.Length];

for (int i = 0; i < latLons.Length; i++)

{

latLons[i] = GetLatLonOfVertex(tileIndex, uvs[i]);

}

mesh.uv2 = latLons;

}
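
As an illustration of what `GetLatLonOfVertex` might compute, here is a sketch that assumes your tiles follow the common XYZ "slippy map" Web Mercator scheme (x grows eastward, y grows southward, 2^zoom tiles per axis). That scheme, the extra `zoom` parameter, and the names `SlippyTiles` and `LatLonOfVertex` are assumptions; your tiling API may differ.

```csharp
using System;

public static class SlippyTiles
{
    // Returns (latitude, longitude) in radians for a point inside a tile.
    // u, v are the vertex UVs: (0,0) is the bottom-left corner, (1,1) the top-right.
    public static (double lat, double lon) LatLonOfVertex(
        int tileX, int tileY, int zoom, double u, double v)
    {
        double n = Math.Pow(2.0, zoom);   // tiles per axis at this zoom level
        double x = tileX + u;             // u = 0 is the tile's west edge
        double y = tileY + (1.0 - v);     // tile y grows southward, v grows northward
        double lon = x / n * 2.0 * Math.PI - Math.PI;
        double lat = Math.Atan(Math.Sinh(Math.PI * (1.0 - 2.0 * y / n)));
        return (lat, lon);
    }
}
```

Inside `GetLatLonOfVertex` you would cast the two values to `float` and return them as a `Vector2`. The center of the single zoom-0 tile maps to latitude 0 and longitude 0.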

The more vertices your plane has, the rounder your sphere will appear, although it will cause more distortion to the textures on the tiles. The tradeoff is up to you. Just be sure that if you procedurally add more vertices/triangles, you assign appropriate uvs to them.
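
If you do add vertices procedurally, a regular grid is probably the simplest layout. The sketch below builds one with matching UVs; it uses `System.Numerics` types so it runs outside Unity, and `TileGrid` and `Build` are hypothetical names. The flat positions are only placeholders, since the shader repositions every vertex.

```csharp
using System;
using System.Numerics;

public static class TileGrid
{
    // Builds a flat grid of cells x cells quads. UVs run from (0,0) at the
    // bottom-left vertex to (1,1) at the top-right vertex.
    public static (Vector3[] vertices, Vector2[] uvs, int[] triangles) Build(int cells)
    {
        if (cells < 1) throw new ArgumentOutOfRangeException(nameof(cells));

        int side = cells + 1; // vertices per row and per column
        var vertices = new Vector3[side * side];
        var uvs = new Vector2[side * side];
        var triangles = new int[cells * cells * 6];

        for (int row = 0; row < side; row++)
        {
            for (int col = 0; col < side; col++)
            {
                int i = row * side + col;
                float u = (float)col / cells;
                float v = (float)row / cells;
                uvs[i] = new Vector2(u, v);
                vertices[i] = new Vector3(u - 0.5f, v - 0.5f, 0f); // placeholder position
            }
        }

        int t = 0;
        for (int row = 0; row < cells; row++)
        {
            for (int col = 0; col < cells; col++)
            {
                int bottomLeft = row * side + col;
                int bottomRight = bottomLeft + 1;
                int topLeft = bottomLeft + side;
                int topRight = topLeft + 1;

                // Two triangles per quad; swap the index order if tiles render inside out.
                triangles[t++] = bottomLeft;
                triangles[t++] = topLeft;
                triangles[t++] = topRight;
                triangles[t++] = bottomLeft;
                triangles[t++] = topRight;
                triangles[t++] = bottomRight;
            }
        }
        return (vertices, uvs, triangles);
    }
}
```

In Unity you would copy these arrays into `mesh.vertices`, `mesh.uv`, and `mesh.triangles` (converting to Unity's vector types) before calling `SetUpTileLatLons`.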

Note that the positions of the vertices are assigned in the shader based on the lat/lon and have nothing to do with the object's Transform.
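
Because of that, any CPU-side code that needs to know where a tile ends up (for example to place markers, or to set `Mesh.bounds` so culling does not rely on the flat, untransformed positions) has to repeat the shader's math. A minimal sketch of that mirror, assuming `System.Numerics.Vector3` and the hypothetical names `GlobeMath` and `LatLonToWorld`:

```csharp
using System;
using System.Numerics;

public static class GlobeMath
{
    // Same mapping as the vertex shader above: latitude/longitude in radians
    // to a world-space point on a sphere with the given center and radius.
    public static Vector3 LatLonToWorld(float lat, float lon, Vector3 center, float radius)
    {
        var offset = new Vector3(
            radius * MathF.Cos(lat) * MathF.Cos(lon),
            radius * MathF.Cos(lat) * MathF.Sin(lon),
            radius * MathF.Sin(lat));
        return center + offset;
    }
}
```

With the default property values (center at the origin, radius 5), latitude 0 and longitude 0 land at (5, 0, 0).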