I don't quite understand how non-linear regression can be analysed in R.

I found that I can still do a linear regression with `lm` by taking the log of my functions.

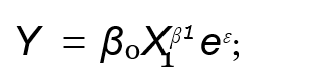

My first function is this one, where β1 is the intercept, β2 is the slope and ε is the error term :

I think that the following command gives me what I want:

```r
result <- lm(log(Y) ~ log(X1), data = dataset)
```
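One way to check that this `lm` call estimates the right quantities is to simulate data from a known model and see whether the coefficients come back. This sketch *assumes* the first function has the form log(Y) = β1 + β2·log(X1) + ε (equivalently Y = exp(β1)·X1^β2·exp(ε)); the exact form in the image may differ, and the sample size, parameter values and noise level are hypothetical.

```r
# Sketch only: assumes log(Y) = beta1 + beta2 * log(X1) + eps,
# i.e. Y = exp(beta1) * X1^beta2 * exp(eps). All values are hypothetical.
set.seed(1)
n <- 500
beta1 <- 0.5                          # hypothetical intercept on the log scale
beta2 <- 1.8                          # hypothetical slope on the log scale
X1 <- runif(n, min = 1, max = 10)     # log(X1) requires X1 > 0
eps <- rnorm(n, mean = 0, sd = 0.1)   # error term on the log scale
Y <- exp(beta1) * X1^beta2 * exp(eps)
dataset <- data.frame(Y = Y, X1 = X1)

# Same call as above: regress log(Y) on log(X1)
result <- lm(log(Y) ~ log(X1), data = dataset)
coef(result)  # should be close to 0.5 and 1.8
```

If the estimates land near the values used in the simulation, the log-log model is being fitted as intended.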

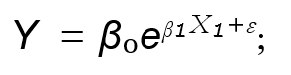

The problem is with the following function:

I don't know what I should put inside `lm` to perform a linear regression on this function. Any ideas?

Answer:

The following math shows how to transform your equation into a linear regression:

$$Y = b_0 \exp(b_1 X_1 + \varepsilon)$$

$$\log(Y) = \log(b_0) + b_1 X_1 + \varepsilon$$

$$\log(Y) = c_0 + b_1 X_1 + \varepsilon, \qquad \text{where } c_0 = \log(b_0)$$

So in R this is just:

```r
fitted_model <- lm(log(Y) ~ X1, data = dataset)
```
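To see that this recovers the parameters, here is a small simulation from the model above. The data frame `dataset`, the true values `b0 = 2` and `b1 = 0.3`, and the noise level are hypothetical choices for illustration. Note that `log(Y)` requires every `Y` to be positive, which this model guarantees when `b0 > 0`.

```r
# Simulate from Y = b0 * exp(b1 * X1 + epsilon) with hypothetical parameters
set.seed(42)
n <- 500
b0 <- 2      # hypothetical constant (must be > 0 so that log(Y) exists)
b1 <- 0.3    # hypothetical coefficient on X1
X1 <- runif(n, min = 0, max = 10)
epsilon <- rnorm(n, mean = 0, sd = 0.2)
dataset <- data.frame(X1 = X1, Y = b0 * exp(b1 * X1 + epsilon))

# Linear model on the log scale: log(Y) = c0 + b1 * X1 + epsilon
fitted_model <- lm(log(Y) ~ X1, data = dataset)

c0_hat <- unname(coef(fitted_model)["(Intercept)"])  # estimate of log(b0)
b1_hat <- unname(coef(fitted_model)["X1"])           # estimate of b1
c(c0_hat = c0_hat, b0_hat = exp(c0_hat), b1_hat = b1_hat)
```

The slope on `X1` estimates `b1` directly, while the intercept estimates `log(b0)`.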

You won't get a direct estimate of b0, though, only an estimate of c0 = log(b0). You can back-transform the intercept and its confidence interval by exponentiating them:

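```r
# exp() of the intercept (an estimate of log(b0)) gives an estimate of b0
b0_est <- exp(coef(fitted_model)["(Intercept)"])
# exp() is monotone, so exponentiating the CI endpoints gives a CI for b0
b0_ci <- exp(confint(fitted_model)["(Intercept)", ])
```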
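Because `exp()` is monotone increasing, exponentiating the endpoints of the interval for log(b0) gives an interval for b0 with the same coverage, and the back-transformed point estimate always lies inside it. A self-contained sketch on hypothetical simulated data (the values 1.5, 0.4 and the noise level are made up):

```r
# Hypothetical data from Y = b0 * exp(b1 * X1 + epsilon) with b0 = 1.5, b1 = 0.4
set.seed(7)
n <- 200
X1 <- runif(n, min = 0, max = 5)
dataset <- data.frame(X1 = X1, Y = 1.5 * exp(0.4 * X1 + rnorm(n, sd = 0.25)))
fitted_model <- lm(log(Y) ~ X1, data = dataset)

# Back-transform the intercept and its 95% confidence interval
b0_est <- unname(exp(coef(fitted_model)["(Intercept)"]))
b0_ci <- exp(confint(fitted_model, level = 0.95)["(Intercept)", ])
b0_est
b0_ci  # named vector with "2.5 %" and "97.5 %" entries
```

The resulting interval is not symmetric around `b0_est`, which is expected after an exponential back-transform.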