I'm trying to insert an entire 2D NumPy array into a single PySpark row. Does anyone know how to achieve this?

Ultimately I would like to achieve the result below, where my NumPy array sits in a single row.

I have tried to use a higher-order function to do this, but haven't been able to get it working so far. Does anyone have any advice?

import pyspark.sql.functions as f

import numpy as np

df = spark.createDataFrame(np.array([[0. , 0.67235401, 0.35767577],

[0.67235401, 0. , 0.2981656 ],

[0.35767577, 0.2981656 , 0. ]]))

expr = "TRANSFORM(arrays_zip(*), x -> struct(*))"

df = df.withColumn('array', f.expr(expr))

df.show(truncate=False)

CodePudding user response:

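A numpy.array can be converted into a PySpark DataFrame once it has been converted into a Python list. Wrapping that nested list in a one-element tuple makes the whole 2D array a single row with one column.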

Working Example

import numpy as np

np_array = np.array([[0. , 0.67235401, 0.35767577],

[0.67235401, 0. , 0.2981656 ],

[0.35767577, 0.2981656 , 0. ]])

df = spark.createDataFrame([(np_array.tolist(), )], ("array", ))

df.show(truncate=False)

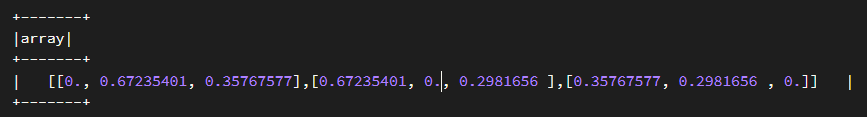

Output

+-------------------------------------------------------------------------------------------+
|array                                                                                      |
+-------------------------------------------------------------------------------------------+
|[[0.0, 0.67235401, 0.35767577], [0.67235401, 0.0, 0.2981656], [0.35767577, 0.2981656, 0.0]]|
+-------------------------------------------------------------------------------------------+
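
If you would rather not rely on schema inference, the same idea can be sketched with an explicit array<array<double>> schema, plus a helper to get the NumPy array back out of a collected row. This is only a sketch: the helper names (single_row_payload, array_to_single_row_df, row_to_array) are hypothetical and not part of PySpark, and spark is assumed to be an existing SparkSession.

```python
import numpy as np


def single_row_payload(arr):
    """Wrap a 2D array as one record: a list holding a single 1-tuple."""
    # tolist() turns NumPy floats into plain Python floats, which Spark accepts.
    return [(np.asarray(arr).tolist(),)]


def array_to_single_row_df(spark, arr, column="array"):
    """Build a one-row DataFrame whose only column holds the whole 2D array.

    `spark` is assumed to be an existing SparkSession (hypothetical helper).
    """
    # Imported lazily so the pure-Python helpers work without PySpark installed.
    from pyspark.sql.types import ArrayType, DoubleType, StructField, StructType

    # Explicit schema: array<array<double>> instead of relying on inference.
    schema = StructType([StructField(column, ArrayType(ArrayType(DoubleType())))])
    return spark.createDataFrame(single_row_payload(arr), schema)


def row_to_array(row, column="array"):
    """Turn the nested list stored in a collected Row back into a NumPy array."""
    return np.array(row[column])


np_array = np.array([[0.0, 0.67235401, 0.35767577],
                     [0.67235401, 0.0, 0.2981656],
                     [0.35767577, 0.2981656, 0.0]])

# One record containing one field: the full nested list.
payload = single_row_payload(np_array)
```

With a live session, df = array_to_single_row_df(spark, np_array) should give the same single-row DataFrame as above, and row_to_array(df.first()) should recover the original 3x3 array.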