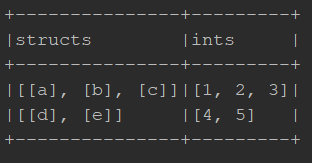

I have a dataframe that looks like this:

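```scala
import org.apache.spark.sql.Row
import org.apache.spark.sql.types._

val sourceData = Seq(
  Row(List(Row("a"), Row("b"), Row("c")), List(1, 2, 3)),
  Row(List(Row("d"), Row("e")), List(4, 5))
)

val sourceSchema = StructType(List(
  StructField("structs", ArrayType(StructType(List(StructField("structField", StringType))))),
  StructField("ints", ArrayType(IntegerType))
))

// createDataFrame(rows, schema) takes an RDD[Row] (or a java.util.List[Row]), not a Scala Seq
val sourceDF = sparkSession.createDataFrame(sparkSession.sparkContext.parallelize(sourceData), sourceSchema)
```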

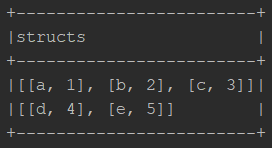

I want to transform it into a dataframe that looks like this:

```scala
val targetData = Seq(
  Row(List(Row("a", 1), Row("b", 2), Row("c", 3))),
  Row(List(Row("d", 4), Row("e", 5)))
)

val targetSchema = StructType(List(
  StructField("structs", ArrayType(StructType(List(
    StructField("structField", StringType),
    StructField("value", IntegerType)))))
))

val targetDF = sparkSession.createDataFrame(sparkSession.sparkContext.parallelize(targetData), targetSchema)
```

My best idea so far is to zip the two columns and then run a UDF that puts each int value into the corresponding struct.

Is there a more elegant way to do this, ideally without UDFs?
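For comparison, the UDF-based approach described in the question might look roughly like the sketch below. It is a hypothetical illustration, not the asker's actual code: it assumes Spark 3.x with the Scala API and a local `SparkSession`, and the names `Entry`, `zipIntoStructs` and `udfDF` are made up for this example.

```scala
import org.apache.spark.sql.{Row, SparkSession}
import org.apache.spark.sql.functions.{col, udf}
import org.apache.spark.sql.types._

// Hypothetical element type of the output array; its field names become the struct field names
case class Entry(structField: String, value: Int)

val spark = SparkSession.builder().master("local[*]").appName("udf-baseline").getOrCreate()

// Same input as in the question
val sourceSchema = StructType(List(
  StructField("structs", ArrayType(StructType(List(StructField("structField", StringType))))),
  StructField("ints", ArrayType(IntegerType))
))
val sourceDF = spark.createDataFrame(
  spark.sparkContext.parallelize(Seq(
    Row(List(Row("a"), Row("b"), Row("c")), List(1, 2, 3)),
    Row(List(Row("d"), Row("e")), List(4, 5))
  )),
  sourceSchema
)

// Hypothetical UDF: struct elements arrive as Row, int elements as Int.
// Scala's zip truncates to the shorter array.
val zipIntoStructs = udf { (structs: Seq[Row], ints: Seq[Int]) =>
  structs.zip(ints).map { case (s, i) => Entry(s.getString(0), i) }
}

val udfDF = sourceDF.select(zipIntoStructs(col("structs"), col("ints")).as("structs"))
udfDF.show(false)
```

One behavioral difference worth checking: Scala's `zip` silently drops extra elements when the arrays differ in length, whereas Spark's built-in `zip_with` and `arrays_zip` pad the shorter array with nulls.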

Answer:

Using the `zip_with` higher-order function (available in Spark SQL since 2.4):

```scala
sourceDF.selectExpr(
  "zip_with(structs, ints, (x, y) -> (x.structField as structField, y as value)) as structs"
).show(false)

// +------------------------+
// |structs                 |
// +------------------------+
// |[[a, 1], [b, 2], [c, 3]]|
// |[[d, 4], [e, 5]]        |
// +------------------------+
```
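If you prefer the Scala DSL over a SQL string, Spark 3.0 and later also expose `zip_with` in `org.apache.spark.sql.functions`, taking a Scala lambda over two `Column`s. A minimal sketch, assuming Spark 3.x and a local `SparkSession`; it rebuilds `sourceDF` from the question so it runs on its own, and `zipWithDF` is a hypothetical name.

```scala
import org.apache.spark.sql.{Column, Row, SparkSession}
import org.apache.spark.sql.functions.{col, struct, zip_with}
import org.apache.spark.sql.types._

val spark = SparkSession.builder().master("local[*]").appName("zip-with-dsl").getOrCreate()

// Same input as in the question
val sourceSchema = StructType(List(
  StructField("structs", ArrayType(StructType(List(StructField("structField", StringType))))),
  StructField("ints", ArrayType(IntegerType))
))
val sourceDF = spark.createDataFrame(
  spark.sparkContext.parallelize(Seq(
    Row(List(Row("a"), Row("b"), Row("c")), List(1, 2, 3)),
    Row(List(Row("d"), Row("e")), List(4, 5))
  )),
  sourceSchema
)

// zip_with(left, right, f) applies f to the N-th elements of both arrays.
// The aliases inside struct(...) become the field names of each output struct.
val zipWithDF = sourceDF.select(
  zip_with(col("structs"), col("ints"), (x: Column, y: Column) =>
    struct(x.getField("structField").as("structField"), y.as("value"))
  ).as("structs")
)

zipWithDF.show(false)
```

This keeps the whole transformation inside Catalyst expressions, so no data is deserialized into JVM objects the way a UDF requires.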

Answer:

You can use the `arrays_zip` function to zip the `structs` and `ints` columns, then apply the `transform` function to the zipped column to build the required structs.

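```scala
import org.apache.spark.sql.functions.{arrays_zip, col, expr}

sourceDF
  // Zipped elements are structs with fields named after the input columns: structs, ints
  .withColumn("structs", arrays_zip(col("structs"), col("ints")))
  .withColumn("structs",
    expr("transform(structs, s -> struct(s.structs.structField as structField, s.ints as value))"))
  .select("structs")
  .show(false)
```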

```
+------------------------+
|structs                 |
+------------------------+
|[{a, 1}, {b, 2}, {c, 3}]|
|[{d, 4}, {e, 5}]        |
+------------------------+
```
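The same `arrays_zip` + `transform` pipeline can also be written without a SQL string on Spark 3.0+, where `transform` is available in `org.apache.spark.sql.functions`. A minimal sketch, assuming Spark 3.x, a local `SparkSession`, and that `arrays_zip` names the zipped struct fields after the input columns (`structs` and `ints`), which the SQL expression above also relies on; `dslDF` is a hypothetical name.

```scala
import org.apache.spark.sql.{Column, Row, SparkSession}
import org.apache.spark.sql.functions.{arrays_zip, col, struct, transform}
import org.apache.spark.sql.types._

val spark = SparkSession.builder().master("local[*]").appName("transform-dsl").getOrCreate()

// Same input as in the question
val sourceSchema = StructType(List(
  StructField("structs", ArrayType(StructType(List(StructField("structField", StringType))))),
  StructField("ints", ArrayType(IntegerType))
))
val sourceDF = spark.createDataFrame(
  spark.sparkContext.parallelize(Seq(
    Row(List(Row("a"), Row("b"), Row("c")), List(1, 2, 3)),
    Row(List(Row("d"), Row("e")), List(4, 5))
  )),
  sourceSchema
)

// arrays_zip yields array<struct<structs: struct<structField>, ints: int>>;
// transform then reshapes each zipped element into struct<structField, value>.
val dslDF = sourceDF.select(
  transform(
    arrays_zip(col("structs"), col("ints")),
    (s: Column) => struct(
      s.getField("structs").getField("structField").as("structField"),
      s.getField("ints").as("value")
    )
  ).as("structs")
)

dslDF.show(false)
```

The explicit `(s: Column)` annotation picks the single-argument overload of `transform`; there is also an overload whose lambda receives the element index as a second argument.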