This is my XML file

<Lieferantenbestellungen>

<Lieferantenbestellung>

<Jahr>2021</Jahr>

<Nummer>1</Nummer>

<Datum>2021-01-07</Datum>

<Lieferant_ID>459</Lieferant_ID>

<Positionen>

<Position Artikel="MCGPXO96" Bezeichnung="Refined Rubber Tuna" Menge="1" />

<Position Artikel="IYTAFI28" Bezeichnung="Generic Cotton Chicken" Menge="8" />

</Positionen>

</Lieferantenbestellung>

</Lieferantenbestellungen>

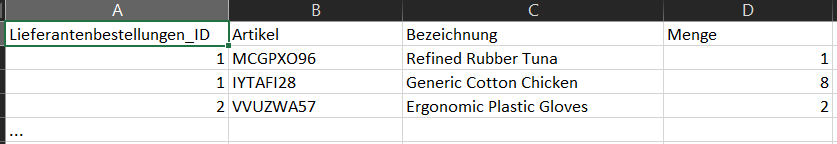

I want to extract the Lieferant_ID, Artikel, Bezeichnung, Bezeichnung and Menge from the file.

I have this python code:

with open('Lieferantenbestellungen.xml', 'r') as f:

soup = BeautifulSoup(f.read(), 'lxml')

# Get the data you want

df = pd.DataFrame(list(zip(

[el.text for el in soup.find_all('Lieferant_ID')],

[el.text for el in soup.find_all('Artikel')],

[el.text for el in soup.find_all('Bezeichnung')],

[el.text for el in soup.find_all('Menge')]

)), columns=['Lieferant_ID', 'Artikel', 'Bezeichnung', 'Menge'])

# Dump to csv

df.to_csv('out.csv', index=False)

When I run the code it generates the CSV file but it's empty. Does anyone have any idea of what am I doing wrong?

Thanks in advance

CodePudding user response:

pandas now has .read_xml() which you can use directly.

>>> pd.read_xml('Lieferantenbestellungen.xml', xpath='.//Lieferant_ID | .//Position')

Lieferant_ID Artikel Bezeichnung Menge

0 459.0 None None NaN

1 NaN MCGPXO96 Refined Rubber Tuna 1.0

2 NaN IYTAFI28 Generic Cotton Chicken 8.0

You can .ffill() and .dropna() to "align" the results.

>>> df = pd.read_xml('Lieferantenbestellungen.xml', xpath='.//Lieferant_ID | .//Position')

>>> df['Lieferant_ID'] = df['Lieferant_ID'].ffill()

>>> df.dropna()

Lieferant_ID Artikel Bezeichnung Menge

1 459.0 MCGPXO96 Refined Rubber Tuna 1.0

2 459.0 IYTAFI28 Generic Cotton Chicken 8.0

CodePudding user response:

To parse xml, you need to do soup = BeautifulSoup(markup, "xml"), not "lxml".

When you do this, soup.find_all('Lieferant_ID') returns ['459'].

However, doing find_all() a bunch of times isn't a great idea because you have multiple Positionen tags per Lieferantenbestellung. Instead, I suggest you find_all("Lieferantenbestellung"), and then process each of these tags.

Also, for debugging, it's easier if you split your code over multiple lines instead of stuffing everything into a single line.

with open('Lieferantenbestellungen.xml', 'r') as f:

soup = BeautifulSoup(f.read(), 'lxml')

rows = []

for tag in soup.find_all("Lieferantenbestellung")

lief_id = tag.findChild("Lieferant_ID")

if lief_id is None:

continue

for ptag in tag.findChild("Positionen").findChildren("Position"):

row = dict() # Make a new row

row["Lieferant_ID"] = lief_id.text

row["Artikel"] = ptag.attrs["Artikel"]

row["Bezeichnung"] = ptag.attrs["Bezeichnung"]

row["Menge"] = ptag.attrs["Menge"]

rows.append(row)

Now, you can create your dataframe using pd.DataFrame.from_records()

pd.DataFrame.from_records(rows)

Lieferant_ID Artikel Bezeichnung Menge

0 459 MCGPXO96 Refined Rubber Tuna 1

1 459 IYTAFI28 Generic Cotton Chicken 8

CodePudding user response:

import xml.etree.ElementTree as ET

import csv

tree = ET.parse('your_file.xml')

root = tree.getroot()

returnitem = {}

for i in root.findall('Lieferantenbestellung'):

id = i.get('Lieferant_ID')

returnitem["Lieferant_ID"] = id

num = 0

for i2 in i.find("Positionen").findall("Position"):

Artikel = i2.find("Artikel").text

Bezeichnung = i2.find("Bezeichnung").text

Menge = i2.find("Menge").text

num = 1

returnitem["Data",str(num)] = {"Artikel":Artikel,"Bezeichnung":Bezeichnung,"Menge":Menge}

with open('result.csv', 'w') as f:

for key in returnitem.keys():

f.write("%s, %s\n" % (key, returnitem[key]))

This program should able to solve your problem But there maybe some data in json/dictonary form