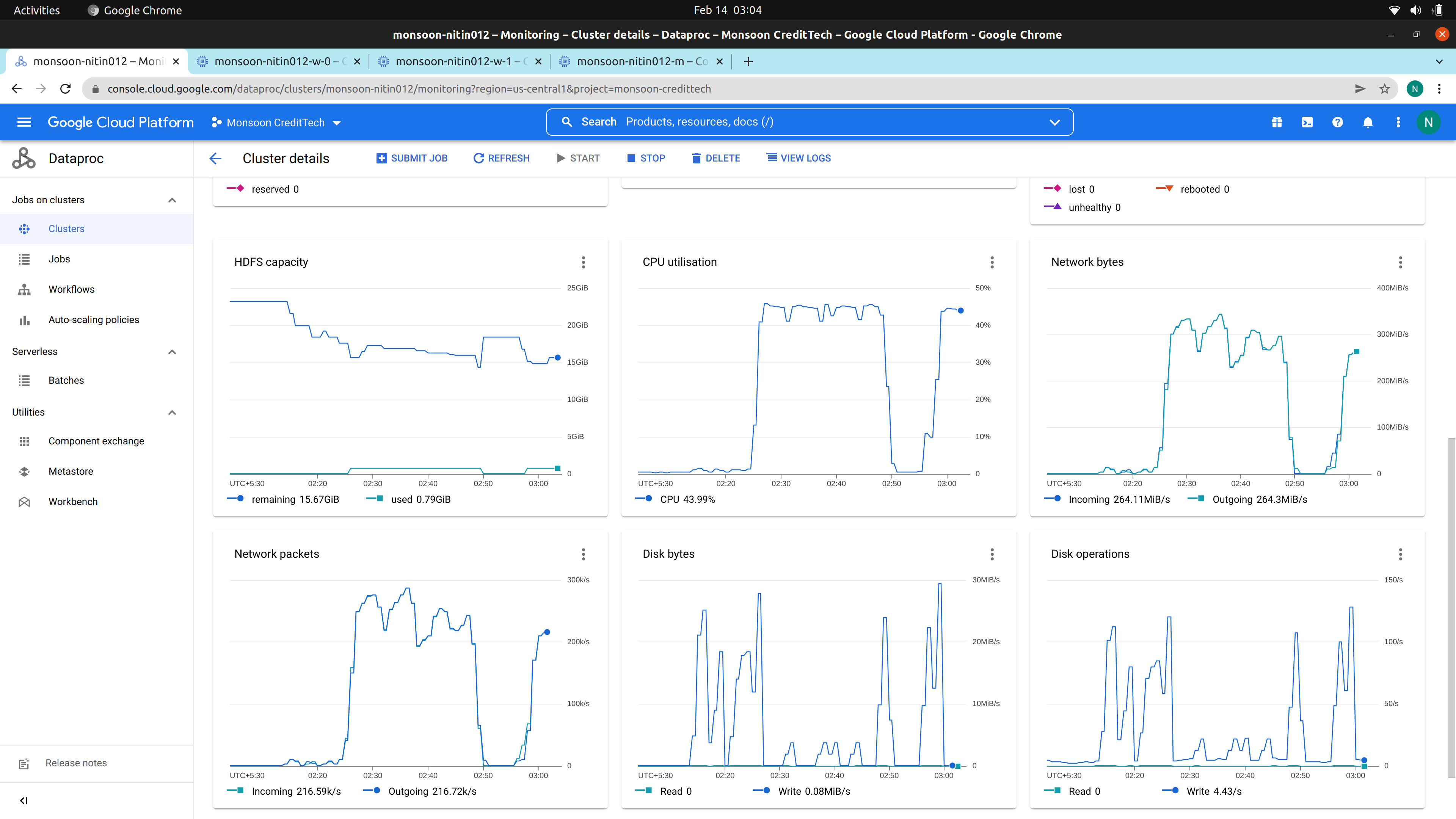

I am running a PySpark job on Dataproc, and my total HDFS capacity is not staying constant.

As you can see in the first chart, the remaining HDFS capacity is falling even though the used HDFS capacity is minimal. Why is remaining + used not constant?

CodePudding user response:

The "used" metric in the monitoring graph is actually "DFS Used"; it does not include "Non DFS Used". If you open the HDFS UI from the Component Gateway web interfaces, you should see something like:

Configured Capacity : 232.5 GB

DFS Used : 38.52 GB

Non DFS Used : 45.35 GB

DFS Remaining : 148.62 GB

DFS Used% : 16.57 %

DFS Remaining% : 63.92 %

The formulas are:

DFS Remaining = Total Disk Space - max(Reserved Space, Non-DFS Used) - DFS Used

Configured capacity = Total Disk Space - Reserved Space

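Reserved Space is controlled by the `dfs.datanode.du.reserved` property, which defaults to 0. So in your case it is the non-DFS usage that gets deducted: as the job writes data outside HDFS onto the same disks, Non DFS Used grows and DFS Remaining shrinks, even though DFS Used barely changes.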
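To see how the numbers fit together, here is a minimal sketch that plugs the sample values from the HDFS UI above into the two formulas. The function and variable names are made up for illustration, and it assumes Reserved Space is 0 (the default), so Total Disk Space equals Configured Capacity.

```python
def configured_capacity(total_disk_gb, reserved_gb):
    # Configured Capacity = Total Disk Space - Reserved Space
    return total_disk_gb - reserved_gb


def dfs_remaining(total_disk_gb, reserved_gb, non_dfs_used_gb, dfs_used_gb):
    # DFS Remaining = Total Disk Space - max(Reserved Space, Non-DFS Used) - DFS Used
    return total_disk_gb - max(reserved_gb, non_dfs_used_gb) - dfs_used_gb


# Sample values from the HDFS UI output above, in GB.
# Assumption: dfs.datanode.du.reserved is left at its default of 0.
total_disk = 232.5
reserved = 0.0
non_dfs_used = 45.35
dfs_used = 38.52

capacity = configured_capacity(total_disk, reserved)
remaining = dfs_remaining(total_disk, reserved, non_dfs_used, dfs_used)

print(f"Configured Capacity: {capacity:.2f} GB")
print(f"DFS Remaining:       {remaining:.2f} GB")
print(f"DFS Used%:           {100 * dfs_used / capacity:.2f} %")
print(f"DFS Remaining%:      {100 * remaining / capacity:.2f} %")

# If non-DFS usage grows by 20 GB while DFS Used stays the same,
# DFS Remaining drops by the same 20 GB.
later = dfs_remaining(total_disk, reserved, non_dfs_used + 20, dfs_used)
print(f"DFS Remaining after 20 GB more non-DFS data: {later:.2f} GB")
```

The computed DFS Remaining matches the UI value to within rounding of the displayed figures. The last line shows the effect in the chart: used + remaining is only constant if non-DFS usage is constant too.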