I am using tf.keras.utils.image_dataset_from_directory to load a dataset of 4575 images. This function can split the data into two subsets (with the validation_split parameter), but I want to split it into three: training, testing, and validation.

I have tried using dataset.skip() and dataset.take() to further split one of the resulting subsets, but these functions return a SkipDataset and a TakeDataset respectively (by the way, contrary to

But when using a SkipDataset, they are not:

CodePudding user response:

The issue is that test_val_ds.take(686) and test_val_ds.skip(686) do not take and skip samples. The dataset is already batched, so they take and skip batches. Run print(val_dataset.cardinality()) to see how many batches you have actually reserved for validation. My guess is that val_dataset is empty, because you do not have 686 batches to skip in the first place. Here is a working example:

import pathlib

import tensorflow as tf

# Download and extract the flower photos example dataset.
dataset_url = "https://storage.googleapis.com/download.tensorflow.org/example_images/flower_photos.tgz"
data_dir = tf.keras.utils.get_file('flower_photos', origin=dataset_url, untar=True)
data_dir = pathlib.Path(data_dir)

batch_size = 32

# 80% of the files go to training.
train_ds = tf.keras.utils.image_dataset_from_directory(
    data_dir,
    validation_split=0.2,
    subset="training",
    seed=123,
    image_size=(180, 180),
    batch_size=batch_size)

# The remaining 20% are split further into test and validation below.
val_ds = tf.keras.utils.image_dataset_from_directory(
    data_dir,
    validation_split=0.2,
    subset="validation",
    seed=123,
    image_size=(180, 180),
    batch_size=batch_size)

# take() and skip() count batches here, because val_ds is already batched.
test_dataset = val_ds.take(5)
val_ds = val_ds.skip(5)

print('Batches for testing -->', test_dataset.cardinality())
print('Batches for validating -->', val_ds.cardinality())

model = tf.keras.Sequential([
    tf.keras.layers.Rescaling(1./255, input_shape=(180, 180, 3)),
    tf.keras.layers.Conv2D(16, 3, padding='same', activation='relu'),
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Conv2D(32, 3, padding='same', activation='relu'),
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Conv2D(64, 3, padding='same', activation='relu'),
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(5)
])

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
              metrics=['accuracy'])

epochs=1

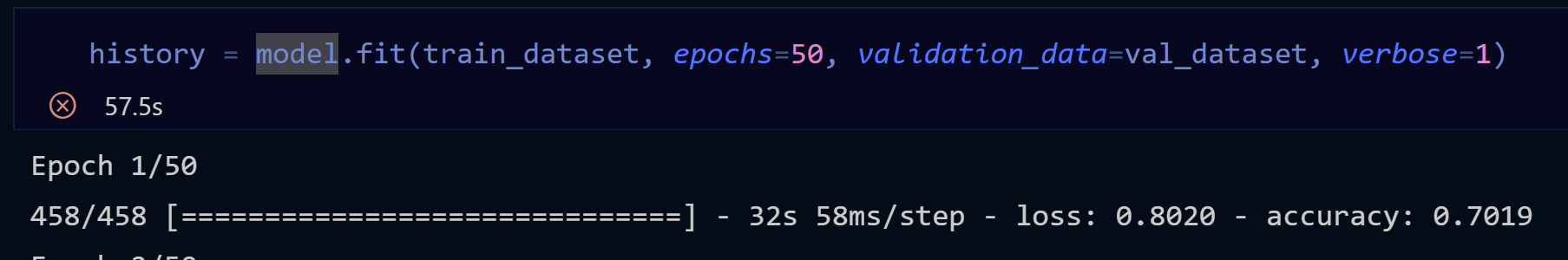

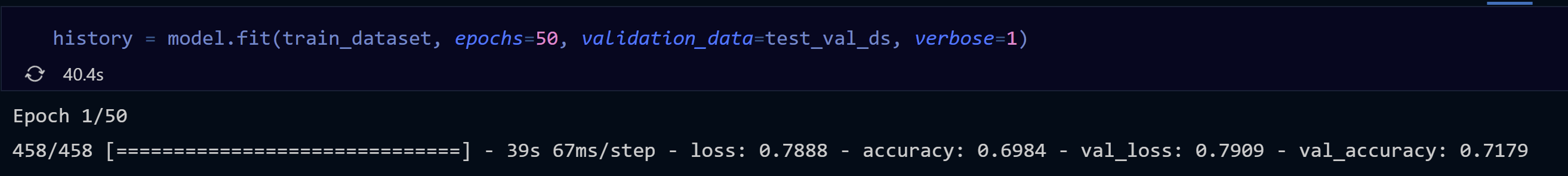

history = model.fit(
    train_ds,
    validation_data=val_ds,
    epochs=epochs
)

Found 3670 files belonging to 5 classes.
Using 2936 files for training.
Found 3670 files belonging to 5 classes.
Using 734 files for validation.
Batches for testing --> tf.Tensor(5, shape=(), dtype=int64)
Batches for validating --> tf.Tensor(18, shape=(), dtype=int64)
92/92 [==============================] - 96s 1s/step - loss: 1.3516 - accuracy: 0.4489 - val_loss: 1.1332 - val_accuracy: 0.5645

In this example, with a batch_size of 32, the validation subset of 734 files yields 23 batches (734 / 32, rounded up). 5 of those batches go to the test set and the remaining 18 stay in the validation set.
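If you would rather split at a number of samples than a number of batches, one option is to unbatch, split, and batch again. The snippet below is only a minimal sketch on a toy dataset of 100 integers standing in for images. The names split_by_samples and count_samples are hypothetical helpers I made up for illustration, not part of TensorFlow.

```python
import tensorflow as tf

# Toy stand-in for an image dataset: 100 integer "samples" in batches of 32.
batch_size = 32
batched = tf.data.Dataset.range(100).batch(batch_size)

# On a batched dataset, take() and skip() count batches, not samples.
print(int(batched.cardinality()))            # 4 batches (32 + 32 + 32 + 4)
print(int(batched.skip(686).cardinality()))  # 0, nothing is left to skip into


def split_by_samples(batched_ds, n_first, batch_size):
    """Hypothetical helper: split a batched dataset at a sample count."""
    samples = batched_ds.unbatch()  # back to one element per sample
    first = samples.take(n_first).batch(batch_size)
    rest = samples.skip(n_first).batch(batch_size)
    return first, rest


def count_samples(ds):
    """Hypothetical helper for this toy dataset, where each element is one tensor."""
    # The cardinality is unknown after unbatch(), so count by iterating.
    return sum(int(batch.shape[0]) for batch in ds)


toy_test, toy_val = split_by_samples(batched, n_first=30, batch_size=batch_size)
print(count_samples(toy_test), count_samples(toy_val))  # 30 70
```

One caveat, stated as an assumption about your setup: if the source dataset is reshuffled on every iteration, the take() and skip() parts may not stay disjoint from one pass to the next. Loading the subset with shuffle=False before splitting is one way to avoid that.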