I've been web-scraping a website that has information on many chemical compounds. The problem is that despite all the pages having some information that is the same, it's not consistent. So that means I'll have different amount of columns with each extraction. I want to organize everything in an Excel file so that it's easier for me to filter the information that I want but I've been having a lot of trouble with it.

Examples (there's way more than only 3 dataframes being extracted though): DF 1 - From web-scraping the first page

| Compound Name | Study Type | Cas Number | EC Name | Remarks | Conclusions |

|---|---|---|---|---|---|

| Aspirin | Specific | 3439-73-9 | Aspirin | Repeat | Approved |

DF 2 - From web-scraping

| Compound Name | Study Type | Cas Number | EC Name | Remarks | Conclusions | Summary |

|---|---|---|---|---|---|---|

| EGFR | Specific | 738-9-8 | EGFR | Repeat | Not Approved | None Conclusive |

DF 3 - From web-scraping

| Compound Name | Study Type | Cas Number | Remarks | Conclusions |

|---|---|---|---|---|

| Benzaldehyde | Specific | 384-92-2 | Repeat | Not Approved |

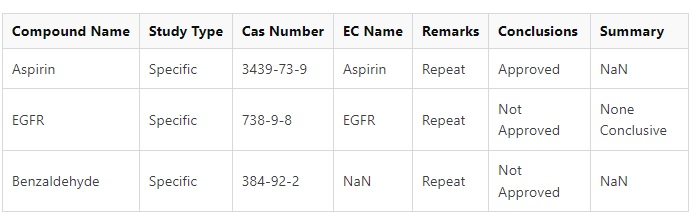

What I want is something like this:

FINAL DF (image)

I've tried so many things with pd.concat but all attempts were unsucessful.

The closest I've gotten was something similar to this, repeating the columns:

| Compound Name | Study Type | Cas Number | EC Name | Remarks | Conclusions |

|---|---|---|---|---|---|

| Aspirin | Specific | 3439-73-9 | Aspirin | Repeat | Approved |

| Compound Name | Study Type | Cas Number | Remarks | Conclusions | |

| Benzaldehyde | Specific | 384-92-2 | Repeat | Not Approved | |

| Compound Name | Study Type | Cas Number | EC Name | Remarks | Conclusions |

| EGFR | Specific | 738-9-8 | EGFR | Repeat | Not Approved |

Here's a little bit of the current code I'm trying to write:

compound_info = []

descriptor_info = []

df_list = []

df = pd.DataFrame()

df_final = pd.DataFrame(columns=['Compound Name',

'Study Type',

'CAS Number',

'EC Name',

'Remarks',

'Conclusions'])

for i in range(1,num_btn_selecionar 1):

time.sleep(10)

driver.find_element_by_xpath('//*[@id="SectionHeader"]/div[3]/select/option[' str(i) ']').click()

page_source = driver.page_source

soup = BeautifulSoup(page_source, "html.parser")

info = soup.find_all("dl", {'class':'HorDL'})

lista_info = len(info)

all_info_compound = []

all_descrip_compound = []

for y in range(0, lista_info):

for z in info[y].find_all('dd'):

all_info_compound.append(z.text)

for w in info[y].find_all('dt'):

all_descrip_compound.append(w.text)

compound_info.append(all_info_compound)

descriptor_info.append(all_descrip_compound)

data_tuples = list(zip(all_descrip_compound[1:],all_info_compound[1:]))

temp_df = pd.DataFrame(data_tuples)

data_transposed = temp_df.T

#df_list.append(data_transposed)

pd.concat([df_final,data_transposed], ignore_index=True, axis=0)

The error I get is:

InvalidIndexError: Reindexing only valid with uniquely valued Index objects

I would highly appreciate the help!

CodePudding user response:

pd.concat should do the job. The reason for that error is that one of the dataframes in concat, which is very likely to be data_transposed, has two columns sharing the same name. To see this, you can replace your last line with

try:

df_final = pd.concat([df_final,data_transposed], ignore_index=True, axis=0)

except Exception as e:

print('Error:', e)

print('df_final:', df_final.columns)

print('data_transposed:', data_transposed.columns)

Also in your last line you never overwrite your df_final with the new, concatenated one, you need df_final = in the front as I have added to my code above.