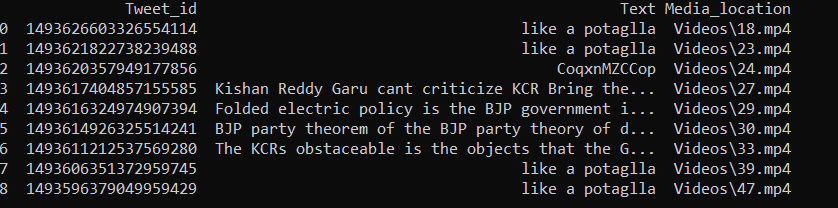

I have a dataframe which looks like this:

I'm building a model which takes text and video as input. So, my aim is to load the Text and Media_location (which contains video files path) from the dataframe, so that it is iterable when I feed df['Text'] and the video (loaded from path df['Media_location']) together.

I couldn't find any implemenations in tensorflow that would do this sort of thing, so drop any suggestions you may have.

CodePudding user response:

You can use tensorflow.keras.utils.Sequence.

import math

from tensorflow.keras.utils import Sequence

class Dataloader(Sequence):

def __init__(self, df, y_array, batch_size):

self.df, self.y_array = df, y_array

self.batch_size = batch_size

def __len__(self):

return math.ceil(len(self.df) / self.batch_size)

def __getitem__(self, idx):

slices = slice(idx*self.batch_size, (idx 1)*self.batch_size, None)

return [(tuple(a), b) for a, b in zip(self.df[['Text', 'Media_location']].iloc[slices].values, self.y_array[slices])]

example:

import numpy as np

for batch in Dataloader(df, np.random.randint(0, 2, size=10), 3):

for (text, video), label in batch:

print((text, video), label)

print()

output:

('E DDC', 'Videos\\17.mp4') 0

('CBAD ', 'Videos\\80.mp4') 1

('EBBBBB E', 'Videos\\07.mp4') 1

('ABB B ', 'Videos\\68.mp4') 0

('BCDADDA A', 'Videos\\73.mp4') 1

('CDECECADE', 'Videos\\04.mp4') 1

('EADBDBC', 'Videos\\85.mp4') 1

('ABCCBC AA', 'Videos\\50.mp4') 1

('DEBCA', 'Videos\\32.mp4') 1

('DD CCCB', 'Videos\\24.mp4') 0

CodePudding user response:

You can try using tensorflow-io, which will run in graph mode. Just run pip install tensorflow-io and then try:

import tensorflow as tf

import tensorflow_io as tfio

import pandas as pd

df = pd.DataFrame(data={'Text': ['some text', 'some more text'],

'Media_location': ['/content/sample-mp4-file.mp4', '/content/sample-mp4-file.mp4']})

dataset = tf.data.Dataset.from_tensor_slices((df['Text'], df['Media_location']))

def decode_videos(x, y):

video = tf.io.read_file(y)

video = tfio.experimental.ffmpeg.decode_video(video)

return x, video

dataset = dataset.map(decode_videos)

for x, y in dataset:

print(x, y.shape)

tf.Tensor(b'some text', shape=(), dtype=string) (1889, 240, 320, 3)

tf.Tensor(b'some more text', shape=(), dtype=string) (1889, 240, 320, 3)

In this example, each video contains 1889 frames.