I am training the following neural network with TensorFlow:

    import tensorflow as tf
    from tensorflow import keras
    from tensorflow.keras.layers import Input, Dense, Dropout, Flatten

    # k_i, k_f and train_datasets are assumed to be defined elsewhere in the script.
    def build_model():
        inputs_layers = []
        concat_layers = []

        # One input branch per k, so the number of inputs stays flexible.
        for k in range(k_i, k_f + 1):
            kmers = train_datasets[k].shape[1]
            unique_kmers = train_datasets[k].shape[2]

            input = Input(shape=(kmers, unique_kmers))
            inputs_layers.append(input)

            x = Dense(4, activity_regularizer=tf.keras.regularizers.l2(0.2))(input)
            x = Dropout(0.4)(x)
            x = Flatten()(x)
            concat_layers.append(x)

        # Merge the flattened branches and classify.
        inputs = keras.layers.concatenate(concat_layers, name='concat_layer')
        x = Dense(4, activation='relu', activity_regularizer=tf.keras.regularizers.l2(0.2))(inputs)
        x = Dropout(0.3)(x)
        x = Flatten()(x)
        outputs = Dense(1, activation='sigmoid')(x)

        return inputs_layers, outputs

I used the for loop for creating the input layers because I need them to be flexible.

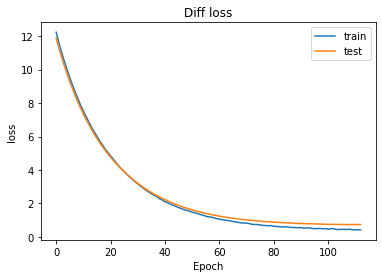

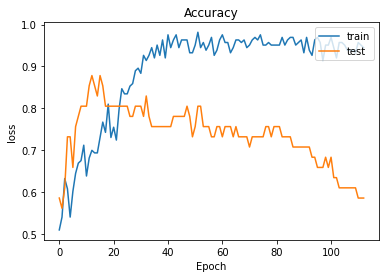

The problem is that when I train this NN, at the beginning the validation loss starts going down, as the accuracy goes up. But after some point, the validation accuracy starts to go down while the loss keeps going down.

I understand that this might be possible because the accuracy is measured after the output probabilities are converted into 1 or 0, but I would expect this to be an exception that only occurs when I am not "lucky" with a particular validation set. However, I shuffled my dataset and drew different validation sets several times, and the result is always the same: loss and accuracy go down together.
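To make the thresholding argument concrete, here is a small sketch with made-up probabilities (toy numbers, not taken from the model above). It shows one way the mean binary cross-entropy can drop while the accuracy at a 0.5 threshold also drops: most predictions become much more confident, while one sample slips just across the threshold.

```python
import math

def binary_cross_entropy(y_true, y_prob):
    """Mean binary cross-entropy over labels and predicted probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)

def accuracy(y_true, y_prob, threshold=0.5):
    """Fraction of samples whose thresholded prediction matches the label."""
    hits = sum((p >= threshold) == bool(y) for y, p in zip(y_true, y_prob))
    return hits / len(y_true)

y_true = [1, 1, 1, 0]

# Hypothetical "early" epoch: every sample is on the right side of 0.5, barely.
early_probs = [0.55, 0.55, 0.55, 0.45]
# Hypothetical "late" epoch: three samples are confident, one positive drops to 0.45.
late_probs = [0.95, 0.95, 0.45, 0.05]

early_loss = binary_cross_entropy(y_true, early_probs)
late_loss = binary_cross_entropy(y_true, late_probs)
early_acc = accuracy(y_true, early_probs)
late_acc = accuracy(y_true, late_probs)

# The late epoch has the lower loss and also the lower accuracy.
print(f"early: loss={early_loss:.3f} acc={early_acc:.2f}")
print(f"late:  loss={late_loss:.3f} acc={late_acc:.2f}")
```

With only four samples this is an exaggeration, but the same mechanism can act on a real validation set when many predictions sit close to the threshold.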

I understand that the model is overfitting. Despite that, I would still expect accuracy and loss to be correlated. I am using a stop_early callback that monitors val_loss. I don't like the idea of changing it to monitor val_accuracy, because I feel I would be losing fitness (I would prevent val_loss from reaching its lowest value).

CodePudding user response:

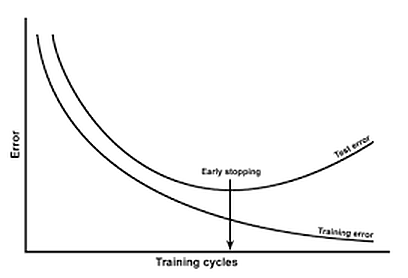

One technique to stop training in such a case is early stopping (see also the Keras Early Stopping callback).

Avoiding overfitting is tricky. You are already employing one method, the Dropout layer. You can try increasing the dropout rate, but there is a sweet spot, and "wrong" values will just hurt the model quality and performance.

The holy grail here is usually "more data". If that is not available, you can try data augmentation.

CodePudding user response:

This is not so unusual, especially when you're using a "regularizer".

Your loss is a sum of the "actual loss" (the one you defined in compile) and the regularization losses.

So, it's perfectly possible that your weights/activations are going down, thus your regularization loss is going down too, while the actual loss may be going up invisibly.
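One way to make the "actual loss" visible is to log it as a metric alongside the total loss. The sketch below uses a tiny stand-in model and random data (both are assumptions for illustration, not the model from the question). It adds `keras.metrics.BinaryCrossentropy` as a metric, which reports the cross-entropy alone, so the gap between `val_loss` and `val_bce` is the regularization penalty.

```python
import numpy as np
from tensorflow import keras

# Tiny stand-in model with an activity regularizer, only to show the logging setup.
inputs = keras.Input(shape=(8,))
x = keras.layers.Dense(
    4, activation="relu", activity_regularizer=keras.regularizers.l2(0.2)
)(inputs)
outputs = keras.layers.Dense(1, activation="sigmoid")(x)
model = keras.Model(inputs, outputs)

model.compile(
    optimizer="adam",
    loss="binary_crossentropy",
    # The "bce" metric is the cross-entropy without any regularization terms.
    metrics=["accuracy", keras.metrics.BinaryCrossentropy(name="bce")],
)

# Random data, used here only so the example runs end to end.
rng = np.random.default_rng(0)
x_data = rng.normal(size=(64, 8)).astype("float32")
y_data = rng.integers(0, 2, size=(64, 1)).astype("float32")

history = model.fit(x_data, y_data, validation_split=0.25, epochs=2, verbose=0)

# Per-epoch regularization penalty on the validation split.
penalty = [
    total - bce
    for total, bce in zip(history.history["val_loss"], history.history["val_bce"])
]
print(penalty)
```

If `val_bce` rises while `val_loss` keeps falling, the regularization term is what drives the total down.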

Also, accuracy and loss are not always well connected. Although your case seems extreme, sometimes the accuracy might stop improving while the loss keeps improving (especially in a case where the loss follows a strange logic).

So, some hints:

- Try this model without regularization and see what happens

- If you see the regularization was the problem and you still want it, decrease the regularization coefficients

- Create a callback to stop training a few epochs after the maximum validation accuracy

- Use losses that follow the accuracy relatively well (usually the standard losses do)

- Don't forget to check whether your validation data is in the "same scale" as the training data.
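For the callback hint above, the built-in `EarlyStopping` callback can probably do the job without a custom class. This is a minimal sketch, assuming the model was compiled with `metrics=["accuracy"]` so that `val_accuracy` is logged. The patience of 5 epochs is an arbitrary choice for illustration.

```python
from tensorflow import keras

# Stop once val_accuracy has not improved for `patience` epochs,
# then roll back to the weights from the best epoch.
stop_after_best_accuracy = keras.callbacks.EarlyStopping(
    monitor="val_accuracy",
    mode="max",
    patience=5,  # arbitrary; tune to how noisy the validation curve is
    restore_best_weights=True,
)

# Usage (model and data are whatever you already train with):
# model.fit(x_train, y_train, validation_data=(x_val, y_val),
#           epochs=100, callbacks=[stop_after_best_accuracy])
```

With `restore_best_weights=True`, the extra epochs trained after the accuracy peak are discarded. That addresses the worry about running past the best model, at the cost of not following `val_loss` to its minimum.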