I am trying to run code for defect detection on a gear, specifically to check whether the bolts in the gear are present or not, but I am having difficulty doing so.

The problem is that the SSIM value comes out wrong for the good gear as well as for the bad gear.

Also, if possible, please tell me how to detect the number of teeth in the gear.

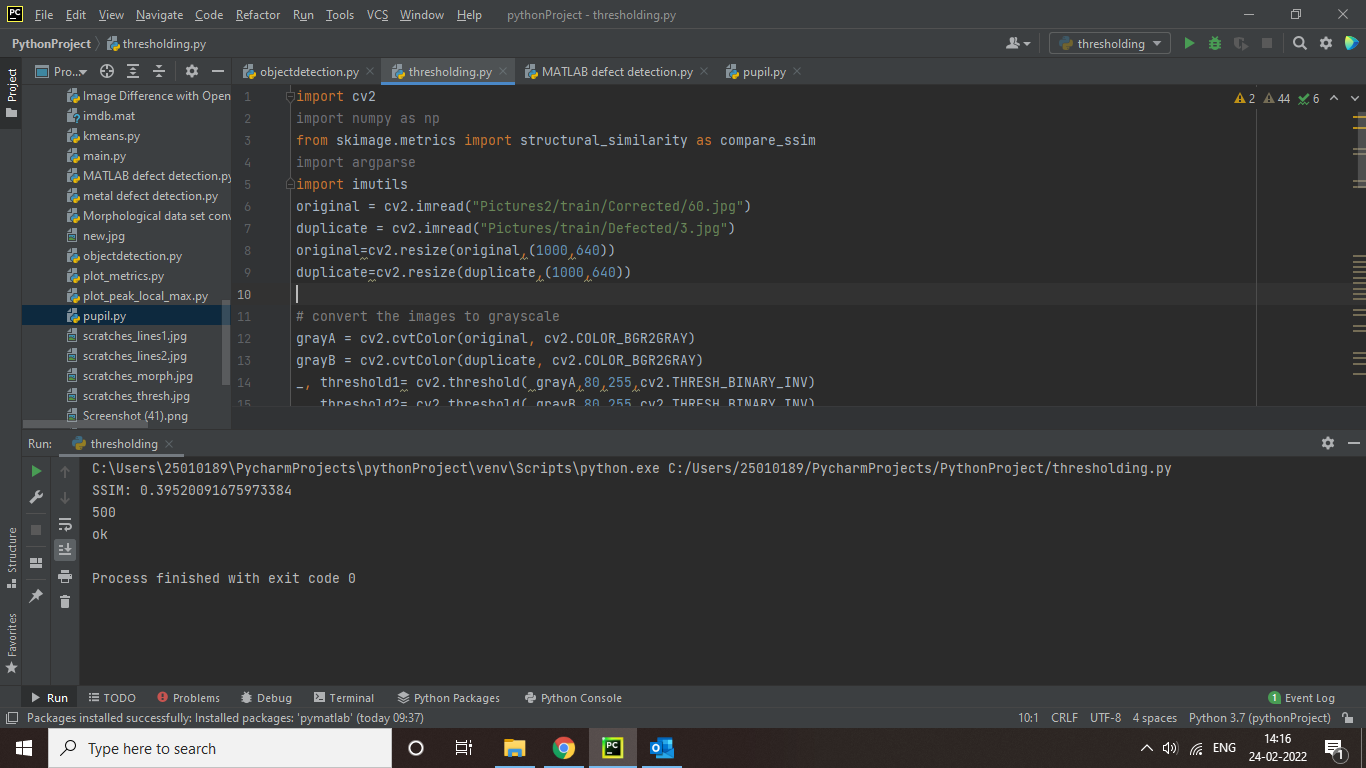

Here is the code:

    import cv2
    import numpy as np
    import imutils
    from skimage.metrics import structural_similarity as compare_ssim

    original = cv2.imread("Pictures2/train/Corrected/60.jpg")
    duplicate = cv2.imread("Pictures/train/Defected/3.jpg")
    original = cv2.resize(original, (1000, 640))
    duplicate = cv2.resize(duplicate, (1000, 640))

    # convert the images to grayscale
    grayA = cv2.cvtColor(original, cv2.COLOR_BGR2GRAY)
    grayB = cv2.cvtColor(duplicate, cv2.COLOR_BGR2GRAY)

    # binarize both images with a fixed threshold
    _, threshold1 = cv2.threshold(grayA, 80, 255, cv2.THRESH_BINARY_INV)
    _, threshold2 = cv2.threshold(grayB, 80, 255, cv2.THRESH_BINARY_INV)

    # compute the Structural Similarity Index (SSIM) between the two
    # images, ensuring that the difference image is returned
    (score, diff) = compare_ssim(threshold1, threshold2, full=True)
    # local SSIM values can be negative, so clip before converting to uint8
    diff = (np.clip(diff, 0, 1) * 255).astype("uint8")
    print("SSIM: {}".format(score))

    # threshold the difference image, followed by finding contours to
    # obtain the regions of the two input images that differ
    thresh = cv2.threshold(diff, 0, 255, cv2.THRESH_BINARY_INV | cv2.THRESH_OTSU)[1]
    cnts = cv2.findContours(thresh.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    cnts = imutils.grab_contours(cnts)

    # checking similarities using the ORB algorithm
    orb = cv2.ORB_create()
    kp_1, desc_1 = orb.detectAndCompute(threshold1, None)
    kp_2, desc_2 = orb.detectAndCompute(threshold2, None)
    matcher = cv2.BFMatcher(cv2.NORM_HAMMING)
    matches = matcher.knnMatch(desc_1, desc_2, k=2)

    # keep only matches that pass the ratio test
    good = []
    for pair in matches:
        # knnMatch can return fewer than two neighbours for a descriptor
        if len(pair) < 2:
            continue
        m, n = pair
        if m.distance < 0.7 * n.distance:
            good.append([m])

    final_image = cv2.drawMatchesKnn(threshold1, kp_1, threshold2, kp_2, good, None)
    final_image = cv2.resize(final_image, (1000, 640))
    print(len(matches))
    cv2.imshow("matches", final_image)

    if score > 0.20:
        print("ok")
    else:
        print("not ok")

    #cv2.imshow("Original", original)
    #cv2.imshow("Duplicate", duplicate)
    #cv2.imshow("Difference", diff)
    cv2.waitKey(0)
    cv2.destroyAllWindows()

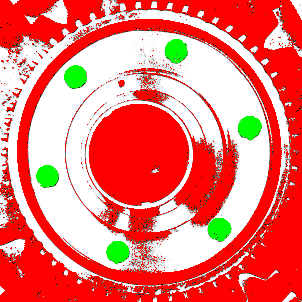

Input images: Corrected and Defected.

Screenshot of the output:

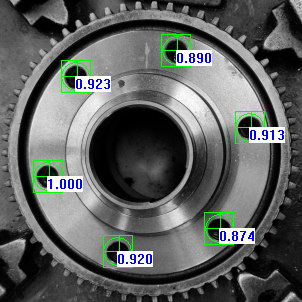

CodePudding user response:

On a binarized image, the missing bolts appear as nice circular shapes that are easy to detect by area and by circularity.

You can also detect the central hole and check each candidate's distance from it.
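A minimal sketch of the area, circularity and distance checks, not a tested solution for these images. The function names and every threshold (`min_area`, `min_circularity`, `pitch_radius`, `radius_tol`) are hypothetical and would need tuning. In practice the area and perimeter of each blob could come from `cv2.contourArea(contour)` and `cv2.arcLength(contour, True)` on the binarized image.

```python
import math


def circularity(area, perimeter):
    """Return 4*pi*A / P^2: about 1.0 for a circle, lower for other shapes."""
    if perimeter <= 0:
        return 0.0
    return 4.0 * math.pi * area / (perimeter * perimeter)


def is_empty_hole(area, perimeter, center, gear_center,
                  min_area=500.0, min_circularity=0.8,
                  pitch_radius=200.0, radius_tol=20.0):
    """Hypothetical check: the blob is large, round, and on the bolt circle.

    All default thresholds are assumptions chosen for illustration only.
    """
    if area < min_area:
        return False
    if circularity(area, perimeter) < min_circularity:
        return False
    # distance of the blob centre from the central hole of the gear
    dist = math.hypot(center[0] - gear_center[0], center[1] - gear_center[1])
    return abs(dist - pitch_radius) <= radius_tol


# An ideal circle of radius 30 px, located 200 px from the gear centre.
r = 30.0
round_blob = is_empty_hole(math.pi * r * r, 2 * math.pi * r,
                           (700.0, 320.0), (500.0, 320.0))
# A 60 px square at the same place has circularity pi/4 (about 0.785) and is rejected.
square_blob = is_empty_hole(60.0 * 60.0, 4 * 60.0,
                            (700.0, 320.0), (500.0, 320.0))
print(round_blob, square_blob)
```

Counting the blobs that pass this check would give the number of missing bolts, provided the thresholds fit the actual image scale.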

Another option is template matching with similarity score assessment.

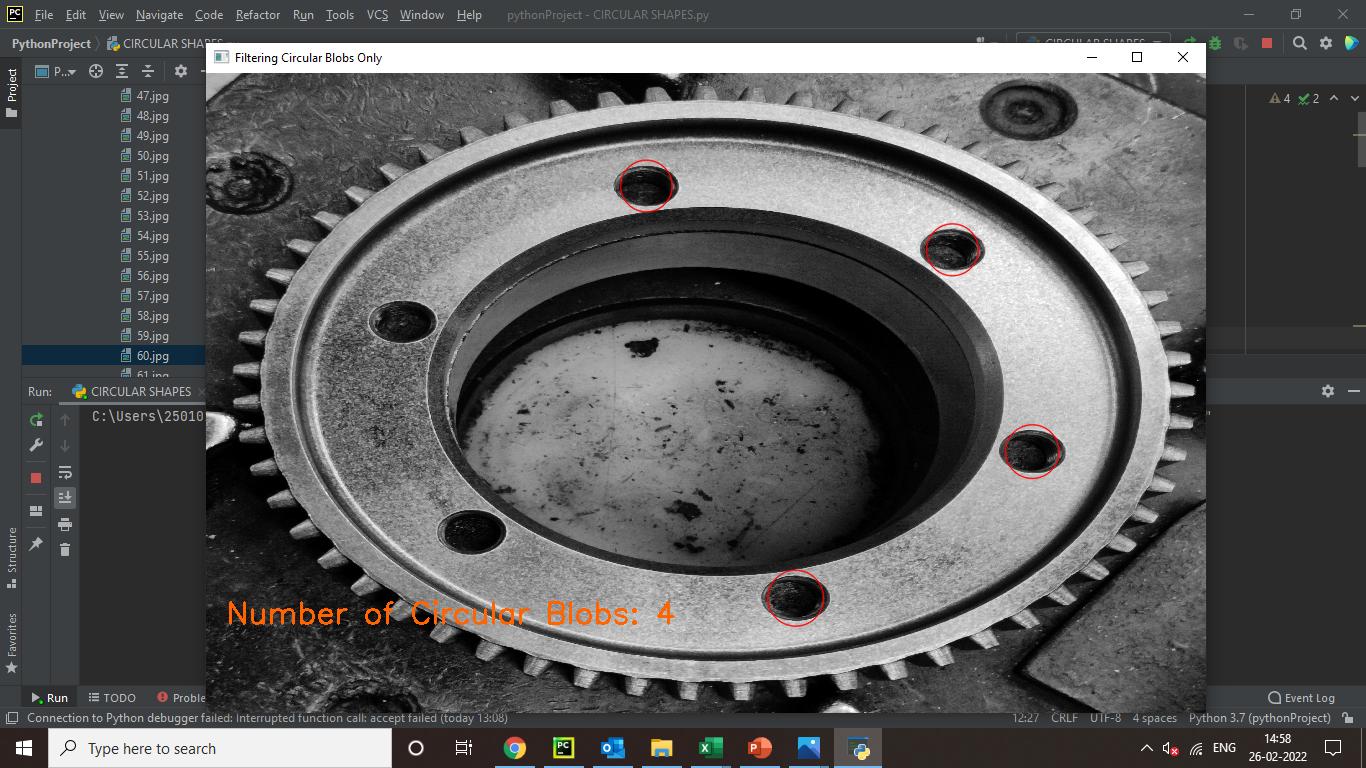
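For the template matching option, a rough sketch of a similarity score follows. It computes a zero-mean normalized cross-correlation between a patch and a template of the same size, which is, as far as I know, the same kind of score that `cv2.matchTemplate` with `cv2.TM_CCOEFF_NORMED` evaluates at every position. The synthetic patches, the assumption that a bolt looks brighter than an empty hole, and the name `ncc_score` are made up for illustration.

```python
import numpy as np


def ncc_score(patch, template):
    """Zero-mean normalized cross-correlation of two equal-sized arrays.

    Returns a value in [-1, 1]; 0.0 if either input has no contrast.
    """
    a = patch.astype(np.float64) - patch.mean()
    b = template.astype(np.float64) - template.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    if denom == 0:
        return 0.0
    return float((a * b).sum() / denom)


# Synthetic 41x41 patches: a disc of radius 15 px in the middle.
yy, xx = np.mgrid[0:41, 0:41]
disc = (xx - 20) ** 2 + (yy - 20) ** 2 <= 15 ** 2

# Assumption: a bolt appears as a bright disc, an empty hole as a dark one.
bolt_template = np.where(disc, 200, 50).astype(np.uint8)
patch_with_bolt = np.where(disc, 180, 60).astype(np.uint8)
patch_empty = np.where(disc, 10, 60).astype(np.uint8)

score_bolt = ncc_score(patch_with_bolt, bolt_template)
score_empty = ncc_score(patch_empty, bolt_template)
print(score_bolt, score_empty)
```

A bolt position could then be declared "ok" when the score exceeds some threshold; the right threshold depends on the real images and is not something this sketch can tell you.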

CodePudding user response:

I tried circular blob detection:

    import cv2
    import numpy as np

    # Load image as grayscale
    image = cv2.imread('Pictures2/train/Defected/30.jpg', 0)
    image = cv2.resize(image, (1000, 640))

    # Set our filtering parameters
    # Initialize parameter setting using cv2.SimpleBlobDetector
    params = cv2.SimpleBlobDetector_Params()

    # Set Area filtering parameters
    params.filterByArea = True
    params.minArea = 100

    # Set Circularity filtering parameters
    params.filterByCircularity = True
    params.minCircularity = 0.80

    # Set Convexity filtering parameters
    params.filterByConvexity = True
    params.minConvexity = 0.1

    # Set inertia filtering parameters
    params.filterByInertia = True
    params.minInertiaRatio = 0.01

    # Create a detector with the parameters
    detector = cv2.SimpleBlobDetector_create(params)

    # Detect blobs
    keypoints = detector.detect(image)

    # Draw blobs on our image as red circles
    blank = np.zeros((1, 1))
    blobs = cv2.drawKeypoints(image, keypoints, blank, (0, 0, 255),
                              cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)

    number_of_blobs = len(keypoints)
    text = "Number of Circular Blobs: " + str(number_of_blobs)
    cv2.putText(blobs, text, (20, 550),
                cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 100, 255), 2)

    # Show blobs
    cv2.imshow("Filtering Circular Blobs Only", blobs)
    cv2.waitKey(0)
    cv2.destroyAllWindows()

But I am not getting the desired output.

Input image

Screenshot of the output image

Here it should give 6 as the output, but it returned 4. Please help me out.

CodePudding user response:

If you use the same setup as the images in the question:

- camera,

- image resolution,

- illumination

then the Hough Circle Transform might be sufficient, after proper smoothing and with radius limits that match the expected hole size.

The code is as follows:

    #!/usr/bin/python3
    # -*- coding: utf-8 -*-
    import cv2
    import numpy as np


    def find_circles(img, min_radius=85, max_radius=100):
        # equalize contrast, smooth, binarize with Otsu, then smooth again
        equ = cv2.equalizeHist(img)
        k_smooth = 9
        gauss = cv2.GaussianBlur(equ, (k_smooth, k_smooth), 0)
        ret, otsu = cv2.threshold(gauss, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)
        blur = cv2.medianBlur(otsu, 21)
        # only circles within the given radius range are reported
        circles = cv2.HoughCircles(blur, cv2.HOUGH_GRADIENT, 1, 100,
                                   param1=200, param2=15,
                                   minRadius=min_radius, maxRadius=max_radius)
        return circles


    def draw(img, circles, counter):
        res = cv2.cvtColor(img, cv2.COLOR_GRAY2BGR)
        if circles is not None:
            idx = 1
            for i in circles[0, :]:
                # convert to plain ints for the drawing functions
                x, y, r = int(i[0]), int(i[1]), int(i[2])
                # draw the outer circle
                cv2.circle(res, (x, y), r, (0, 0, 255), 3)
                # draw the center of the circle
                cv2.circle(res, (x, y), 2, (255, 0, 0), 15)
                res = cv2.putText(res, str(idx), (x + 100, y + 100),
                                  cv2.FONT_HERSHEY_COMPLEX, 4, (255, 255, 255), 3, cv2.LINE_AA)
                idx += 1
        title = "There are {} holes without bolts.".format(counter)
        cv2.namedWindow(title, cv2.WINDOW_NORMAL)
        cv2.imshow(title, res)
        cv2.waitKey(0)
        cv2.destroyAllWindows()


    empty = cv2.imread("empty.jpg", 0)
    full = cv2.imread("full.jpg", 0)

    img = full
    circles = find_circles(img)
    if circles is not None:
        circles = np.uint16(np.around(circles))
        counter = len(circles[0, :])
    else:
        counter = 0
    draw(img, circles, counter)
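
The radius limits (85 to 100 pixels) only make sense for the image resolution used here. If the images are resized first, the limits need to be scaled by the same factor. A small sketch of that scaling follows; the helper name `scale_radius_bounds` and the widths in the example are hypothetical, not taken from the images in the question.

```python
import math


def scale_radius_bounds(min_radius, max_radius, original_width, new_width):
    """Scale Hough radius limits (in pixels) for a uniformly resized image.

    The lower limit is rounded down and the upper limit rounded up, so the
    scaled range never becomes narrower than the original one.
    """
    factor = new_width / original_width
    lo = max(1, math.floor(min_radius * factor))
    hi = max(lo + 1, math.ceil(max_radius * factor))
    return lo, hi


# Hypothetical example: a 4000 px wide image resized to 1000 px wide.
bounds = scale_radius_bounds(85, 100, 4000, 1000)
print(bounds)  # (21, 25)
```

The minimum distance between circle centers (100) and the median blur kernel size (21) are also expressed in pixels, so they would likely need the same kind of adjustment.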