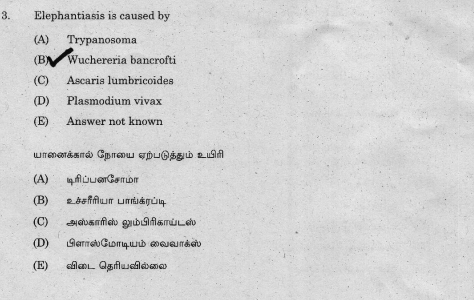

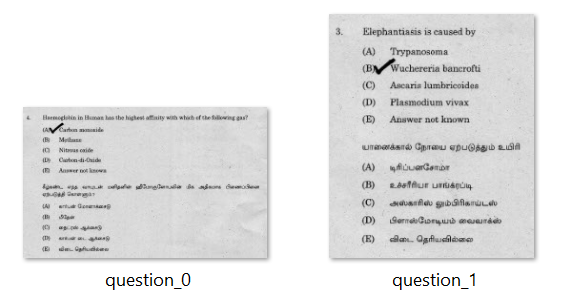

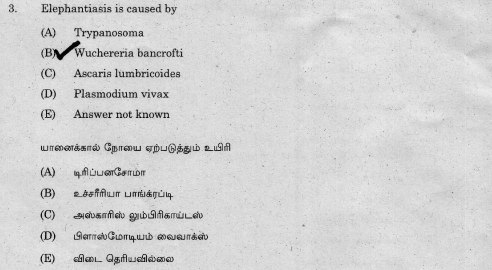

I am planning to take the questions from the page above and split them into individual components like this. How can I do that?

Answer:

This is a classic situation for

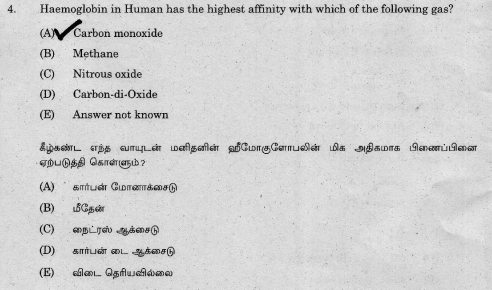

Here's where the interesting part happens. We assume that adjacent text/characters belong to the same question, so we merge the individual words into a single contour. A question is a group of words that are close together, so we dilate to connect them.
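Here is a minimal sketch of why dilation merges the words of one question. It uses plain NumPy instead of cv2.dilate so that it runs without OpenCV, and the helpers binary_dilate and count_components are hypothetical. The example has two "words" separated by a 2-pixel gap. Before dilation they form two 4-connected regions. After one pass with a 3x3 rectangular kernel they form a single region.

```python
import numpy as np
from collections import deque


def binary_dilate(mask: np.ndarray, kh: int, kw: int) -> np.ndarray:
    """Dilate a boolean mask with a kh x kw rectangular kernel (odd sizes assumed).
    Pixels outside the image are treated as background."""
    rh, rw = kh // 2, kw // 2
    padded = np.pad(mask, ((rh, rh), (rw, rw)), constant_values=False)
    out = np.zeros_like(mask)
    h, w = mask.shape
    # A pixel becomes foreground if any pixel under the kernel is foreground
    for dy in range(kh):
        for dx in range(kw):
            out |= padded[dy:dy + h, dx:dx + w]
    return out


def count_components(mask: np.ndarray) -> int:
    """Count 4-connected foreground regions with a breadth-first flood fill."""
    seen = np.zeros_like(mask)
    h, w = mask.shape
    count = 0
    for y in range(h):
        for x in range(w):
            if mask[y, x] and not seen[y, x]:
                count += 1
                seen[y, x] = True
                queue = deque([(y, x)])
                while queue:
                    cy, cx = queue.popleft()
                    for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                            seen[ny, nx] = True
                            queue.append((ny, nx))
    return count


# Two "words" on one text line, separated by a 2-pixel gap (columns 7 and 8)
text = np.zeros((10, 20), dtype=bool)
text[3:6, 2:7] = True    # word 1: columns 2..6
text[3:6, 9:14] = True   # word 2: columns 9..13

merged = binary_dilate(text, 3, 3)
print(count_components(text))    # → 2
print(count_components(merged))  # → 1
```

A wider gap, such as the one between two questions, stays separate unless the kernel or the number of iterations grows. That is why the (9,9) kernel and iterations=4 in the code below presumably have to match the spacing of this particular page.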

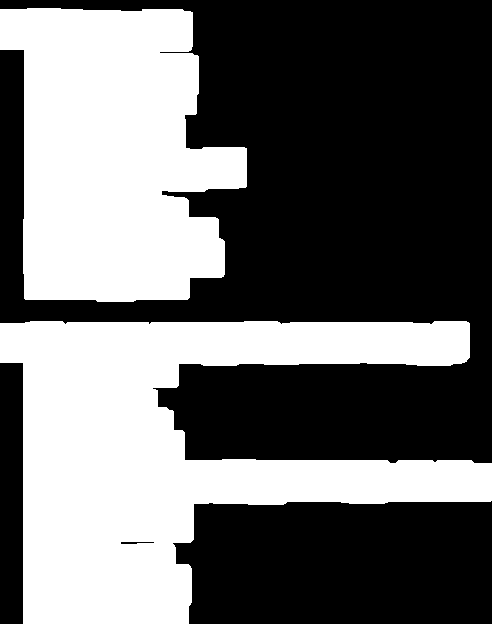

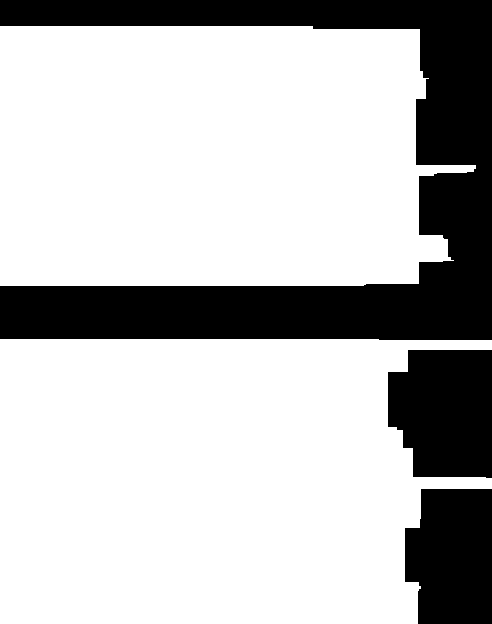

Individual questions highlighted in green

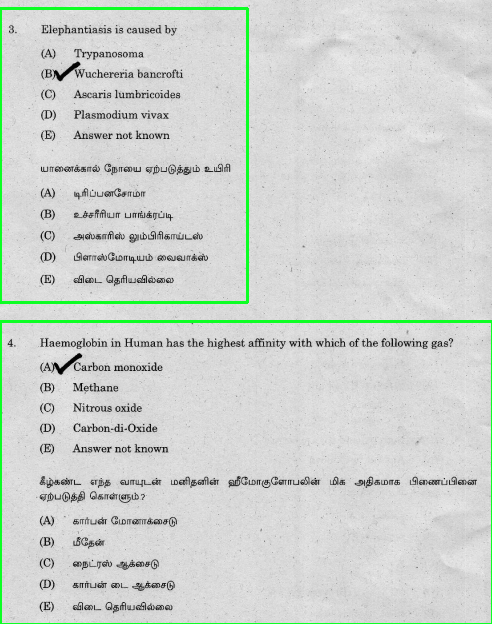

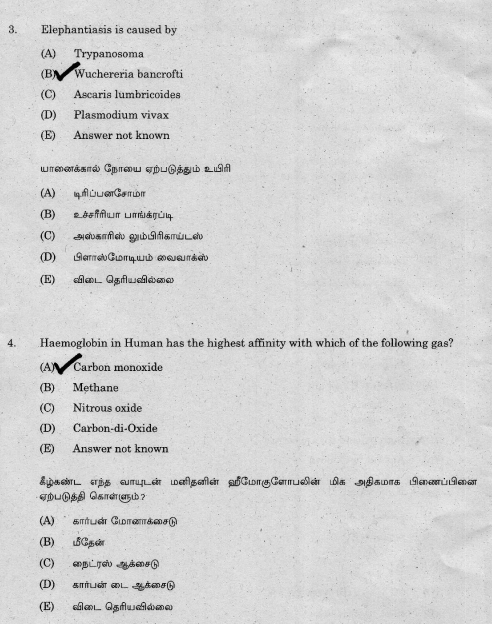

Extracted ROI questions

Code

import cv2
import numpy as np

# Load image, grayscale, Gaussian blur, Otsu's threshold
image = cv2.imread('1.png')
original = image.copy()
gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
blur = cv2.GaussianBlur(gray, (7,7), 0)
thresh = cv2.threshold(blur, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)[1]

# Create rectangular structuring element and dilate
kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (9,9))
dilate = cv2.dilate(thresh, kernel, iterations=4)

# Find contours and extract each question
cnts = cv2.findContours(dilate, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
# Handle the different return signatures of OpenCV 3 (3 values) and OpenCV 4 (2 values)
cnts = cnts[0] if len(cnts) == 2 else cnts[1]
question_number = 0
for c in cnts:
    # Get bounding box of each question, crop ROI, and save
    x,y,w,h = cv2.boundingRect(c)
    cv2.rectangle(image, (x, y), (x + w, y + h), (36,255,12), 2)
    question = original[y:y + h, x:x + w]
    cv2.imwrite('question_{}.png'.format(question_number), question)
    question_number += 1

cv2.imshow('thresh', thresh)
cv2.imshow('dilate', dilate)
cv2.imshow('image', image)
cv2.waitKey()
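The threshold call above passes 0 as the threshold together with cv2.THRESH_OTSU, so the threshold is chosen automatically. The NumPy sketch below illustrates what Otsu's method computes. It is not OpenCV's implementation. It scans every candidate threshold of an 8-bit histogram and keeps the one that maximizes the between-class variance. The function name otsu_threshold is hypothetical.

```python
import numpy as np


def otsu_threshold(gray: np.ndarray) -> int:
    """Return the threshold t that maximizes between-class variance for a uint8 image.
    Pixels <= t form one class, pixels > t the other."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()
    levels = np.arange(256)
    omega = np.cumsum(prob)          # weight of the dark class for each candidate t
    mu = np.cumsum(prob * levels)    # cumulative mean of the dark class (unnormalized)
    mu_total = mu[-1]                # mean intensity of the whole image
    # Between-class variance: (mu_total * omega - mu)^2 / (omega * (1 - omega))
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = np.where(denom > 0, (mu_total * omega - mu) ** 2 / denom, 0.0)
    return int(np.argmax(sigma_b))


# Two dark "ink" pixels and two bright "paper" pixels
gray = np.array([[10, 20, 200, 210]], dtype=np.uint8)
t = otsu_threshold(gray)
print(t)  # → 20
# Mimic THRESH_BINARY_INV: pixels <= t become 255 (text), the rest 0
binary_inv = np.where(gray > t, 0, 255).astype(np.uint8)
```

The inverted output makes the dark text the foreground (255). That is what the later dilation and contour steps expect.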

Answer:

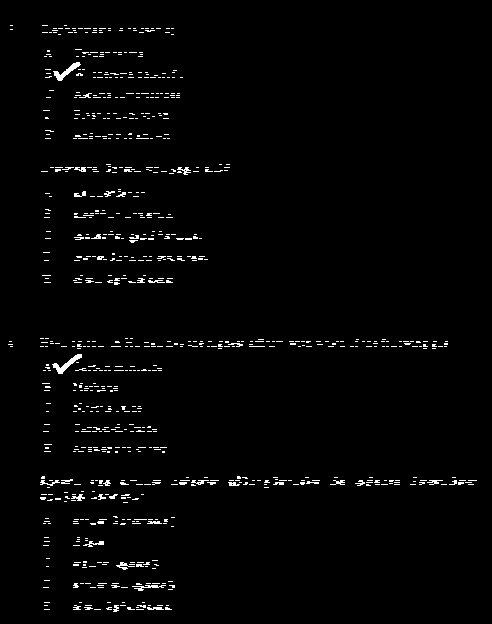

We may solve it using (mostly) morphological operations:

- Read the input image as grayscale.

- Apply thresholding with inversion. Automatic thresholding using cv2.THRESH_OTSU works well.

- Apply an opening morphological operation to remove small artifacts (using the kernel np.ones((1, 3))).

- Dilate horizontally with a very long horizontal kernel to turn the text lines into horizontal lines.

- Apply closing vertically to create two large clusters. The height of the vertical kernel should be tuned to the typical gap: large enough to bridge the gaps between lines of the same question, but smaller than the gap between questions.

- Find the connected components with statistics.

- Iterate over the connected components and crop the relevant area in the vertical direction.
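The horizontal kernel spans the full image width. As a result, the dilation step roughly marks every row that contains ink, and the vertical closing then bridges blank gaps shorter than the kernel height. The NumPy sketch below approximates the dilation, closing, and connected-component steps with a simple row projection. It is a simplification and not a pixel-exact reproduction of what OpenCV computes. The function name find_row_bands and the max_gap parameter are hypothetical; max_gap stands in for the 50-row closing kernel.

```python
import numpy as np


def find_row_bands(binary: np.ndarray, max_gap: int) -> list[tuple[int, int]]:
    """Return (top, height) for each vertical band of text rows.

    binary:  2-D array, nonzero where there is ink (after inverted thresholding).
    max_gap: blank runs shorter than this between two inked rows are bridged,
             similar in spirit to closing with a (max_gap, 1) vertical kernel.
    """
    has_ink = (binary != 0).any(axis=1)   # roughly what the full-width dilation gives
    rows = np.flatnonzero(has_ink)
    if rows.size == 0:
        return []
    bands = []
    top = prev = int(rows[0])
    for r in rows[1:]:
        r = int(r)
        if r - prev - 1 >= max_gap:       # blank gap too large: finish the current band
            bands.append((top, prev - top + 1))
            top = r
        prev = r
    bands.append((top, prev - top + 1))
    return bands


# Question 1 has two text lines (rows 2-3 and 6-7); question 2 starts at row 20
page = np.zeros((30, 10), dtype=np.uint8)
page[2:4, 1:8] = 255
page[6:8, 2:6] = 255
page[20:23, 0:9] = 255
print(find_row_bands(page, max_gap=5))   # → [(2, 6), (20, 3)]
```

With max_gap=20 the 12-row gap between the two questions would also be bridged, giving a single band. This mirrors why the vertical kernel size needs tuning.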

Complete code sample:

import cv2
import numpy as np

img = cv2.imread('scanned_image.png', cv2.IMREAD_GRAYSCALE)  # Read image as grayscale
thresh = cv2.threshold(img, 0, 255, cv2.THRESH_OTSU + cv2.THRESH_BINARY_INV)[1]  # Apply automatic thresholding with inversion.
thresh = cv2.morphologyEx(thresh, cv2.MORPH_OPEN, np.ones((1, 3), np.uint8))  # Apply opening morphological operation for removing small artifacts.
thresh = cv2.dilate(thresh, np.ones((1, img.shape[1]), np.uint8))  # Dilate horizontally - make horizontal lines out of the text.
thresh = cv2.morphologyEx(thresh, cv2.MORPH_CLOSE, np.ones((50, 1), np.uint8))  # Apply closing vertically - create two large clusters
nlabel, labels, stats, centroids = cv2.connectedComponentsWithStats(thresh, 4)  # Find connected components with statistics

parts_list = []

# Iterate connected components (label 0 is the background):
for i in range(1, nlabel):
    top = int(stats[i, cv2.CC_STAT_TOP])  # Get the topmost y coordinate of the connected component
    height = int(stats[i, cv2.CC_STAT_HEIGHT])  # Get the height of the connected component
    roi = img[max(top - 5, 0):top + height + 5, :]  # Crop the relevant part of the image (5 extra rows at top and bottom, clamped at the image border).
    parts_list.append(roi.copy())  # Add the cropped area to a list
    cv2.imwrite(f'part{i}.png', roi)  # Save the image part for testing
    cv2.imshow(f'part{i}', roi)  # Show part for testing

# Show image and thresh for testing
cv2.imshow('img', img)
cv2.imshow('thresh', thresh)
cv2.waitKey()
cv2.destroyAllWindows()
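cv2.connectedComponentsWithStats returns four values: the number of labels, a label image, an N x 5 stats array, and the centroids. Row i of stats holds CC_STAT_LEFT, CC_STAT_TOP, CC_STAT_WIDTH, CC_STAT_HEIGHT and CC_STAT_AREA for label i. Label 0 is the background, which is why the loop starts at 1. The sketch below recomputes those columns from a label image with NumPy and then crops padded full-width bands. The padding is clamped at 0, because a negative start index in a Python slice counts from the end of the array. The helpers bbox_stats and crop_bands are hypothetical and only mirror the layout of OpenCV's stats array.

```python
import numpy as np

# Column order of OpenCV's stats array (cv2.CC_STAT_LEFT ... cv2.CC_STAT_AREA)
LEFT, TOP, WIDTH, HEIGHT, AREA = range(5)


def bbox_stats(labels: np.ndarray, nlabel: int) -> np.ndarray:
    """Return an (nlabel, 5) array of [left, top, width, height, area] per label."""
    stats = np.zeros((nlabel, 5), dtype=np.int32)
    for i in range(nlabel):
        ys, xs = np.nonzero(labels == i)
        if ys.size == 0:
            continue
        stats[i] = [xs.min(), ys.min(), xs.max() - xs.min() + 1,
                    ys.max() - ys.min() + 1, ys.size]
    return stats


def crop_bands(img: np.ndarray, stats: np.ndarray, pad: int = 5) -> list:
    """Crop full-width row bands for labels 1..N-1, padded by `pad` rows on each side."""
    parts = []
    for i in range(1, stats.shape[0]):  # skip label 0, the background
        top, height = int(stats[i, TOP]), int(stats[i, HEIGHT])
        # max(..., 0) keeps a band near the top edge from wrapping to the end
        parts.append(img[max(top - pad, 0):top + height + pad, :])
    return parts


# Two labelled regions on a 12 x 6 image; label 1 starts at row 1, near the border
labels = np.zeros((12, 6), dtype=np.int32)
labels[1:4, :] = 1
labels[8:10, 1:5] = 2
stats = bbox_stats(labels, 3)
parts = crop_bands(np.arange(72).reshape(12, 6), stats)
print(stats[1].tolist(), stats[2].tolist())  # → [0, 1, 6, 3, 18] [1, 8, 4, 2, 8]
print([p.shape for p in parts])              # → [(9, 6), (9, 6)]
```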

Results: