How to replace incorrect column values (values with characters like * or #) with null?

CodePudding user response:

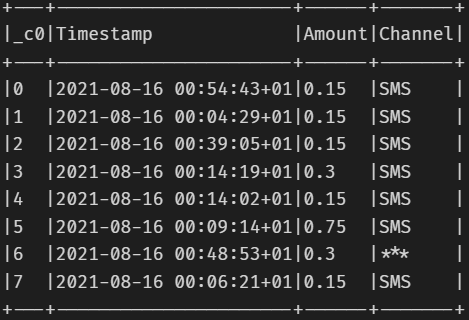

Test dataset:

df = spark.createDataFrame(

[(10, '2021-08-16 00:54:43 01', 0.15, 'SMS'),

(11, '2021-08-16 00:04:29 01', 0.15, '*'),

(12, '2021-08-16 00:39:05 01', 0.15, '***')],

['_c0', 'Timestamp', 'Amount','Channel']

)

df.show(truncate=False)

# --- ---------------------- ------ -------

# |_c0|Timestamp |Amount|Channel|

# --- ---------------------- ------ -------

# |10 |2021-08-16 00:54:43 01|0.15 |SMS |

# |11 |2021-08-16 00:04:29 01|0.15 |* |

# |12 |2021-08-16 00:39:05 01|0.15 |*** |

# --- ---------------------- ------ -------

Script:

from pyspark.sql import functions as F

df = df.withColumn('Channel', F.when(~F.col('Channel').rlike(r'[\*#] '), F.col('Channel')))

df.show(truncate=False)

# --- ---------------------- ------ -------

# |_c0|Timestamp |Amount|Channel|

# --- ---------------------- ------ -------

# |10 |2021-08-16 00:54:43 01|0.15 |SMS |

# |11 |2021-08-16 00:04:29 01|0.15 |null |

# |12 |2021-08-16 00:39:05 01|0.15 |null |

# --- ---------------------- ------ -------

CodePudding user response:

So You have multiple choices:

First option is the use the when function to condition the replacement for each character you want to replace:

example: when function

Second option is to use the replace function.

example: replace function

third option is to use regex_replace to replace all the characters with null value

example: regex_replace function