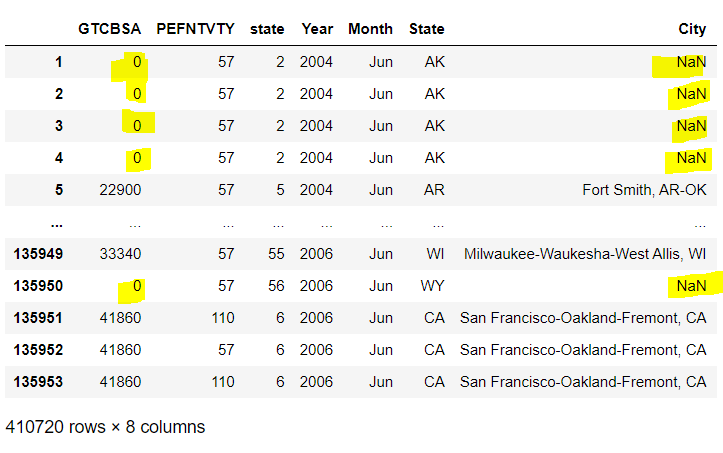

How does everyone deal with missing values in dataframe? I created a dataframe by using a Census Web Api to get the data. The 'GTCBSA' variable provides the City information which is required for me to use it for (plotly and dash) and I found that there is a lot of missing values in the data. Do I just leave it blank and continue with my data visualization? The following is my variable

CodePudding user response:

There are different ways of dealing with missing data depending on the use case and the type of data that is missing. For example, for a near-continuous stream of timeseries signals data with some missing values, you can attempt to fill the missing values based on nearby values by performing some type of interpolation.

However, in your case, the missing values are cities and the rows are all independent (e.g. each row is a different respondent). As far as I can tell, you don't have any way to reasonably infer the city for the rows where the city is missing so you'll have to drop these rows from consideration.

I am not an expert in the data collection method(s) used by the US census, but from this source, it seems like there are multiple methods used so I can see how it might be possible that the city of the respondent isn't known (the online tool might not be able to obtain the city of the respondent, or perhaps the respondent declined to state their city). Missing data is a very common issue.

However, before dropping all of rows with missing cities, you might do a brief check to see if there is any pattern (e.g. are the rows with missing cities predominantly from one state, for example?). If you are doing any state-level analysis, you could keep the rows with missing cities.