I have a collection that contains more than 5 million records. I need to query it and send the data to the client as a CSV file. Initially, when the number of records was low, we simply looped over the results, wrote them into a file, and sent that file to the client. But as the record count keeps growing, this consumes too much memory and we are running into problems. So, to use streams instead, I tried the following:

```javascript
const cursor = Model.find(query)

const transformer = (doc) => {
  return {
    "Creator": doc.Entity_Creator,
    "Action Date" : doc.E_Approve_Time,
    "Remarks": doc.Entity_Comment
  }
}

let pat = path.join(__dirname, '../')
var csvFilePath = '././downloads/' + ' Approved.csv'
const filename = pat + csvFilePath

res.setHeader('Content-disposition',`attachment;filename=${filename}`);
res.writeHead(200,{ 'Content-Type': 'text/csv'})
res.flushHeaders();

var csvStream = fastCsv.format({headers: true}).transform(transformer)
cursor.stream().pipe(csvStream).pipe(res)
```

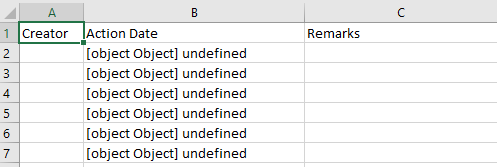

But after downloading, the file contains `[object Object]` in a single column. How can I resolve this?

Answer:

Use this:

```javascript
const fastCsv = require("fast-csv");

// Map one MongoDB document to one CSV row; the keys become the CSV headers
const transformer = (doc) => ({
  Creator: doc.Entity_Creator,
  "Action Date": doc.E_Approve_Time,
  Remarks: doc.Entity_Comment,
});

// Send only a file name; a server-side path does not belong in this header
res.setHeader("Content-Disposition", "attachment; filename=Approved.csv");
res.writeHead(200, { "Content-Type": "text/csv" });
res.flushHeaders();

const csvStream = fastCsv.format({ headers: true }).transform(transformer);

// Send the CSV to the HTTP response (not process.stdout) as it is produced
csvStream.pipe(res);

// .lean().cursor() reads plain documents one at a time, whereas exec() would
// load every matching document into memory at once
const cursor = Model.find(query).lean().cursor();

cursor.on("data", (doc) => {
  // Write the raw document: the transformer above maps it to a row exactly once
  if (!csvStream.write(doc)) {
    // Respect backpressure: stop reading until the CSV stream has drained
    cursor.pause();
    csvStream.once("drain", () => cursor.resume());
  }
});

cursor.on("error", (err) => {
  console.log(err);
  console.log("error returned");
  res.destroy(err);
});

// End the CSV only after the last document has been written
cursor.on("end", () => csvStream.end());
```
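Both snippets rely on one idea: pass documents through a stream one at a time instead of collecting them all first. The sketch below shows that idea with only Node's built-in `stream` module, so it runs without MongoDB. `Readable.from()` stands in for the Mongoose cursor, an in-memory `Writable` stands in for `res`, and a small hand-rolled CSV quoting function replaces fast-csv. `quoteField`, `toCsvStream`, and `collectCsv` are hypothetical names; this is an illustration under those assumptions, not fast-csv's implementation.

```javascript
// Sketch only: Node.js stdlib, no Mongoose or fast-csv. Readable.from() stands in
// for the Mongoose query cursor, and the in-memory sink stands in for `res`.
// quoteField, toCsvStream and collectCsv are hypothetical helper names.
const { Readable, Transform, Writable } = require("stream");
const { pipeline } = require("stream/promises");

const HEADERS = ["Creator", "Action Date", "Remarks"];

// RFC 4180-style quoting: wrap a field in double quotes (doubling any embedded
// quotes) when it contains a comma, a double quote, or a line break.
function quoteField(value) {
  const s = value == null ? "" : String(value);
  return /[",\r\n]/.test(s) ? `"${s.replace(/"/g, '""')}"` : s;
}

// Objects in, text out: each document becomes exactly one CSV line, so memory
// use stays flat no matter how many documents the source yields.
function toCsvStream() {
  let headerWritten = false;
  return new Transform({
    writableObjectMode: true,
    transform(doc, _encoding, callback) {
      let out = "";
      if (!headerWritten) {
        out += HEADERS.join(",") + "\n";
        headerWritten = true;
      }
      const row = [doc.Entity_Creator, doc.E_Approve_Time, doc.Entity_Comment];
      out += row.map(quoteField).join(",") + "\n";
      callback(null, out);
    },
    flush(callback) {
      // Still emit the header row when the source produced no documents
      if (!headerWritten) this.push(HEADERS.join(",") + "\n");
      callback();
    },
  });
}

// Runs source -> CSV -> sink; pipeline() applies backpressure between stages
// and rejects if any stage fails.
async function collectCsv(docs) {
  let output = "";
  const sink = new Writable({
    write(chunk, _encoding, callback) {
      output += chunk.toString();
      callback();
    },
  });
  await pipeline(Readable.from(docs), toCsvStream(), sink);
  return output;
}
```

In a route handler, assuming a Mongoose version whose `QueryCursor` is a Node `Readable`, the same shape would be `await pipeline(Model.find(query).lean().cursor(), toCsvStream(), res)`. Using `pipeline()` rather than chained `.pipe()` calls means an error in any stage rejects the promise instead of leaving the response hanging.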