I am using PostgreSQL 13 and has intermediate level experience with PostgreSQL.

I have a table named tbl_employee. it stores employee details for number of customers.

Below is my table structure, followed by datatype and index access method

Column | Data Type | Index name | Idx Access Type

------------- ----------------------------- --------------------------- ---------------------------

id | bigint | |

name | character varying | |

customer_id | bigint | idx_customer_id | btree

is_active | boolean | idx_is_active | btree

is_delete | boolean | idx_is_delete | btree

I want to delete employees for specific customer by customer_id.

In my table i have total 18,00,000 records.

When i execute below query for customer_id 1001 it will returns 85,000

SELECT COUNT(*) FROM tbl_employee WHERE customer_id=1001;

And when i perform delete operation on that customer_id then it will take 2:45 Hours to delete records for that customer_id.

Below is the query which i execute for delete records for that customer

DELETE FROM tbl_employee WHERE customer_id=1001

Problem

My concern is that this query should take less than 1 min for customer_id 1001. So is there any way we can optimize and reduce the execution time?

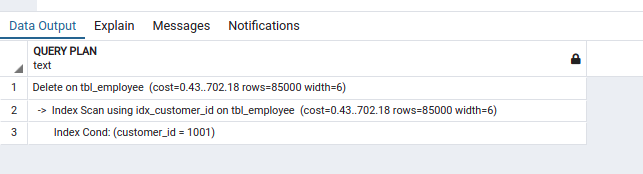

Below is Explain the query plan

UPDATE

I also have checked show seq_page_cost; and it is returning

seq_page_cost

1 (1 row)

And also show random_page_cost ; random_page_cost

4 (1 row)

Please guide. Thanks

CodePudding user response:

During :

DELETE FROM tbl_employee WHERE customer_id=1001

Is there any other operation accessing this table? If only this SQL accessing this table, I don't think it will take so much time.

CodePudding user response:

In RDBMS systems each SQL statement is also a transaction, unless it's wrapped in BEGIN; and COMMIT; to make multi-statement transactions.

It's possible your multirow DELETE statement is generating a very large transaction that's forcing PostgreSQL to thrash -- to spill its transaction logs from RAM to disk.

You can try repeating this statement until you've deleted all the rows you need to delete:

DELETE FROM tbl_employee WHERE customer_id=1001 LIMIT 1000;

Doing it this way will keep your transactions smaller, and may avoid the thrashing.

CodePudding user response:

SQL: DELETE FROM tbl_employee WHERE customer_id=1001 LIMIT 1000;

will not work then.

To make the batch delete smaller, you can try this:

DELETE FROM tbl_employee WHERE ctid IN (SELECT ctid FROM tbl_employee where customer_id=1001 limit 1000)

Until there is nothing to delete. Here the "ctid" is an internal column of Postgresql Tables. It can locate the rows.