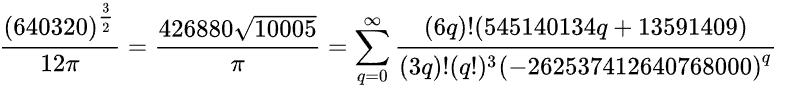

I am trying to teach myself Chudnovsky's algorithm using Python and this wikipedia page:

I tried to code up the equation on the far right that is using the Sigma symbol. I am familiar with Python but am not that great at math. The goal I set for myself is to see if I can accurately print out at least 100 digits of pi.

There are 5 sets of parentheses in the formula so I tried to code up each of the 5 different components. I also wrote a function that does factorials because factorials are used in 3 of the 5 components/parentheses.

Here's my 23 lines of working code, can someone please help me understand why it does not ACCURATELY go to 100 digits? It accurately goes to the 28th digit: 3.1415926535897932384626433832. Then for the 29th digit it says 8 but it should be 7...

import math

from decimal import *

def factorial(n):

if n == 0:

return 1

memory = n

for i in range(1, n):

memory *= i

return memory

iterations = 500

_sum = 0

#here's the Sigma part

for q in range(0, iterations):

a = factorial(6*q)

b = (545140134*q) 13591409

c = factorial(3*q)

d = (factorial(q))**3

e = (-262537412640768000)**q

numerator = (a*b)

denominator = (c*d*e)

_sum = Decimal(numerator / denominator)

#ensures that you get 100 digits for pi

getcontext().prec = 100

sq = Decimal(10005).sqrt()

overPI = Decimal(426880 * sq)

pi = (overPI) * (Decimal(1 / _sum))

print("Pi is", pi)

Thank you for any assistance that you're able to provide.

CodePudding user response:

Set the decimal precision at the start of the file so that it is applied to all subsequent operations:

from decimal import * getcontext().prec = 100Convert either or both operands to

Decimalbefore dividing or multiplying:# bad, float to decimal -> loss of precision _sum = Decimal(numerator / denominator) # better, precision preserved _sum = Decimal(numerator) / Decimal(denominator)

Result - accurate to 98 d.p. (100 s.f. minus rounding error):

# 3.1415926535897932384626433832795028841971693993751058209749445923078164062862089986280348253421170679

Pi is 3.141592653589793238462643383279502884197169399375105820974944592307816406286208998628034825342117069