I am using OpenCV homography to stitch images together. How can I remove the negative space between the images, as below?

Answer:

If you are willing to use a non-OpenCV solution, you can use ImageMagick 7's `-trim` (or Python Wand, which uses ImageMagick). You can call the ImageMagick command line below from Python using `subprocess.call`.


```shell
magick stitched_panorama.png -background black -define trim:percent-background=0% -fuzz 5% -trim +repage stitched_panorama_trimmed.png
```
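A minimal sketch of calling that command from Python, assuming ImageMagick 7's `magick` executable is on the `PATH`. It uses `subprocess.run(..., check=True)`, the newer counterpart of `subprocess.call` that raises an exception if the command fails. The helper names `build_trim_command` and `trim_with_imagemagick` are hypothetical.

```python
import subprocess


def build_trim_command(src, dst, fuzz="5%"):
    """Return the ImageMagick 7 trim command above as an argument list (no shell quoting needed)."""
    return [
        "magick", src,
        "-background", "black",
        "-define", "trim:percent-background=0%",
        "-fuzz", fuzz,      # tolerance for "almost black" pixels
        "-trim", "+repage",  # trim, then reset the virtual canvas offset
        dst,
    ]


def trim_with_imagemagick(src, dst, fuzz="5%"):
    """Run the trim command; raises CalledProcessError if magick exits with an error."""
    subprocess.run(build_trim_command(src, dst, fuzz), check=True)


# Usage: trim_with_imagemagick("stitched_panorama.png", "stitched_panorama_trimmed.png")
```

Passing a list instead of a single string avoids shell quoting issues with the `%` signs.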

Answer:

Suggested solution using OpenCV:

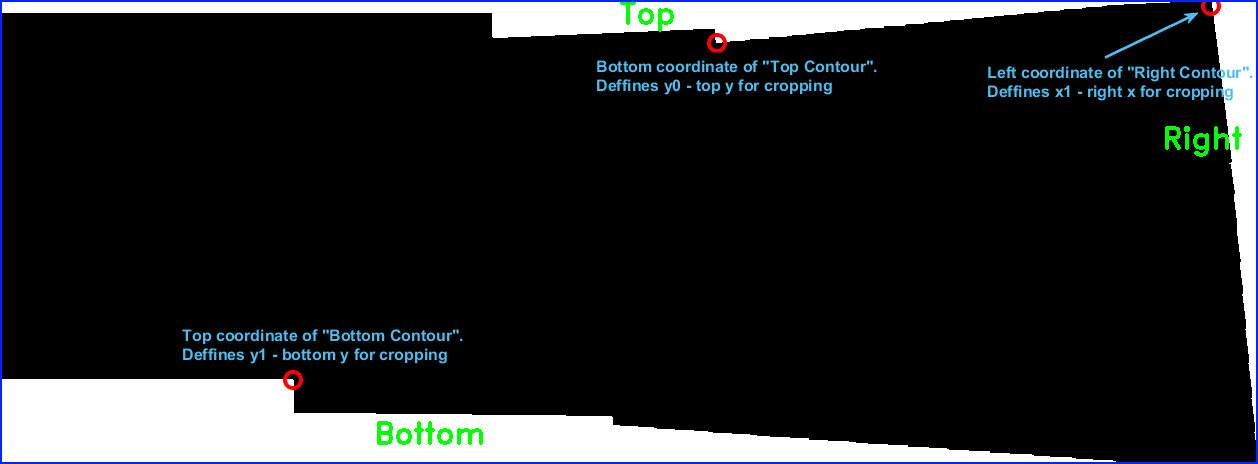

Assume `(x0, y0)` and `(x1, y1)` are the top-left and bottom-right coordinates of the region to crop (the target rectangle without black margins).

Split the black margins into 4 (or fewer) contours:

- "Top contour": the upper black area. The "Top contour" defines the `y0` coordinate.

- "Bottom contour": the bottom black area. The "Bottom contour" defines the `y1` coordinate.

- "Right contour": the right black area. The "Right contour" defines the `x1` coordinate.

- "Left contour": does not exist in the above image (so we have only 3 contours). The "Left contour" (if it exists) defines the `x0` coordinate.

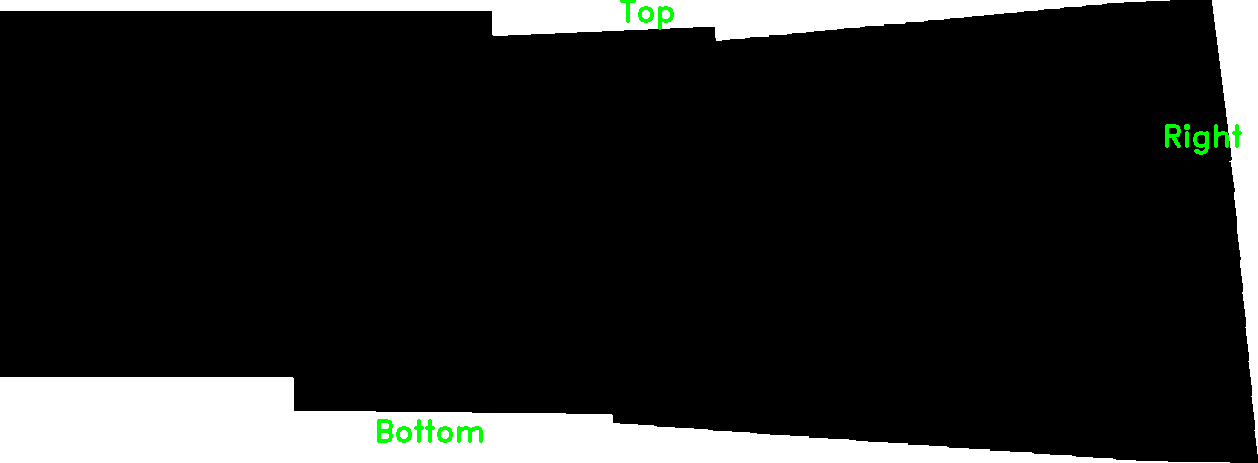

Building a binary "mask" with zeros at the margins and white at the "image part":

We want to build the following binary image:

The sample image you have posted makes it a bit challenging.

There is no "clear cut" separating the black margins from the image.

There are artifacts that look like JPEG compression artifacts, which make the procedure more difficult.

Suggestion for making it simpler:

Instead of stitching images in BGR pixel format, stitch images in BGRA pixel format.

Convert the pixels (of the original set of images) from BGR to BGRA, where "A" is an alpha (transparency) channel, filled with 255 (fully opaque).

After stitching the images, the alpha channel of the output should be 255 where pixels are part of an image, and zero in the "black" margins.

The alpha channel of the output gives us the desired binary mask "for free".

For the above sample image, we may use the following stages:

- Convert from BGR to grayscale and apply a threshold. The threshold was manually set to `10` by trial and error (with no artifacts it should be `1`).

- Apply an opening morphological operation to remove the remaining artifacts.

- Find the contour and fill it with white (255) color (filling "holes": pixels inside the "active area" that are below the threshold).

Cropping the rectangle with minimum area:

For separating the background into 4 (or fewer) contours, we have to make sure that the "black regions" are well split (not connected).

For that purpose we may crop the rectangle with minimum area:

- Find contours (there should be only one).

- Find the rectangle with minimum area.

- Crop the bounding box of the rectangle with minimum area.

Finding and analyzing the 4 (or fewer) contours:

- Get the inverse threshold: `inv_thresh = 255 - thresh`.

- Find contours once more. Now we have up to 4 contours (3 in our case): "Top", "Bottom" and "Right".

- Iterate the contours, and identify the left, right, top and bottom ones. For each contour, find the bounding rectangle `(x, y, w, h) = cv2.boundingRect(c)`:

  - If `x == 0` and `h > w`, we found the "Left contour".
  - If `x + w == image_width` and `h > w`, we found the "Right contour".
  - If `y == 0` and `w > h`, we found the "Top contour".
  - If `y + h == image_height` and `w > h`, we found the "Bottom contour".

Note: there may be rare cases where the above heuristics fail; for a perfect solution we may need to compare each contour with all the other contours (a kind of sorting).
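The four rules can be isolated into plain functions that work on `(x, y, w, h)` tuples as returned by `cv2.boundingRect`, which makes them easy to test without images. This is a sketch; `classify_margin` and `find_crop_limits` are hypothetical names, and the checks run in the same order as the `if`/`elif` chain in the code sample below.

```python
def classify_margin(x, y, w, h, img_w, img_h):
    """Label a margin contour by its bounding box: 'Left', 'Right', 'Top', 'Bottom' or None."""
    if x == 0 and h > w:
        return 'Left'
    if x + w == img_w and h > w:
        return 'Right'
    if y == 0 and w > h:
        return 'Top'
    if y + h == img_h and w > h:
        return 'Bottom'
    return None


def find_crop_limits(rects, img_w, img_h):
    """Return (x0, y0, x1, y1) of the area without black margins, given the
    margin bounding boxes rects (a list of (x, y, w, h) tuples)."""
    x0, y0, x1, y1 = 0, 0, img_w, img_h
    for (x, y, w, h) in rects:
        side = classify_margin(x, y, w, h, img_w, img_h)
        if side == 'Left':
            x0 = w  # right edge of the left margin
        elif side == 'Right':
            x1 = x  # left edge of the right margin
        elif side == 'Top':
            y0 = h  # bottom edge of the top margin
        elif side == 'Bottom':
            y1 = y  # top edge of the bottom margin
    return x0, y0, x1, y1
```

For a 200x100 image with a top strip `(0, 0, 200, 8)`, a bottom strip `(30, 95, 170, 5)` and a right strip `(190, 10, 10, 80)`, `find_crop_limits` returns `(0, 8, 190, 95)`.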

Code sample:

The following code sample also writes "Left", "Right", "Top" and "Bottom" as text on a sketch image (for testing):

```python
import numpy as np
import cv2

img = cv2.imread('stitched.png')  # Read input image
if img is None:
    raise FileNotFoundError("Could not read 'stitched.png'")

# Build a mask with zeros where pixels are black borders, and 255 otherwise.
# Use threshold=10 instead of 1, and use an opening operation, due to JPEG compression artifacts.
thresh = cv2.threshold(cv2.cvtColor(img, cv2.COLOR_BGR2GRAY), 10, 255, cv2.THRESH_BINARY)[1]
thresh = cv2.morphologyEx(thresh, cv2.MORPH_OPEN, np.ones((3, 3), np.uint8))

# Find contours (there should be only one).
cnts = cv2.findContours(thresh, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)[-2]  # Use index -2 for OpenCV 3 and 4 compatibility.
c = max(cnts, key=cv2.contourArea)  # Take the largest contour, in case small artifacts survived the opening.
cv2.drawContours(thresh, [c], 0, 255, -1)  # Fill the contour with 255 (fill dark pixels under the threshold inside the contour).

# Find the rectangle with minimum area.
rect = cv2.minAreaRect(c)
box = cv2.boxPoints(rect).astype(np.intp)  # Find the 4 corners and convert from floating point values to int.

# Crop the bounding box of the rectangle with minimum area (crop both img and thresh).
# Clip to the image: boxPoints may return coordinates slightly outside it, and a negative start index would wrap around.
(topy, topx) = (max(np.min(box[:, 1]), 0), max(np.min(box[:, 0]), 0))  # https://stackoverflow.com/questions/28759253/how-to-crop-the-internal-area-of-a-contour
(boty, botx) = (min(np.max(box[:, 1]), img.shape[0] - 1), min(np.max(box[:, 0]), img.shape[1] - 1))
img = img[topy:boty+1, topx:botx+1, :]
thresh = thresh[topy:boty+1, topx:botx+1]

inv_thresh = 255 - thresh  # Inverse of thresh.
cnts = cv2.findContours(inv_thresh, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)[-2]
inv_thresh_bgr = cv2.cvtColor(inv_thresh, cv2.COLOR_GRAY2BGR)  # inv_thresh_bgr is used for testing.

# Initialize the top-left and bottom-right coordinates to crop (updated below to the area without black borders).
x0, y0 = 0, 0
x1, y1 = img.shape[1], img.shape[0]

# Iterate the contours:
for c in cnts:
    (x, y, w, h) = cv2.boundingRect(c)  # Get the contour bounding box.
    M = cv2.moments(c)  # Compute the center of the contour (used only for placing the test labels).
    (cx, cy) = (int(M["m10"] / M["m00"]), int(M["m01"] / M["m00"])) if M["m00"] != 0 else (x + w // 2, y + h // 2)  # Fall back to the box center for zero-area contours.

    if (x == 0) and (h > w):
        # Enter here if "c" is the "Left contour" (for the given sample input, the code should not reach here).
        x0 = w  # x0 is defined by the left contour (x0 is the extreme right of the left contour).
        cv2.putText(inv_thresh_bgr, 'Left', (cx, cy), cv2.FONT_HERSHEY_DUPLEX, 1, (0, 255, 0), 2)  # Draw text for testing
    elif ((x + w) == img.shape[1]) and (h > w):
        # Enter here if "c" is the "Right contour".
        x1 = x  # x1 is defined by the right contour (x1 is the extreme left of the right contour).
        cv2.putText(inv_thresh_bgr, 'Right', (cx - 80, cy), cv2.FONT_HERSHEY_DUPLEX, 1, (0, 255, 0), 2)  # Draw text for testing
    elif (y == 0) and (w > h):
        # Enter here if "c" is the "Top contour".
        y0 = h  # y0 is defined by the top contour (y0 is the extreme bottom of the top contour).
        cv2.putText(inv_thresh_bgr, 'Top', (cx, cy + 10), cv2.FONT_HERSHEY_DUPLEX, 1, (0, 255, 0), 2)  # Draw text for testing
    elif ((y + h) == img.shape[0]) and (w > h):
        # Enter here if "c" is the "Bottom contour".
        y1 = y  # y1 is defined by the bottom contour (y1 is the extreme top of the bottom contour).
        cv2.putText(inv_thresh_bgr, 'Bottom', (cx, cy + 10), cv2.FONT_HERSHEY_DUPLEX, 1, (0, 255, 0), 2)  # Draw text for testing

cv2.imwrite('inv_thresh_bgr.png', inv_thresh_bgr)  # Save for testing

# Crop the part without black margins.
img = img[y0:y1, x0:x1, :]

cv2.imshow('thresh', thresh)  # Show for testing
cv2.imshow('inv_thresh', inv_thresh)  # Show for testing
cv2.imshow('inv_thresh_bgr', inv_thresh_bgr)  # Show for testing
cv2.imshow('img', img)  # Show for testing
cv2.waitKey()
cv2.destroyAllWindows()
```

Results: