Context: I'm trying to create a multi-index dataframe from a nested dictionary. Using an example is easier to explain:

This is a minimal reproducible example of the dictionary I have:

{

'naive_bayes': {

'classifier': MultinomialNB(),

'count_vect': {

'consumer ': {

'precision': 0.8888888888888888,

'recall': 0.4444444444444444,

'f1-score': 0.5925925925925926,

'support': 18

},

'deal_sites': {

'precision': 0.7241379310344828,

'recall': 0.7664233576642335,

'f1-score': 0.7446808510638298,

'support': 137

},

'activist': {

'precision': 1.0,

'recall': 0.5,

'f1-score': 0.6666666666666666,

'support': 4

},

'news_outlet': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 3

},

'retailer': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 3

},

'influencer': {

'precision': 0.717948717948718,

'recall': 0.8,

'f1-score': 0.7567567567567569,

'support': 35

},

'horeca': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 4

},

'own_brand': {

'precision': 0.5638297872340425,

'recall': 0.726027397260274,

'f1-score': 0.6347305389221557,

'support': 73

},

'reviewers': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 1

},

'recipe_provider': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 2

},

'wellness_community': {

'precision': 0.6,

'recall': 0.21428571428571427,

'f1-score': 0.3157894736842105,

'support': 14

},

'accuracy': 0.6768707482993197,

'macro avg': {

'precision': 0.40861866591873924,

'recall': 0.3137437194231515,

'f1-score': 0.3373833526987466,

'support': 294

},

'weighted avg': {

'precision': 0.6595057011837224,

'recall': 0.6768707482993197,

'f1-score': 0.6550934639063294,

'support': 294

}

},

'tfidf_vect': {

'consumer ': {

'precision': 1.0,

'recall': 0.3333333333333333,

'f1-score': 0.5,

'support': 18

},

'deal_sites': {

'precision': 0.5485232067510548,

'recall': 0.948905109489051,

'f1-score': 0.6951871657754011,

'support': 137

},

'activist': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 4

},

'news_outlet': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 3

},

'retailer': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 3

},

'influencer': {

'precision': 1.0,

'recall': 0.02857142857142857,

'f1-score': 0.05555555555555556,

'support': 35

},

'horeca': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 4

},

'own_brand': {

'precision': 0.7,

'recall': 0.4794520547945205,

'f1-score': 0.5691056910569106,

'support': 73

},

'reviewers': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 1

},

'recipe_provider': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 2

},

'wellness_community': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 14

},

'accuracy': 0.5850340136054422,

'macro avg': {

'precision': 0.2953202915228232,

'recall': 0.16275108419893938,

'f1-score': 0.1654407647625334,

'support': 294

},

'weighted avg': {

'precision': 0.6096859840982807,

'recall': 0.5850340136054422,

'f1-score': 0.5024823183769689,

'support': 294

}

},

'ngram_tfidf_vect': {

'consumer ': {

'precision': 1.0,

'recall': 0.3888888888888889,

'f1-score': 0.56,

'support': 18

},

'deal_sites': {

'precision': 0.5972222222222222,

'recall': 0.9416058394160584,

'f1-score': 0.7308781869688386,

'support': 137

},

'activist': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 4

},

'news_outlet': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 3

},

'retailer': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 3

},

'influencer': {

'precision': 0.8,

'recall': 0.34285714285714286,

'f1-score': 0.48000000000000004,

'support': 35

},

'horeca': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 4

},

'own_brand': {

'precision': 0.6785714285714286,

'recall': 0.5205479452054794,

'f1-score': 0.5891472868217054,

'support': 73

},

'reviewers': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 1

},

'recipe_provider': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 2

},

'wellness_community': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 14

},

'accuracy': 0.6326530612244898,

'macro avg': {

'precision': 0.27961760461760465,

'recall': 0.19944543785159724,

'f1-score': 0.2145477703445949,

'support': 294

},

'weighted avg': {

'precision': 0.603248839218227,

'recall': 0.6326530612244898,

'f1-score': 0.5782927331725013,

'support': 294

}

},

'char_ngram_tfidf_vect': {

'consumer ': {

'precision': 1.0,

'recall': 0.2777777777777778,

'f1-score': 0.4347826086956522,

'support': 18

},

'deal_sites': {

'precision': 0.5315315315315315,

'recall': 0.8613138686131386,

'f1-score': 0.6573816155988857,

'support': 137

},

'activist': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 4

},

'news_outlet': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 3

},

'retailer': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 3

},

'influencer': {

'precision': 1.0,

'recall': 0.02857142857142857,

'f1-score': 0.05555555555555556,

'support': 35

},

'horeca': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 4

},

'own_brand': {

'precision': 0.5606060606060606,

'recall': 0.5068493150684932,

'f1-score': 0.5323741007194245,

'support': 73

},

'reviewers': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 1

},

'recipe_provider': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 2

},

'wellness_community': {

'precision': 0.0,

'recall': 0.0,

'f1-score': 0.0,

'support': 14

},

'accuracy': 0.5476190476190477,

'macro avg': {

'precision': 0.2811034174670538,

'recall': 0.15222839909371258,

'f1-score': 0.15273580732450165,

'support': 294

},

'weighted avg': {

'precision': 0.5671566742995315,

'recall': 0.5476190476190477,

'f1-score': 0.47175211595418887,

'support': 294

}

}

}

}

From this, I'm using this code:

result_dataframe = pd.DataFrame.from_dict(all_models, orient="index").stack().to_frame()

To get an output as such:

naive_bayes classifier MultinomialNB()

count_vect {'consumer ': {'precision': 0.8888888888888888...

tfidf_vect {'consumer ': {'precision': 1.0, 'recall': 0.3...

ngram_tfidf_vect {'consumer ': {'precision': 1.0, 'recall': 0.3...

char_ngram_tfidf_vect {'consumer ': {'precision': 1.0, 'recall': 0.2...

However, this is the output I'd like to achieve:

naïve_bayes classifier Unnamed: 2 Unnamed: 3 Unnamed: 4

0 count_vect consumer precision 0.39

1 recall 0.56

2 f1-score 0.8

3 … …

4 reviewer precision 0.39

5 recall 0.56

6 f1-score 0.8

7 … …

8 site_deal precision 0.39

9 recall 0.56

10 f1-score 0.8

11 … …

12 own_brand precision 0.39

13 recall 0.56

14 f1-score 0.8

15 … …

16 … … …

17 tfidf_vect consumer precision 0.39

18 recall 0.56

19 f1-score 0.8

34 ngram_tfidf_vect consumer precision 0.39

35 recall 0.56

36 f1-score 0.8

37 … …

38 reviewer precision 0.39

39 recall 0.56

40 f1-score 0.8

41 … …

42 site_deal precision 0.39

43 recall 0.56

44 f1-score 0.8

45 … …

46 own_brand precision 0.39

47 recall 0.56

48 f1-score 0.8

49 … …

50 … … …

Note: This is just an example of an output, the values are not the same as the minimal data example I provided with

Is there any way I could achieve this result? It doesn't have to be necessarily like I've shown (can also be column-based instead of row-based like I did here)

Thank you all for your time! Any help is very welcome

CodePudding user response:

You can use pd.json_normalize to flatten levels of dicts.

data = # your given dictionary

df = pd.json_normalize(data)

# flatten the dicts but then split

# all columns on `.` into different column levels

df.columns = pd.MultiIndex.from_tuples([tuple(col.split(".")) for col in df.columns])

df

From here we can transpose or unstack

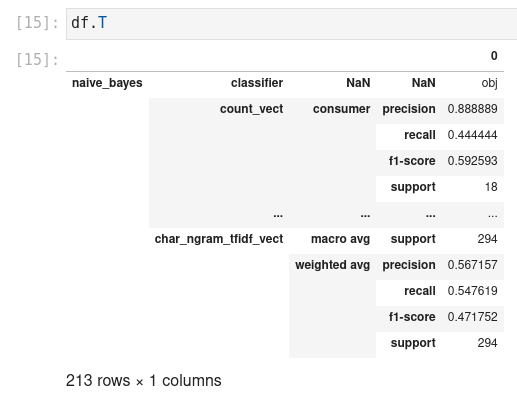

df.T

# or df.unstack(-1).unstack(-1) # pet peeve: unstack sorts, unfortunately

This might be closing in towards the result you're looking for:

It remains to name the index levels and columns.