I would like to extract only the strike-out text from a .pdf file. I have tried the code below; it works with a sample PDF file I have, but not with another PDF file which I think is a scanned one. Is there any standard way to extract only strike-out text from a PDF file using Python? Any help would be really appreciated.

This is the code I was using:

```python
from typing import Optional, Sequence

from docx import Document  # python-docx
from pdf2docx import parse


def convert_pdf2docx(input_file: str, output_file: str,
                     pages: Optional[Sequence] = None) -> dict:
    """Converts pdf to docx"""
    if pages:
        # Accept page numbers given either as ints or as numeric strings
        pages = [int(i) for i in pages if str(i).isnumeric()]
    # docx_file is the second positional parameter of pdf2docx.parse
    parse(input_file, output_file, pages=pages)
    summary = {
        "File": input_file, "Pages": str(pages), "Output File": output_file
    }
    return summary


if __name__ == "__main__":
    pdf_file = 'D:/AWS practice/sample_striken_out.pdf'
    doc_file = 'D:/AWS practice/sample_striken_out.docx'
    convert_pdf2docx(pdf_file, doc_file)

    document = Document(doc_file)
    with open('D:/AWS practice/sample_striken_out.txt', 'w', encoding='utf-8') as f:
        for p in document.paragraphs:
            for run in p.runs:
                # Writes runs that are NOT struck through; use
                # `if run.font.strike:` to keep only the struck-out text instead.
                # run.font.strike is None when the value is inherited from a style.
                if not run.font.strike:
                    f.write(run.text)
                    print(run.text)
            f.write('\n')
```

Note: I am converting the PDF to DOCX first and then trying to identify the strike-out text. This works with the sample file but not with the scanned PDF file: the PDF-to-DOCX conversion runs, but the strike-through is not detected.
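A scanned PDF usually contains only page images, so there is no text (and no vector strike-through line) for a converter such as pdf2docx to work with. A quick way to confirm this is to check whether each page has an extractable text layer. The sketch below assumes PyMuPDF (`pip install pymupdf`, imported as `fitz`); `extract_page_texts` and `pages_without_text` are hypothetical helper names.

```python
from typing import Iterable, List


def extract_page_texts(pdf_path: str) -> List[str]:
    """Return the plain text of every page (assumes PyMuPDF is installed)."""
    import fitz  # PyMuPDF, imported lazily so the helper below works without it

    with fitz.open(pdf_path) as doc:
        return [page.get_text() for page in doc]


def pages_without_text(page_texts: Iterable[str]) -> List[int]:
    """Return 0-based indices of pages whose text layer is empty.

    A scanned page is usually just an embedded image, so its extracted text
    is empty or whitespace only; such pages need OCR before any formatting,
    including strike-through, can be recovered.
    """
    return [i for i, text in enumerate(page_texts) if not text.strip()]


if __name__ == "__main__":
    import sys

    texts = extract_page_texts(sys.argv[1])
    print("Pages with no text layer:", pages_without_text(texts))
```

If every page is reported, the file is most likely image-only and the DOCX route cannot find any strike-through.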


Q.

another pdf file which I think is a scanned one. Is there any standard way to extract only strike-out text from a pdf file using python?

A.

You can use any language, including Python, but like many reverse-engineering tasks, decompiling a very complex yet "dumb" compiled page-description format such as PDF is not one task but many, often working on single characters. For one of the better solutions in PDF extraction, see:

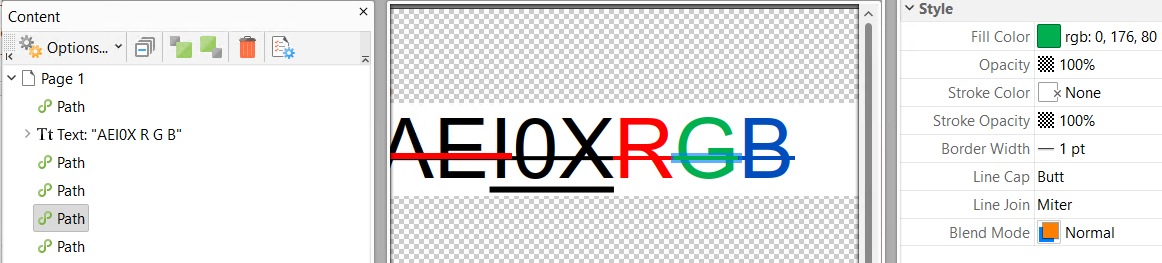
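In a PDF that does have a text layer, strike-through is not a property of the text itself; it is usually a thin line drawn across the glyphs, which is presumably what pdf2docx detects before setting the DOCX strike flag. The sketch below performs a similar inference directly with PyMuPDF: it compares each text span's bounding box with the bounding boxes of vector drawings. It is a heuristic under stated assumptions: PyMuPDF is installed, and `is_struck`, `struck_spans`, `struck_text_from_pdf` and the thresholds (3 pt thickness, 50 % overlap, central half of the span) are hypothetical choices for illustration.

```python
from typing import Iterable, List, Sequence, Tuple

BBox = Tuple[float, float, float, float]  # (x0, y0, x1, y1); y grows downward


def is_struck(span: BBox, line: BBox, max_thickness: float = 3.0,
              min_overlap: float = 0.5) -> bool:
    """Heuristic: does a thin horizontal line cross the middle of a text span?"""
    sx0, sy0, sx1, sy1 = span
    lx0, ly0, lx1, ly1 = line
    if ly1 - ly0 > max_thickness:  # too thick to be a stroke (e.g. a filled box)
        return False
    overlap = min(sx1, lx1) - max(sx0, lx0)
    if sx1 <= sx0 or overlap < min_overlap * (sx1 - sx0):
        return False
    height = sy1 - sy0
    y_mid = (ly0 + ly1) / 2
    # A strike-through sits in the central half of the glyph box;
    # an underline sits near the bottom and is rejected here.
    return sy0 + height / 4 <= y_mid <= sy1 - height / 4


def struck_spans(spans: Iterable[Tuple[str, BBox]], lines: Sequence[BBox]) -> List[str]:
    """Return the text of every span crossed by at least one candidate line."""
    return [text for text, bbox in spans if any(is_struck(bbox, ln) for ln in lines)]


def struck_text_from_pdf(pdf_path: str) -> List[str]:
    """Apply the heuristic to every page of a PDF (assumes PyMuPDF is installed)."""
    import fitz  # PyMuPDF, imported lazily

    found: List[str] = []
    with fitz.open(pdf_path) as doc:
        for page in doc:
            spans = [
                (span["text"], tuple(span["bbox"]))
                for block in page.get_text("dict")["blocks"]
                for line in block.get("lines", [])  # image blocks have no "lines"
                for span in line["spans"]
            ]
            # Each vector path reports its bounding box under "rect".
            strokes = [tuple(path["rect"]) for path in page.get_drawings()]
            found.extend(struck_spans(spans, strokes))
    return found
```

A span is a run of identically formatted characters, so a line crossing only part of a long span may be missed or may flag the whole span; a character-level comparison would be more precise.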

In the DOCX, common text such as the underlined `I0X` is grouped differently, "in-line":

```xml
<w:r>
  <w:rPr>
    <w:rFonts w:ascii="Verdana" w:hAnsi="Verdana" w:cs="Verdana" w:eastAsia="Verdana"/>
    <w:strike w:val="true"/>
    <w:color w:val="auto"/>
    <w:spacing w:val="0"/>
    <w:position w:val="0"/>
    <w:sz w:val="50"/>
    <w:u w:val="single"/>
    <w:shd w:fill="auto" w:val="clear"/>
  </w:rPr>
  <w:t xml:space="preserve">I0X</w:t>
</w:r>
```
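Because the flag lives directly in the run properties, struck runs can be read from `word/document.xml` with only the standard library. This is a sketch assuming direct formatting: `struck_runs` and `struck_runs_from_docx` are hypothetical names, and it treats `w:strike` and `w:dstrike` as on unless `w:val` is `false`, `0` or `off`.

```python
import xml.etree.ElementTree as ET
import zipfile
from typing import List, Optional

W = "{http://schemas.openxmlformats.org/wordprocessingml/2006/main}"
_OFF = {"false", "0", "off"}  # w:val values that switch a toggle property off


def _is_on(elem: Optional[ET.Element]) -> bool:
    # A toggle element without w:val (e.g. <w:strike/>) means "on".
    return elem is not None and elem.get(W + "val", "true").lower() not in _OFF


def struck_runs(document_xml: bytes) -> List[str]:
    """Return the text of every run with direct single or double strike-through."""
    root = ET.fromstring(document_xml)
    out = []
    for run in root.iter(W + "r"):
        rpr = run.find(W + "rPr")
        if rpr is None:
            continue
        if _is_on(rpr.find(W + "strike")) or _is_on(rpr.find(W + "dstrike")):
            out.append("".join(t.text or "" for t in run.iter(W + "t")))
    return out


def struck_runs_from_docx(path: str) -> List[str]:
    with zipfile.ZipFile(path) as zf:
        return struck_runs(zf.read("word/document.xml"))
```

Strike-through applied through a character or paragraph style (`w:rStyle`, `w:pStyle`) is not resolved here, which is also why python-docx's `run.font.strike` can return `None`.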

Thus the monochrome text is marked both as underlined (the line floating under it) and as struck through, as properties of the same run.

For this and many other reasons, it is not easy for a program to decide how to handle such cases, and every library handles them differently depending on its input. What they will generally agree on is that a basic PDF converter has little chance of turning a line of pixels in a scanned image into an OCR strike-through.
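For a scanned page, one possible route is OCR plus a little image processing: let Tesseract find word boxes, then look inside each box for a row of mostly dark pixels across the middle. The sketch below assumes NumPy, Pillow and pytesseract (with the Tesseract binary) are installed and that each PDF page has already been rendered to an image file, for example with PyMuPDF's `Page.get_pixmap`. `has_strike_line`, `struck_words` and the thresholds are hypothetical; this is a heuristic, not a standard method, and the strike line itself can degrade the OCR result.

```python
from typing import List

import numpy as np


def has_strike_line(word_img: np.ndarray, ink_threshold: int = 128,
                    min_fill: float = 0.8) -> bool:
    """Heuristic: True if a row in the central half of a grayscale crop is mostly ink."""
    if word_img.ndim != 2 or word_img.shape[0] < 3 or word_img.shape[1] == 0:
        return False
    ink = word_img < ink_threshold  # dark pixels on a light background
    h = ink.shape[0]
    band = ink[h // 4 : h - h // 4]  # skip top and bottom quarters (ascenders, underline)
    # Ordinary glyphs leave gaps along a row; a strike line fills it almost completely.
    return bool((band.mean(axis=1) >= min_fill).any())


def struck_words(image_path: str) -> List[str]:
    """OCR a page image and return words whose box contains a strike line."""
    import pytesseract  # imported lazily; needs the Tesseract binary
    from PIL import Image

    img = Image.open(image_path).convert("L")
    gray = np.array(img)
    data = pytesseract.image_to_data(img, output_type=pytesseract.Output.DICT)
    words = []
    for text, x, y, w, h in zip(data["text"], data["left"], data["top"],
                                data["width"], data["height"]):
        if text.strip() and has_strike_line(gray[y : y + h, x : x + w]):
            words.append(text)
    return words
```

Checking only the central half of each box is what separates a strike-through from an underline, mirroring the distinction in the DOCX run properties above.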