I have a column called createdtime with datatype timestamp. I want to find the count of rows where createdtime is null, empty, or NaN.

I tried the method below, but I am getting an error and could not get past it:

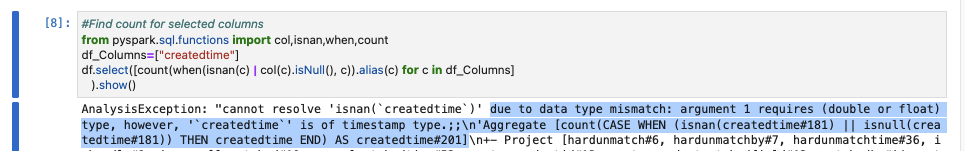

#Find count for selected columns

from pyspark.sql.functions import col,isnan,when,count

df_Columns=["createdtime"]

df.select([count(when(isnan(c) | col(c).isNull(), c)).alias(c) for c in df_Columns]

).show()

CodePudding user response:

Try using the isnull and sum functions, as in this pandas snippet:

import numpy as np

import pandas as pd

some_data = np.arange(100, 200, 10)

createdtime = np.array([np.nan, *[11, 13, 14, 5], np.nan, *[18, 19, 26, 12]])

df = pd.DataFrame({"some_data": some_data, "createdtime": createdtime})

print(df.createdtime.isnull().sum())

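df.createdtime.isnull().sum() returns the number of missing values in the column. This works on a pandas.DataFrame. I think your dataframe is a PySpark DataFrame rather than a pandas one; in that case you can convert it first with toPandas(), which collects all rows to the driver and so only suits data that fits in memory.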
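Since the column in the question is a timestamp, here is a minimal sketch of the same idea on a datetime column, where pandas represents missing values as NaT. The sample timestamps are made up for illustration, and the sketch assumes the data is already in a pandas.DataFrame.

```python
import pandas as pd

# Hypothetical sample data: two of the five timestamps are missing.
df = pd.DataFrame(
    {
        "createdtime": pd.to_datetime(
            [
                "2022-01-01 10:00:00",
                None,
                "2022-01-03 12:30:00",
                None,
                "2022-01-05 08:15:00",
            ]
        )
    }
)

# isnull() flags NaT (the missing value for datetimes);
# sum() counts the True entries.
missing_count = int(df["createdtime"].isnull().sum())
print(missing_count)
```

With this sample data the printed count is 2.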

CodePudding user response:

In PySpark, missing values can be counted with two functions:

isnan() counts NaN (missing) values, either for a single column or for all columns of a dataframe.

isNull() counts null values, either for a single column or for all columns of a dataframe.

Suppose the data frame is named df1. The code to get the count of both null and NaN values in every column would be:

from pyspark.sql.functions import isnan, when, count, col

df1.select([count(when(isnan(c) | col(c).isNull(), c)).alias(c) for c in

df1.columns]).show()