Is there any way to insert 50k records (rows) into a PostgreSQL database using DBeaver? Locally it worked fine and took about 1 minute, because I had also changed the memory settings of PostgreSQL and DBeaver. But in our development environment, running the 50k insert queries did not work.

Is there a way to do this anyway, or do I need to split the queries and, for example, run 10k queries 5 times? Any tricks?

EDIT: by "did not work" I mean that after 2500 seconds I got an error saying something like "too much data ranges".

Answer:

If you intend to execute a giant SQL script through the DBeaver interface: don't even try.

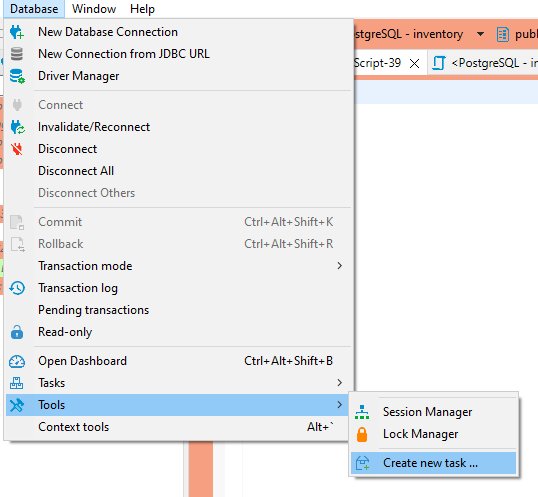

If you have a CSV file, DBeaver provides a dedicated import tool (right-click the target table and choose Import Data).

Even better, as mentioned in the comments, PostgreSQL's COPY command is the right tool for bulk loading.
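A minimal sketch of the COPY route, assuming the data is already in a CSV file with a header row whose columns match the target table. It uses psql's client-side `\copy` meta-command, which reads the file on the machine running psql; a server-side `COPY ... FROM 'file'` would need the file to sit on the database server and extra privileges. The function name `load_csv`, the table name and the file path are hypothetical; host, user and database default to the same placeholders the psql command in this answer uses and can be overridden via the standard `PGHOST`, `PGUSER` and `PGDATABASE` environment variables.

```shell
#!/usr/bin/env bash
# Sketch: bulk-load a CSV file with psql's client-side \copy.
# Assumptions: the CSV has a header row and its columns match the table.
# load_csv is a hypothetical helper name; connection values are placeholders.
set -euo pipefail

load_csv() {
  local table="$1"  # hypothetical target table, e.g. my_table
  local csv="$2"    # path to the CSV file on the machine running psql
  # \copy streams the local file through the client connection,
  # so the file does not need to exist on the database server.
  psql -h "${PGHOST:-host}" -U "${PGUSER:-username}" -d "${PGDATABASE:-myDataBase}" \
    -c "\\copy ${table} FROM '${csv}' WITH (FORMAT csv, HEADER true)"
}

# Usage (hypothetical names):
#   load_csv my_table /path/to/data.csv
```

One `\copy` of 50k rows is a single bulk operation, so there is no need to split the data into batches as with individual INSERT statements.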

If you have a giant SQL file, run it from the command line with psql instead, for example:

psql -h host -U username -d myDataBase -a -f myInsertFile

For more details, see this post: Run a PostgreSQL .sql file using command line arguments
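A slightly more defensive variant of the psql command above, as a sketch rather than the only correct invocation. `-v ON_ERROR_STOP=1` makes psql stop at the first failing statement (exiting with status 3) instead of continuing through the remaining inserts, and `--single-transaction` wraps the whole file in one BEGIN/COMMIT so a failure does not leave a partial load. It also drops `-a`, which echoes every input line and produces a lot of output for 50k statements. The wrapper name `run_sql_file` is hypothetical; `host`, `username`, `myDataBase` and `myInsertFile` are the placeholders from the original command.

```shell
#!/usr/bin/env bash
# Sketch: run a large INSERT script non-interactively with psql.
# run_sql_file is a hypothetical helper; connection values are placeholders.
set -euo pipefail

run_sql_file() {
  local sql_file="${1:-myInsertFile}"
  # ON_ERROR_STOP=1: abort on the first error (psql then exits with status 3).
  # --single-transaction: wrap the whole file in BEGIN/COMMIT, so nothing
  # is committed if any statement fails.
  psql -h "${PGHOST:-host}" -U "${PGUSER:-username}" -d "${PGDATABASE:-myDataBase}" \
    -v ON_ERROR_STOP=1 --single-transaction -f "$sql_file"
}

# Usage:
#   run_sql_file myInsertFile
```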