I'm running code in RStudio. When my script finishes, I get the orange message `There were 50 or more warnings (use warnings() to see the first 50)` at the bottom of the output (in practice, I suspect the number of warnings is at least in the tens of thousands). Are these warnings slowing down my code because they're I/O operations and therefore expensive? I'm not seeing any error messages, but I don't know how RStudio handles them: whether they still count as I/O operations, or whether its way of handling them avoids the slowdown I would normally expect from that many I/O operations.

Answer:

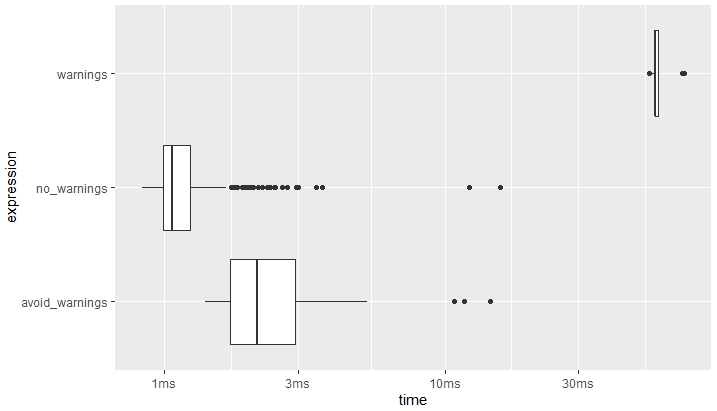

Generally, yes: code that generates a lot of warnings will take some performance hit, although whether the impact is meaningful will vary. To illustrate:

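```r
library(bench)

v <- -1000:1000

res <- mark(
  # Take abs() first, so log() never receives a negative number
  no_warnings = sapply(abs(v), log),
  # Return NaN for negative inputs instead of letting log() warn
  avoid_warnings = sapply(v, \(x) if (x < 0) NaN else log(x)),
  # log() of a negative number returns NaN with a "NaNs produced" warning
  warnings = sapply(v, log),
  # Results differ (no_warnings contains no NaN), so skip the equality check
  check = FALSE
)
#> There were 50 or more warnings (use warnings() to see the first 50)
```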

Results:

```
# A tibble: 3 × 13
  expression          min   median `itr/sec` mem_alloc `gc/sec` n_itr  n_gc total_time
  <bch:expr>     <bch:tm>  <bch:t>     <dbl> <bch:byt>    <dbl> <int> <dbl>   <bch:tm>
1 no_warnings     833.6µs   1.06ms     832.     78.9KB     4.23   393     2      472ms
2 avoid_warnings    1.4ms   2.13ms     418.     73.2KB     6.46   194     3      464ms
3 warnings         53.9ms  56.37ms      17.8      64KB     5.08     7     2      394ms
# … with 4 more variables: result <list>, memory <list>, time <list>, gc <list>
```

The version that triggers warnings has a median time roughly 25 to 50 times that of the other two.

```r
plot(res, type = "boxplot")
```
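The message in the question only says "50 or more" because, under the default `options(warn = 0)`, R defers warnings until the top-level call returns and keeps at most `getOption("nwarnings")` of them (50 by default). That suggests most of the cost measured above comes from R creating and signalling each warning rather than from printing it, although this is an inference, not a measurement. To see how many warnings a piece of code really signals, a sketch like the following could help; `count_warnings()` is a hypothetical helper, not part of base R or any package. It uses `withCallingHandlers()` to count each warning and `invokeRestart("muffleWarning")` to discard it.

```r
# count_warnings() is a hypothetical helper, not a base R or package function.
# It evaluates `expr`, counts every warning signalled along the way, and
# muffles each one so nothing is stored in last.warning or printed.
count_warnings <- function(expr) {
  n <- 0L
  value <- withCallingHandlers(
    expr,  # the lazy argument is evaluated here, with the handler active
    warning = function(w) {
      n <<- n + 1L                   # update the counter in the enclosing function
      invokeRestart("muffleWarning") # discard the warning and carry on
    }
  )
  list(value = value, n_warnings = n)
}

v <- -1000:1000
out <- count_warnings(sapply(v, log))
out$n_warnings  # 1000: one "NaNs produced" warning per negative element of v
```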

Answer:

From my understanding, they will slow your code down a bit, although the effect is probably marginal at most. However, if you don't need the information the warnings provide, I suggest silencing them, for example with `suppressWarnings()`.
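One caveat, offered as a hedged sketch: `suppressWarnings()` muffles warnings after they are signalled, so R still does the work of creating each one. If speed is the goal, it may be cheaper not to trigger the warning at all. The example below redefines `v` from the first answer's benchmark so it runs on its own, and compares silencing against a vectorised alternative (swapped in for illustration, not something either answer proposed) that calls `log()` only on non-negative inputs. Timing the two with `bench::mark()`, as in the first answer, would show whether the difference matters for your code.

```r
v <- -1000:1000

# suppressWarnings() muffles each warning, but R still creates and
# signals every "NaNs produced" warning before discarding it.
muffled <- suppressWarnings(sapply(v, log))

# Alternative: never trigger the warning. Start from NaN everywhere and
# call log() (once, vectorised) only on the non-negative inputs.
avoided <- rep(NaN, length(v))
ok <- v >= 0
avoided[ok] <- log(v[ok])

# Both approaches give the same values
identical(muffled, avoided)  # TRUE
```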