I am trying to read an XML file into a DataFrame in PySpark.

Code :

df_xml=spark.read.format("com.databricks.spark.xml").option("rootTag","dataset").option("rowTag","AUTHOR").load(FilePath)

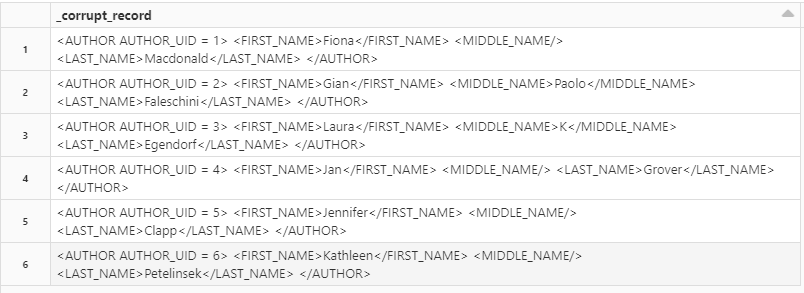

when i display the dataframe, it shows a single column corrupt_records :

Below is the XML file content:

<?xml version='1.0' encoding='UTF-8'?>

<dataset>

<AUTHOR AUTHOR_UID = 1>

<FIRST_NAME>Fiona</FIRST_NAME>

<MIDDLE_NAME/>

<LAST_NAME>Macdonald</LAST_NAME>

</AUTHOR>

<AUTHOR AUTHOR_UID = 2>

<FIRST_NAME>Gian</FIRST_NAME>

<MIDDLE_NAME>Paolo</MIDDLE_NAME>

<LAST_NAME>Faleschini</LAST_NAME>

</AUTHOR>

<AUTHOR AUTHOR_UID = 3>

<FIRST_NAME>Laura</FIRST_NAME>

<MIDDLE_NAME>K</MIDDLE_NAME>

<LAST_NAME>Egendorf</LAST_NAME>

</AUTHOR>

<AUTHOR AUTHOR_UID = 4>

<FIRST_NAME>Jan</FIRST_NAME>

<MIDDLE_NAME/>

<LAST_NAME>Grover</LAST_NAME>

</AUTHOR>

CodePudding user response:

That XML is not well-formed, so the parser treats it as a corrupt record:

- The AUTHOR_UID attribute value must be enclosed in quotes

- The dataset tag is never closed

The example below is a valid version of the same file:

<?xml version='1.0' encoding='UTF-8'?>

<dataset>

<AUTHOR AUTHOR_UID = '1'>

<FIRST_NAME>Fiona</FIRST_NAME>

<MIDDLE_NAME/>

<LAST_NAME>Macdonald</LAST_NAME>

</AUTHOR>

<AUTHOR AUTHOR_UID = '2'>

<FIRST_NAME>Gian</FIRST_NAME>

<MIDDLE_NAME>Paolo</MIDDLE_NAME>

<LAST_NAME>Faleschini</LAST_NAME>

</AUTHOR>

<AUTHOR AUTHOR_UID = '3'>

<FIRST_NAME>Laura</FIRST_NAME>

<MIDDLE_NAME>K</MIDDLE_NAME>

<LAST_NAME>Egendorf</LAST_NAME>

</AUTHOR>

<AUTHOR AUTHOR_UID = '4'>

<FIRST_NAME>Jan</FIRST_NAME>

<MIDDLE_NAME/>

<LAST_NAME>Grover</LAST_NAME>

</AUTHOR>

</dataset>
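
Before handing a file to Spark, it can help to confirm that it parses at all. The sketch below is not part of spark-xml; it uses Python's standard xml.etree.ElementTree on shortened copies of the two files above (one AUTHOR row each). The helper name well_formed_error is hypothetical and only for illustration.

```python
import xml.etree.ElementTree as ET

# Shortened copy of the original file: unquoted attribute, <dataset> never closed.
BROKEN_XML = b"""<?xml version='1.0' encoding='UTF-8'?>
<dataset>
<AUTHOR AUTHOR_UID = 1>
<FIRST_NAME>Fiona</FIRST_NAME>
<MIDDLE_NAME/>
<LAST_NAME>Macdonald</LAST_NAME>
</AUTHOR>
"""

# Shortened copy of the corrected file: quoted attribute, closing </dataset>.
FIXED_XML = b"""<?xml version='1.0' encoding='UTF-8'?>
<dataset>
<AUTHOR AUTHOR_UID = '1'>
<FIRST_NAME>Fiona</FIRST_NAME>
<MIDDLE_NAME/>
<LAST_NAME>Macdonald</LAST_NAME>
</AUTHOR>
</dataset>
"""


def well_formed_error(xml_bytes):
    """Return None if xml_bytes parses, otherwise the parser's error message.

    Hypothetical helper for illustration; it only checks well-formedness.
    """
    try:
        ET.fromstring(xml_bytes)
        return None
    except ET.ParseError as exc:
        return str(exc)


if __name__ == "__main__":
    for label, doc in (("original", BROKEN_XML), ("corrected", FIXED_XML)):
        error = well_formed_error(doc)
        print(label, "->", "OK" if error is None else error)
```

If the corrected file passes this check, the same spark.read call from the question should be able to split it into AUTHOR rows instead of returning a single corrupt-record column.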