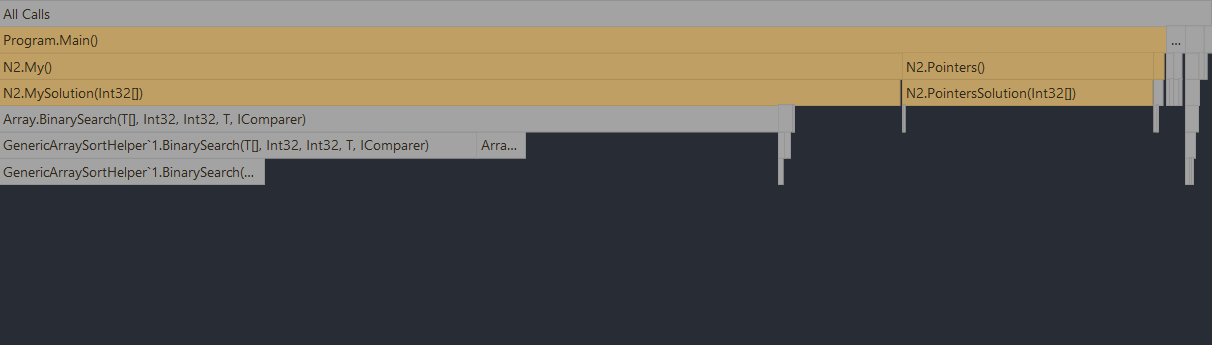

In the scenario I present, my solution is supposed to be O(n^2 * log n), and the "pointers" solution, which I assume is the fastest way to solve the "3SUM" problem, is O(n^2 * 1). That leaves the question: is O(1) faster than O(log n)? My code serves as the example.

Could someone please explain why this seems to be the case? My logic tells me that O(log n) should be as fast as O(1), if not faster.

I hope my comments on the code of my solution are clear.

Edit: I know that this does not sound very smart... log(n) grows with the input (n -> ∞), while 1... is just 1. BUT, in this case, for finding a number, how is it supposed to be faster to do sums and subtractions instead of using binary search (log n)? I just can't wrap my head around it.

That ought to do it, huh? ;)

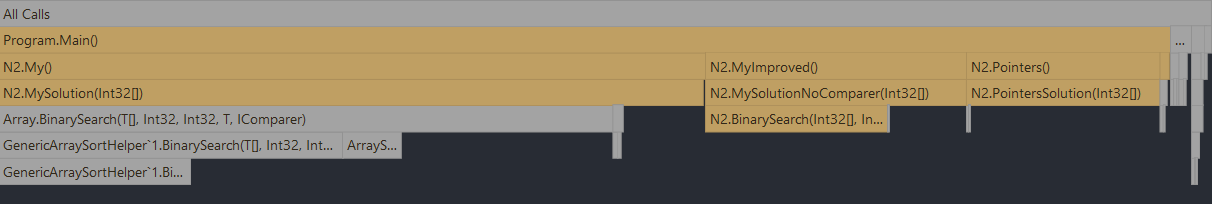

After checking the implementation, I found that BinarySearch internally uses CompareTo, which I imagine isn't ideal (but, being a generic for an unmanaged type, it shouldn't be that bad...).

To "improve" it, I dragged BinarySearch out, kicking and screaming, and replaced the CompareTo calls with actual comparison operators. I named this benchmark MyImproved. Here are the results:

BenchmarkDotNet results:

Interestingly, BenchmarkDotNet disregards common sense and puts MyImproved ahead of Pointers. This may be due to some optimization that the profiler turns off.

| Method | Complexity | Mean | Error | StdDev | Code Size |
|---|---|---|---|---|---|
| Pointers | O(n^2)??? | 76.76 ms | 1.465 ms | 1.628 ms | 1,781 B |
| My | O(n^2 * log n) | 93.08 ms | 1.831 ms | 3.980 ms | 1,999 B |
| MyImproved | O(n^2 * log n) | 62.53 ms | 1.234 ms | 2.226 ms | 1,980 B |

TL;DR:

.CompareTo() seemed to be bogging down the implementation of .BinarySearch(). Removing it and using actual integer comparison seemed to help a lot. Either that, or it's some funky interface stuff that I'm not prepared to investigate :)
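For illustration, here is a minimal sketch of what a binary search using plain integer comparisons might look like. The original MyImproved code is not reproduced in this post, so the class name `IntSearch` and the method signature are assumptions; only the return convention (index if found, bitwise complement of the insertion point otherwise) is modeled on `Array.BinarySearch`.

```csharp
using System;

public static class IntSearch
{
    // Hypothetical helper (not the original MyImproved code): binary search over
    // a sorted int[] segment using ==, < and > directly instead of CompareTo.
    // Returns the index of `value`, or the bitwise complement of the insertion
    // point when the value is absent, mirroring Array.BinarySearch.
    public static int BinarySearch(int[] array, int index, int length, int value)
    {
        int lo = index;
        int hi = index + length - 1;
        while (lo <= hi)
        {
            // Midpoint computed this way cannot overflow for large indices.
            int mid = lo + ((hi - lo) >> 1);
            int current = array[mid];

            if (current == value) return mid;
            if (current < value) lo = mid + 1;  // target is in the upper half
            else hi = mid - 1;                  // target is in the lower half
        }
        return ~lo; // negative result: value not found
    }
}
```

Whether this actually beats the built-in method depends on the runtime and JIT version, so it is worth measuring rather than assuming.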

CodePudding user response:

It seems that your conceptual misunderstanding comes from overlooking the fact that Array.BinarySearch does some iterations too (this was indicated by the initial iteration counts in the question, which you have since changed).

So while the assumption that binary search should be faster than simple iteration through the collection is pretty valid, you are missing that binary search is basically an extra loop. You should not compare those two directly; instead, compare the second for loop plus the binary search in the first solution against the second loop of the second solution.
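To make that comparison concrete, here is a rough sketch that counts inner-loop steps for both shapes of solution. It is not the code from the question (which is only available as an image); the class and method names are made up for illustration, and both methods assume the input array is already sorted.

```csharp
using System;

public static class ThreeSumSteps
{
    // Two-pointer shape: for each i, lo and hi walk toward each other,
    // so the inner loop runs at most n times per outer iteration.
    public static long CountTwoPointerSteps(int[] sorted)
    {
        long steps = 0;
        for (int i = 0; i < sorted.Length - 2; i++)
        {
            int lo = i + 1, hi = sorted.Length - 1;
            while (lo < hi)
            {
                steps++;
                int sum = sorted[i] + sorted[lo] + sorted[hi];
                if (sum < 0) lo++;
                else if (sum > 0) hi--;
                else { lo++; hi--; }
            }
        }
        return steps;
    }

    // Binary-search shape: two nested loops pick a pair, then a third,
    // logarithmic loop searches for the value that completes the triplet.
    public static long CountBinarySearchSteps(int[] sorted)
    {
        long steps = 0;
        for (int i = 0; i < sorted.Length - 2; i++)
        {
            for (int j = i + 1; j < sorted.Length - 1; j++)
            {
                int target = -(sorted[i] + sorted[j]);
                int lo = j + 1, hi = sorted.Length - 1;
                while (lo <= hi)
                {
                    steps++;
                    int mid = lo + ((hi - lo) >> 1);
                    if (sorted[mid] == target) break;
                    if (sorted[mid] < target) lo = mid + 1;
                    else hi = mid - 1;
                }
            }
        }
        return steps;
    }
}
```

The binary-search version visits every (i, j) pair and then does at least one search step per pair, so its step count can never drop below the number of pairs, while the two-pointer version does one linear sweep per i.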

P.S.

To argue about time complexity based on runtimes with at least some degree of certainty, you need to run several tests with an increasing number of elements (like 100, 1000, 10000, 100000 ...) and see how the runtime changes. Using different inputs for the same number of elements is also recommended, because in theory you can hit an optimal case for one algorithm that is a worst case for another.
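A rough sketch of such a scaling check, using only `System.Diagnostics.Stopwatch`, could look like the following. The `ScalingCheck` class, the `Measure` signature, the value range, and the fixed seed are all assumptions made for this example; a single timed run per size is noisy, so treat the numbers as a trend indicator only.

```csharp
using System;
using System.Diagnostics;

public static class ScalingCheck
{
    // Hypothetical helper: times `algorithm` once on a random sorted input
    // of each requested size and returns (size, elapsed milliseconds) pairs.
    public static (int Size, double Milliseconds)[] Measure(
        Func<int[], long> algorithm, int[] sizes, int seed = 42)
    {
        var results = new (int Size, double Milliseconds)[sizes.Length];
        var random = new Random(seed); // fixed seed keeps runs repeatable

        for (int k = 0; k < sizes.Length; k++)
        {
            int[] data = new int[sizes[k]];
            for (int i = 0; i < data.Length; i++)
                data[i] = random.Next(-1000, 1000);
            Array.Sort(data);

            algorithm(data); // warm-up call so JIT compilation is not timed

            var watch = Stopwatch.StartNew();
            algorithm(data);
            watch.Stop();

            results[k] = (sizes[k], watch.Elapsed.TotalMilliseconds);
        }
        return results;
    }
}
```

Calling it with sizes that double each time (for example 500, 1000, 2000, 4000) makes the growth easy to read: a quadratic algorithm should take roughly four times longer per doubling, and an n^2 * log n one slightly more than that. Changing the seed gives the different inputs of the same size mentioned above.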

CodePudding user response:

Two tips:

Use sharplab.io to see your lowered code; it may reveal something.

Try running these separate tests through the BenchmarkDotNet NuGet package. It'll give you more accurate timings, and if the memory usage or allocations are considerably higher in one case, that could be your answer.

Anyway, are you running these tests in debug or release mode? I just had a thought that I haven't tested recently, but I believe that the debugger overhead can significantly affect the performance of a binary search.

Give it a go, and let me know.