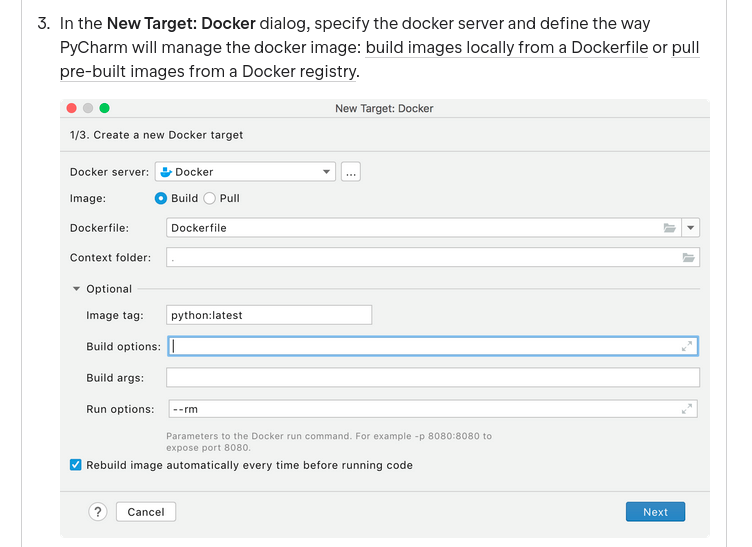

I'm working on Ubuntu 20. I've installed docker and nvidia-docker2. In PyCharm, I've followed

In the run options I additionally put --runtime=nvidia and --gpus=all.

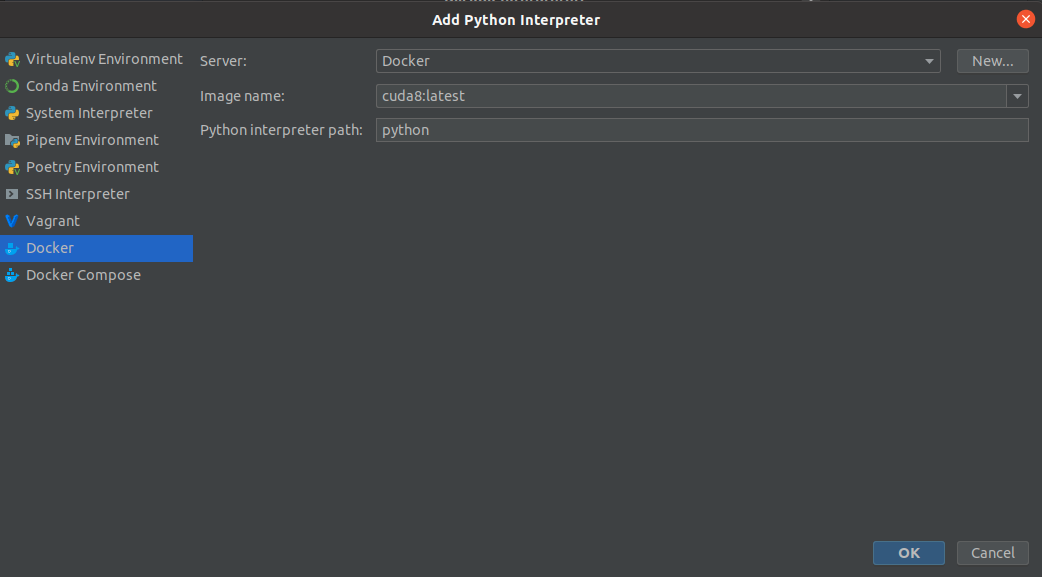

Step 4 finishes the same way as in the guide (almost, but the difference doesn't seem to affect anything, so more on that later), and in step 5 I manually entered the path to the interpreter in the virtual environment I created in the Dockerfile.

This way I am able to run nvidia-smi and see the GPU correctly, but I don't see any of the packages I installed during the Dockerfile build.

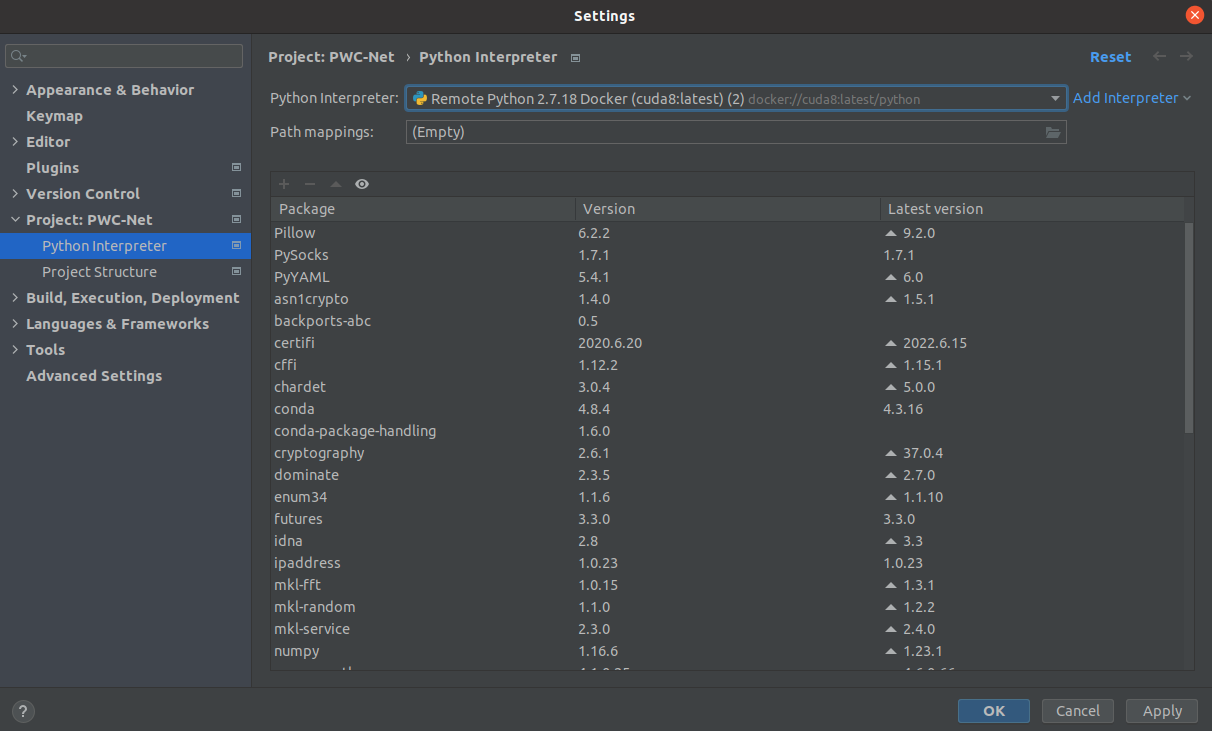
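
To check which packages the interpreter itself can see, independently of what the IDE's package list shows, a minimal sketch like the following could be run with that interpreter. It assumes Python 3.8 or newer (for `importlib.metadata`); the function name `installed_packages` is only illustrative.

```python
from importlib import metadata


def installed_packages():
    """Return {name: version} for distributions visible to this interpreter."""
    packages = {}
    for dist in metadata.distributions():
        name = dist.metadata.get("Name")
        if name:  # skip entries with broken or missing metadata
            packages[name] = dist.version
    return packages


if __name__ == "__main__":
    # Print in pip-freeze style, sorted case-insensitively by name.
    for name, version in sorted(installed_packages().items(),
                                key=lambda item: item[0].lower()):
        print(f"{name}=={version}")
```

If torch is missing from this output, the interpreter is probably not the one from the environment built in the Dockerfile.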

There is another, slightly different way to connect the interpreter, in which I do see the packages, but I can't run the nvidia-smi command and torch.cuda.is_available() returns False.

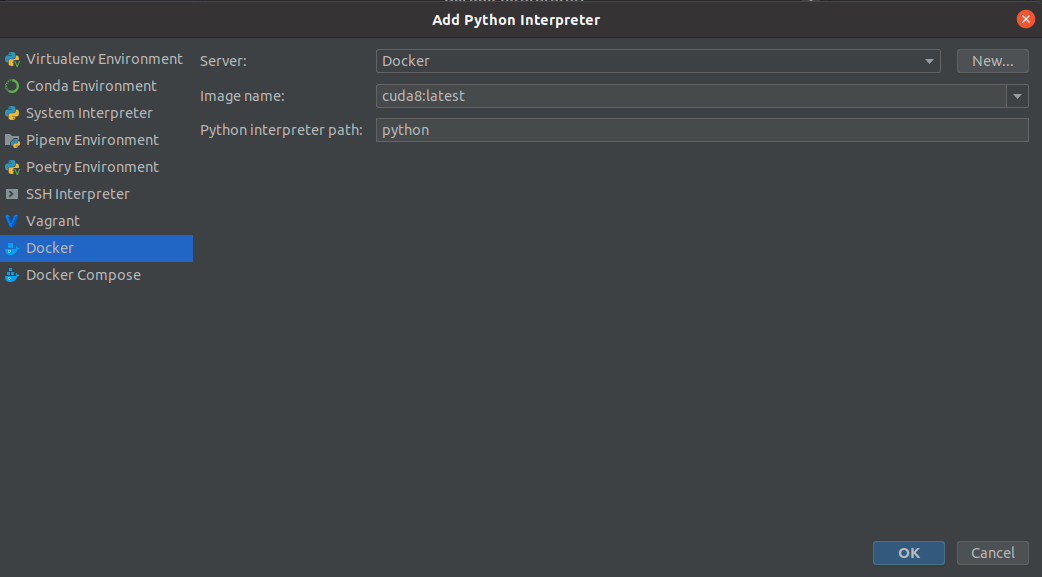

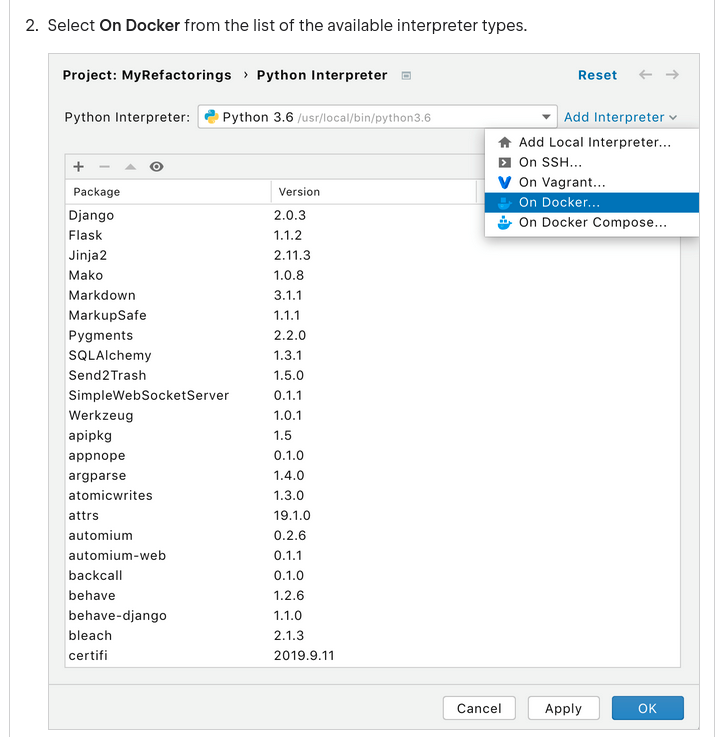

The way to do it is, instead of doing this as in the guide:

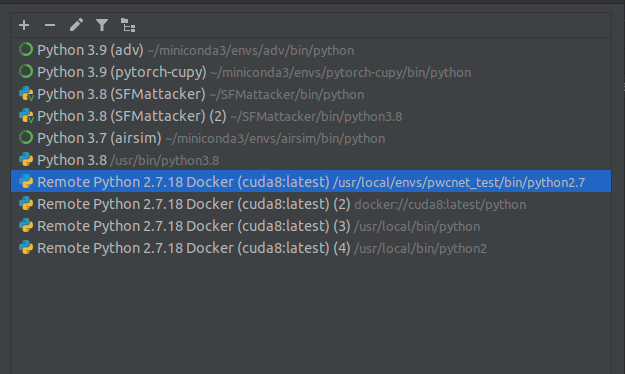

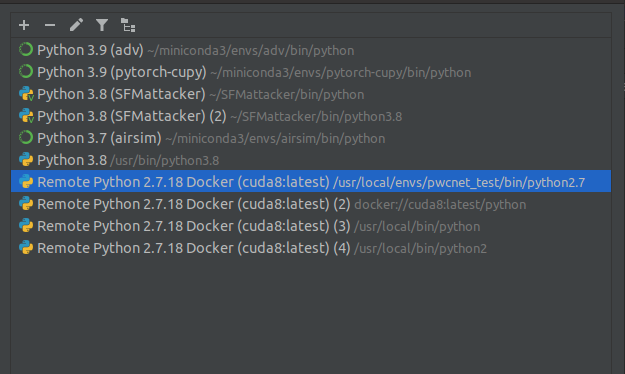

I press the little down arrow to the left of the Add Interpreter button and then click Show all:

After that I can press this button:

works, so it might be PyCharm "Python Console" issue.

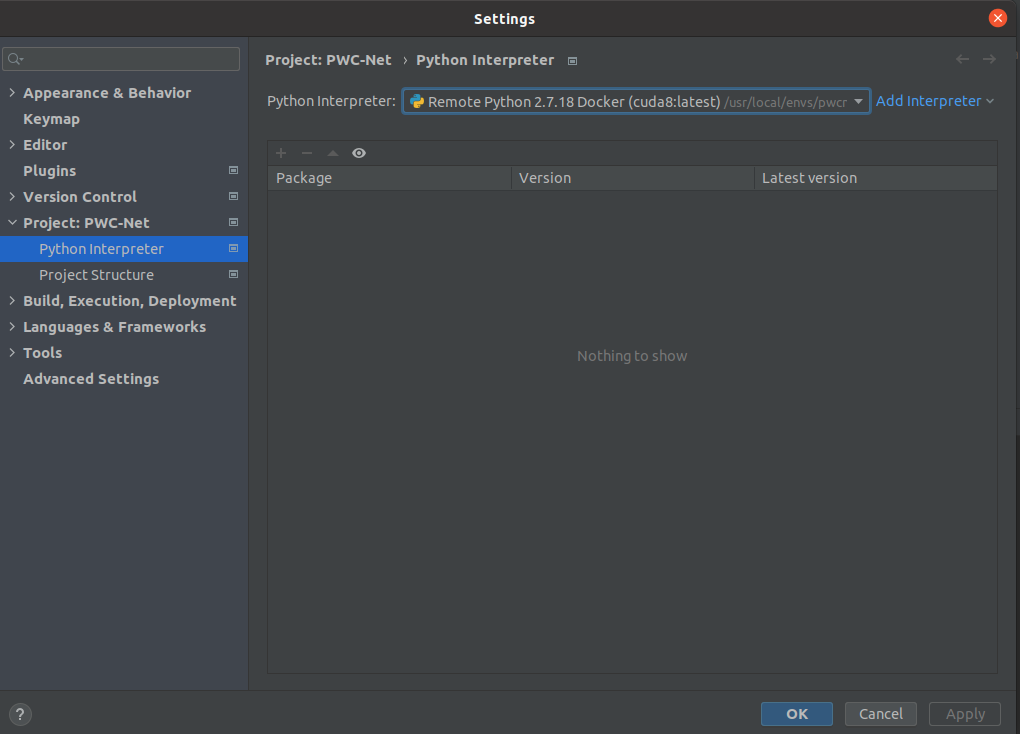

which results in the difference in functionality mentioned above and also in the displayed path (the first is the first remote interpreter from the top, and the second is the second one):

Here, of course, is the effect of the first and the second, respectively:

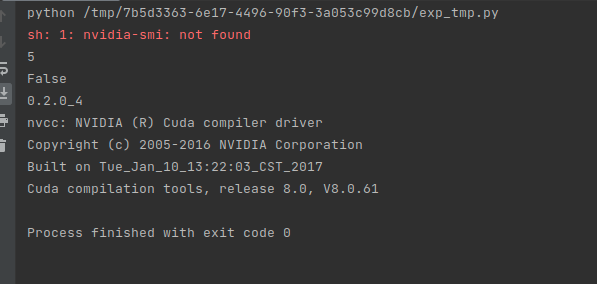

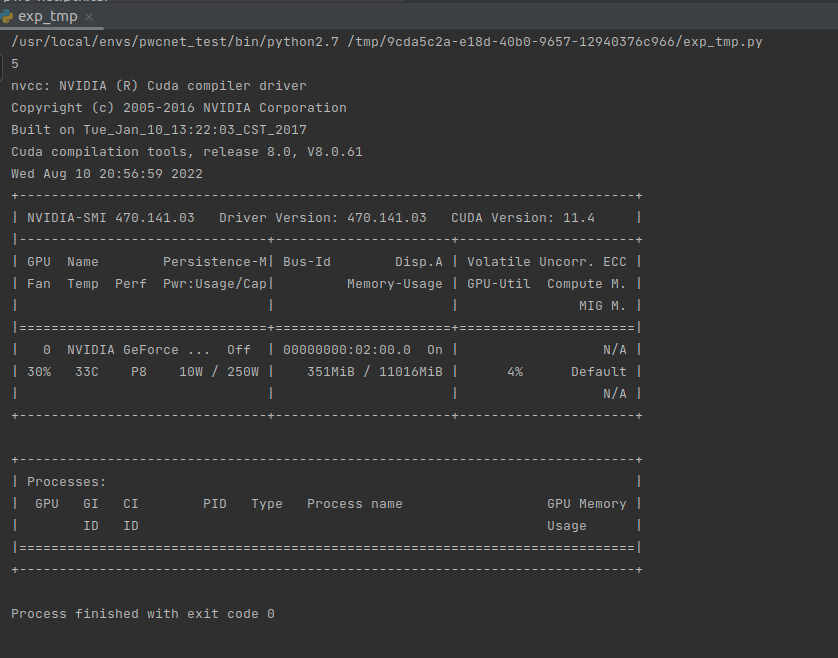

Here are the results of a run with the interpreter connected by the first method:

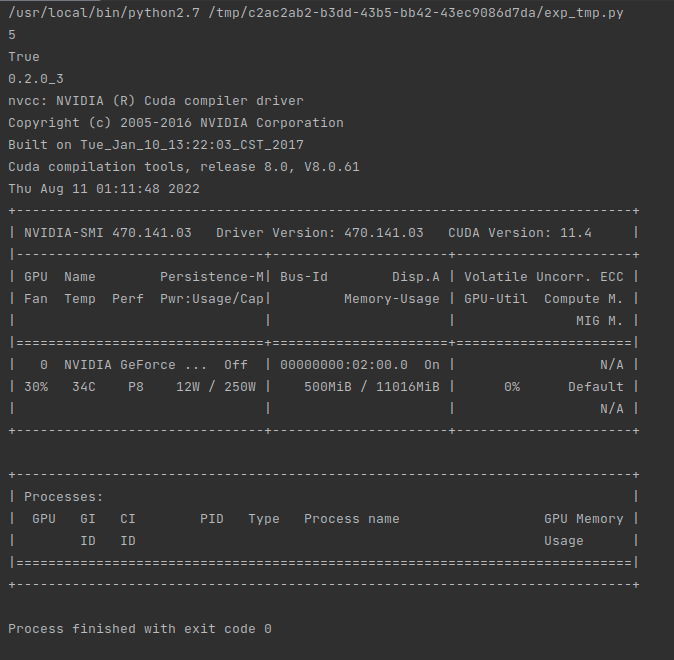

and here is the second:

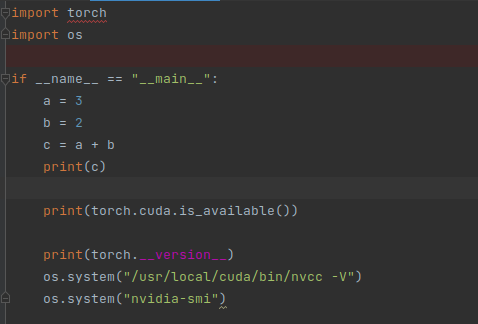

Both are outputs of the following code:

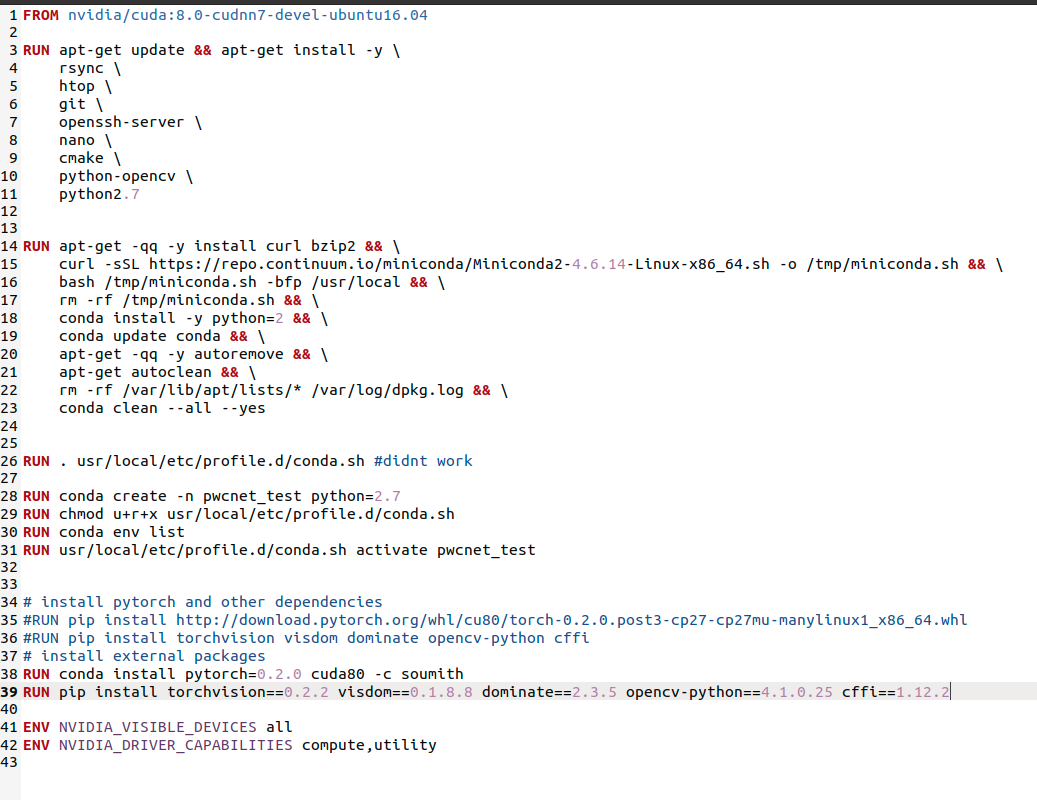

Here is the Dockerfile if you want to take a look:

Has anyone configured this correctly who can help? Thank you in advance.

P.S.: If I run the container from Services and open its terminal, the nvidia-smi command works fine, torch imports, and torch.cuda.is_available() returns True.
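
Running the same small check in each setup (the container terminal and each PyCharm interpreter configuration) makes the differences easy to compare. The following is only a sketch under the assumption of Python 3; the function name `describe_interpreter` and the dictionary keys are illustrative, not part of any tool.

```python
import importlib.util
import shutil
import sys


def describe_interpreter():
    """Collect facts that can differ between interpreter configurations."""
    info = {
        "executable": sys.executable,              # Python binary actually running
        "prefix": sys.prefix,                      # root of the active environment
        "nvidia_smi": shutil.which("nvidia-smi"),  # None if not found on PATH
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "cuda_available": None,                    # set only if torch imports
    }
    if info["torch_installed"]:
        try:
            import torch
            info["cuda_available"] = torch.cuda.is_available()
        except Exception as exc:  # a broken install should not hide the other facts
            info["cuda_available"] = f"torch import failed: {exc}"
    return info


if __name__ == "__main__":
    for key, value in describe_interpreter().items():
        print(f"{key}: {value}")
```

A different `executable` or `prefix` between two setups suggests they are not using the same Python environment, while a missing `nvidia_smi` suggests the container was started without the GPU runtime options.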

P.S. 2: What has worked for me for now is changing the Dockerfile to install torch directly with pip, without creating a conda environment.

Then I set the interpreter path to python2.7, and I can run the code but not debug it.

When running, the result is as expected (the package list shown earlier is still empty, but it works; I guess my IDE somehow cannot access the remote interpreter's package list in that case, I don't know why):

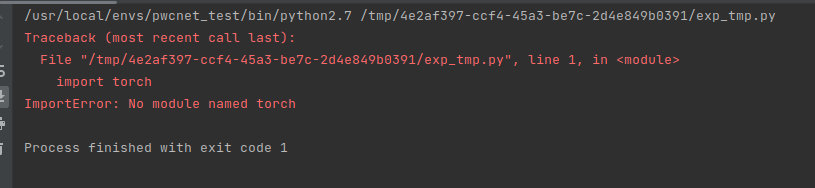

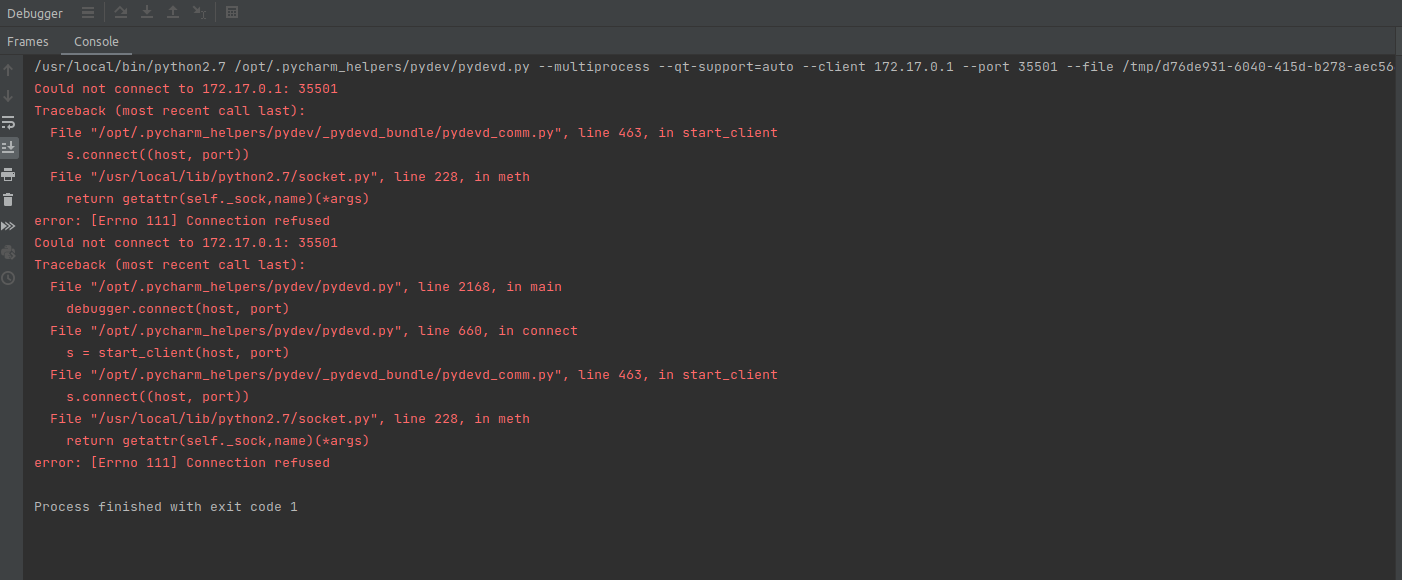

But the debugger outputs the following error:

Any suggestions for the debugger issue are also welcome, although it is a separate issue.

CodePudding user response:

Please update to PyCharm 2022.2.1, as this looks like a known regression that has been fixed.

Let me know if it still does not work well.