I am trying to teach myself Azure Data Factory and am attempting some "simple" API exercises.

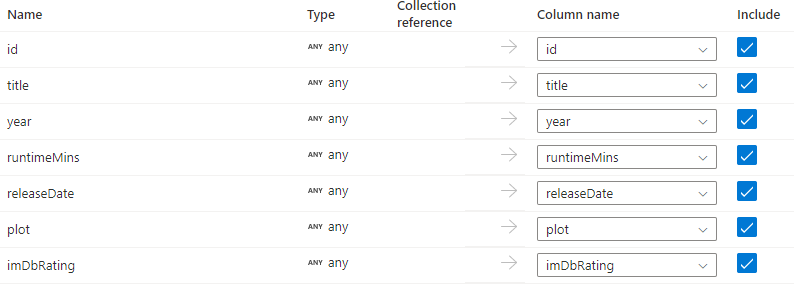

I successfully loaded data from an API call into a SQL database using the copy data activity and adding the simple mapping.

However, this was for an API call that returned a single JSON object as below.

{

"id": "tt0110413",

"title": "Léon: The Professional",

"year": "1994",

"releaseDate": "1994-11-18",

"runtimeMins": "110",

"plot": "12-year-old Mathilda is reluctantly taken in by Léon...",

"imDbRating":"8.5"

}

I would like to progress this to be able to handle an array of JSON objects as below.

{

"items":

[

{

"id": "tt0111161",

"title": "The Shawshank Redemption"

},

{

"id": "tt0068646",

"title": "The Godfather"

},

{

"id": "tt0468569",

"title": "The Dark Knight"

}

],

"errorMessage": ""

}

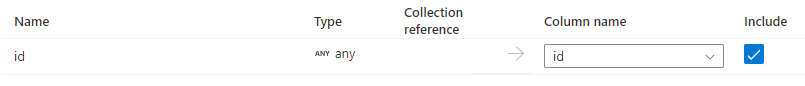

I have naively thought this would be a simple matter pointing the copy data activity at the new JSON and updating the mapping as required.

However I now receive the following error:

Data type of column 'id' can't be inferred from 1st row of data, please specify its data type in mappings of copy activity or structure of DataSet

Would someone be able to advise on how I change the mapping to handle this, or if in fact this is entirely the wrong approach.

As always, any help is greatly appreciated.

CodePudding user response:

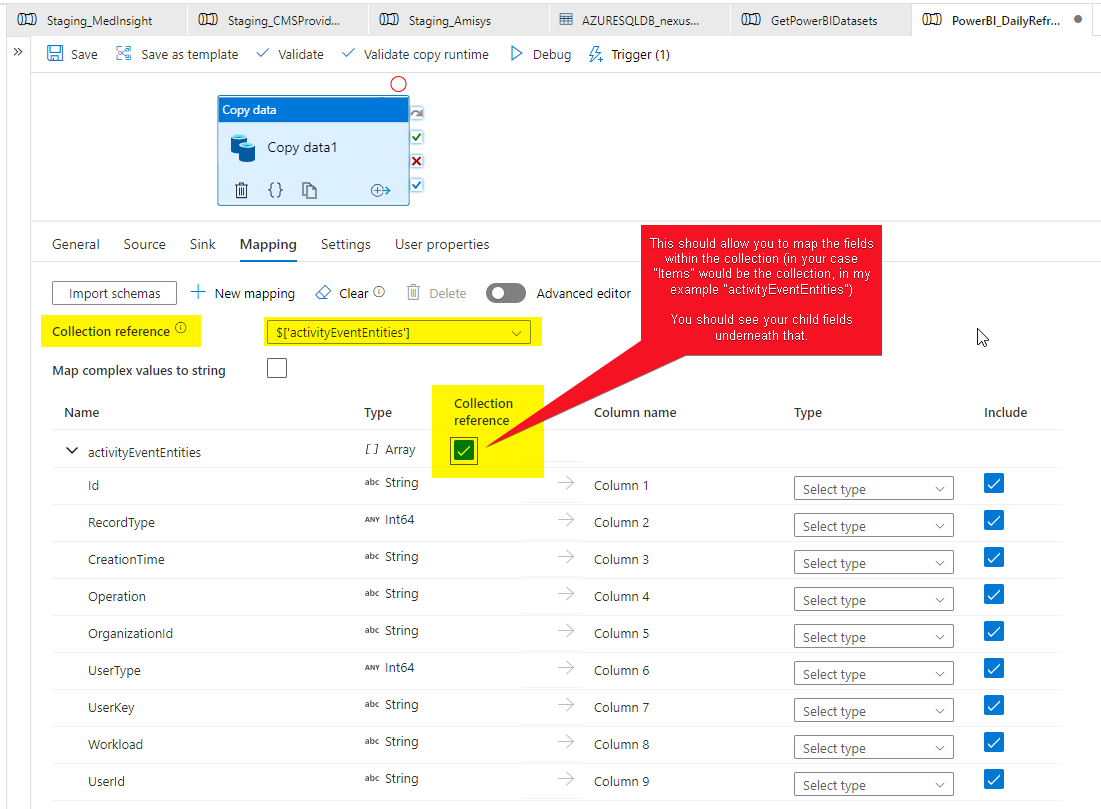

Since you only have a one level array, this shouldn't be hard. You will need to use a collection reference, and in your example that would be "items".

Unfortunately, ADF is very limited on the complexity of collections/arrays in JSON and can only handle a single collection reference. I have found it personally easier to do a WEB API call instead of a copy activity and push that JSON text to a Stored Procedure.

From there you can pretty easily shred the JSON however you would like. Here is an example of what a pretty simple Stored Proc would look like that takes the JSON Output:

ALTER Procedure [Log].[PopulatePowerBIGroups] (

@json NVARCHAR(MAX))

as

select @json j

;

insert into Log.PowerBI_GroupsTmp

SELECT

JSON_VALUE ( j.[value], '$.id' ) AS groupID,

JSON_VALUE ( j.[value], '$.isReadOnly' ) AS isReadOnly,

JSON_VALUE ( j.[value], '$.isOnDedicatedCapacity' ) AS isOnDedicatedCapacity,

JSON_VALUE ( j.[value], '$.capacityId' ) AS capacityId,

JSON_VALUE ( j.[value], '$.capacityMigrationStatus' ) AS capacityMigrationStatus,

JSON_VALUE ( j.[value], '$.description' ) AS description,

JSON_VALUE ( j.[value], '$.type' ) AS type,

JSON_VALUE ( j.[value], '$.state' ) AS state,

JSON_VALUE ( j.[value], '$.name' ) AS groupName,

Stag.CSTReturnDate(getdate()) as ETL_UpdateTimestamp

FROM OPENJSON( @json ) j

where JSON_VALUE ( j.[value], '$.name' ) not like 'DUMMY%'

and JSON_VALUE ( j.[value], '$.state' ) = 'Active'

But of course for very simple web output, a Copy Data activity will work just fine!