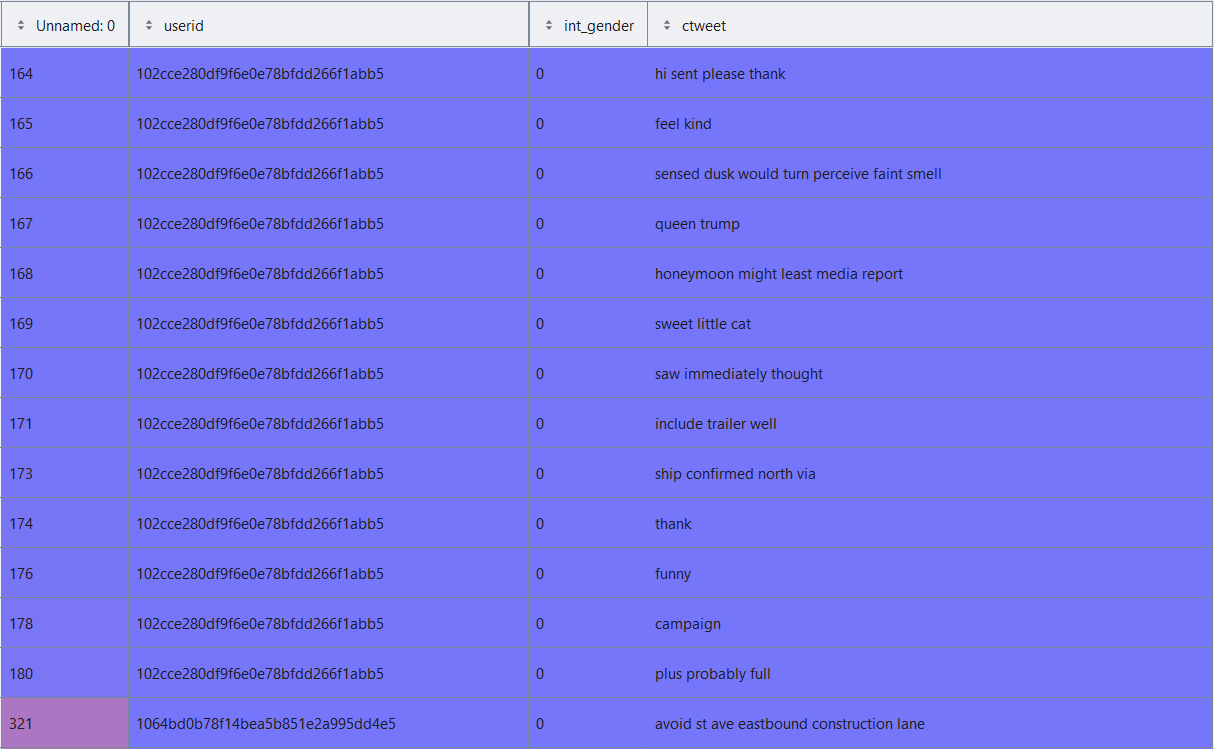

I have a data frame that contains "userid", "gender" and "tweet", each user has 100 tweets:

link to demo dataset: https://drive.google.com/file/d/12FAek_k-8ofHCoR24IxhqkiGa3efrvpA/view?usp=sharing

how can i merge each 5 tweets of user in a new row (each user has 100 tweets so in new data set there will be 20 rows for each user) and keep their user id and gender. so far i managed to group tweets but i need to have user id and gender as well.

dfMerged = df.groupby(df.index // 5)['ctweet'].agg(' '.join).to_frame()

CodePudding user response:

Short answer

data = pd.DataFrame([[1,1,'Hi'],[1,1,'my name is'], [1,1,'Hal'],[1,1,'my name is'], [1,1,'Hal'],[2,0,'Ich bin'], [2,0,'ein kartoffeln'],[2,0,'!'], [2,0,'ein kartoffeln'],[2,0,'!'],[1,1,'my name is'], [1,1,'Obama'],[1,1,'president of USA'], [1,1,'Obama'],[1,1,'president of USA'],[2,0,'Hi'],[2,0,'my name is'], [2,0,'James webb'],[2,0,'my name is'], [2,0,'James webb'],[1,1,'Ich bin'], [1,1,'ein potatoe'], [1,1,'hello human'],[1,1,'ein potatoe'], [1,1,'hello human']])

data.columns = ['id', 'gender', 'tweet']

n = 5

block = int(round(len(data)/n, 0))

data['block'] = np.repeat(range(1, block 1), n)

data_block = data.groupby(['id','gender', 'block'])['tweet'].agg(lambda x: '-'.join(x.dropna())).reset_index()

data_block

Long answer : Full explanations

Creating fake data :

data = pd.DataFrame([

[1,1,'Hi'],[1,1,'my name is'], [1,1,'Hal'],

[2,0,'Ich bin'], [2,0,'ein kartoffeln'],[2,0,'!'],

[1,1,'my name is'], [1,1,'Obama'],[1,1,'president of USA'],

[2,0,'Hi'],[2,0,'my name is'], [2,0,'James webb'],

[1,1,'Ich bin'], [1,1,'ein potatoe'], [1,1,'hello human']

])

data.columns = ['id', 'gender', 'tweet']

Warning : Assuming there is the right number of repetions per id and your data are sorted in the right way.

==> If not you can sort data by user id and isolate it as independent dataframe while truncating it if there is not the right number of row (multiple of what you want). Then you merge it again as one. Care of the context of your analysis/work of course :)

I defined the Number of block of repeated value you want - In your case groups of n=5. I used n=3 for the example.

n = 3

block = int(round(len(data)/n, 0))

data['block'] = np.repeat(range(1, block 1), n)

data_block = data.groupby(['id','gender', 'block'])['tweet'].agg(lambda x: '-'.join(x.dropna())).reset_index()

It gives :

>>> data_block

id gender block tweet

0 1 1 1 Hi-my name is-Hal

1 1 1 3 my name is-Obama-president of USA

2 1 1 5 Ich bin-ein potatoe-hello human

3 2 0 2 Ich bin-ein kartoffeln-!

4 2 0 4 Hi-my name is-James webb

Is it better ?

Straight example with group of 5 it works also well :

data = pd.DataFrame([

[1,1,'Hi'],[1,1,'my name is'], [1,1,'Hal'],[1,1,'my name is'], [1,1,'Hal'],

[2,0,'Ich bin'], [2,0,'ein kartoffeln'],[2,0,'!'], [2,0,'ein kartoffeln'],[2,0,'!'],

[1,1,'my name is'], [1,1,'Obama'],[1,1,'president of USA'], [1,1,'Obama'],[1,1,'president of USA'],

[2,0,'Hi'],[2,0,'my name is'], [2,0,'James webb'],[2,0,'my name is'], [2,0,'James webb'],

[1,1,'Ich bin'], [1,1,'ein potatoe'], [1,1,'hello human'], [1,1,'ein potatoe'], [1,1,'hello human']

])

data.columns = ['id', 'gender', 'tweet']

n = 5

block = int(round(len(data)/n, 0))

data['block'] = np.repeat(range(1, block 1), n)

data_block = data.groupby(['id','gender', 'block'])['tweet'].agg(lambda x: '-'.join(x.dropna())).reset_index()

data_block

>>> data_block

id gender block tweet

0 1 1 1 Hi-my name is-Hal-my name is-Hal

1 1 1 3 my name is-Obama-president of USA-Obama-presid...

2 1 1 5 Ich bin-ein potatoe-hello human-ein potatoe-he...

3 2 0 2 Ich bin-ein kartoffeln-!-ein kartoffeln-!

4 2 0 4 Hi-my name is-James webb-my name is-James webb

CodePudding user response:

you can simply use the .iloc (“location by integer”) attribute: & pass the upper & lower limits by incrementing 5 times in our case

import pandas as pd

df = pd.read_csv(r"matin zarei.csv")

pd.set_option('display.max_rows', 500)

lens =(len(df))

up=0

low=5

df_res=[]

#print(lens)

for i in range(round(lens/5)):

df_new = df.iloc[up:low].groupby(['userid','int_gender'])['ctweet'].agg(lambda x: ','.join(x.dropna())).reset_index()

#print(df_new)

#print('\n')

up= up 5

low = low 5

if df.empty:

pass

else:

df_res.append(df_new)

df_res=pd.concat(df_res)

pd.set_option('display.max_columns', None)

pd.set_option('display.expand_frame_repr', False)

pd.set_option('max_colwidth', -1)

print(df_res)

output:

userid int_gender ctweet

0 102cce280df9f6e0e78bfdd266f1abb5 0 hi sent please thank,feel kind,sensed dusk would turn perceive faint smell,queen trump,honeymoon might least media report

0 102cce280df9f6e0e78bfdd266f1abb5 0 sweet little cat,saw immediately thought,include trailer well,ship confirmed north via,thank

0 102cce280df9f6e0e78bfdd266f1abb5 0 funny,campaign,plus probably full

1 1064bd0b78f14bea5b851e2a995dd4e5 0 avoid st ave eastbound construction lane,way kick party notch

0 1064bd0b78f14bea5b851e2a995dd4e5 0 always writing good turn,tell much love,eat go,one around,great

0 1064bd0b78f14bea5b851e2a995dd4e5 0 police trying save black man lying back air shot,happy,forward,earth game east get,looking forward taking stage

0 1064bd0b78f14bea5b851e2a995dd4e5 0 outstanding highlight every thanks,full team little street making white rock

1 1074ea46e0f2d49b18b4d77b9aa8c9b 1 dope always cool see go,feel like back high school,anything comes sure send

0 1074ea46e0f2d49b18b4d77b9aa8c9b 1 bad tread cautiously,actually lot backlash industry came,yea cause liability,precious,pussy delete tweet

0 1074ea46e0f2d49b18b4d77b9aa8c9b 1 hey still want,famous ben,event back,exactly,starcraft

0 1074ea46e0f2d49b18b4d77b9aa8c9b 1 low point event peak,remember cod hit still huge,yes would grammatically correct response question,actually bought