The statement is:

select a.*, b.order_item_id, b.service_offer_id

from TEM_KD_XY1 a

join CRMDB.L_order_item b

on a.offer_comp_inst_id = b.order_item_obj_id

where b.finish_time > sysdate - 365

and b.lan_id = 3

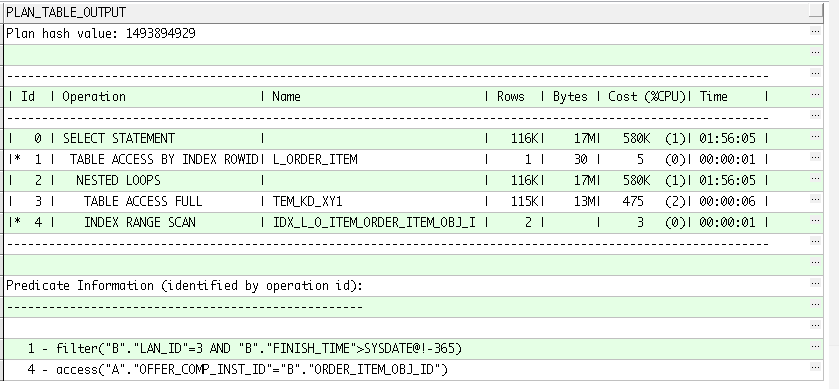

The execution plan is:

In addition, both fields in the join condition a.offer_comp_inst_id = b.order_item_obj_id are indexed. Could everybody help me analyze this? Thank you very much!

CodePudding user response:

How much data is in your two tables? Also, generate a trace file and take a look.
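To answer the data-volume question, here is a minimal sketch of the counts worth posting. It assumes the garbled column in the WHERE clause is `finish_time` and that your account can query both tables; the aliases `tem_rows`, `item_rows` and `filtered_rows` are only illustrative.

```sql
-- Total rows in each table of the join
select count(*) as tem_rows from TEM_KD_XY1;
select count(*) as item_rows from CRMDB.L_order_item;

-- Rows of the second table that survive the WHERE filters
-- (finish_time is an assumed column name)
select count(*) as filtered_rows
  from CRMDB.L_order_item b
 where b.finish_time > sysdate - 365
   and b.lan_id = 3;
```

Comparing `filtered_rows` with `item_rows` gives a rough idea of how selective the filters are, which is one of the inputs the optimizer weighs when choosing between an index and a full scan.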

CodePudding user response:

Quoting ghx287524027's reply on the 1st floor: How much data is in your two tables? Also, generate a trace file and take a look.

CodePudding user response:

Suggestion: alter session set events '10053 trace name context forever, level 1'; -- enables the 10053 event

CodePudding user response:
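Following up on the 10053 suggestion above, a minimal sketch of a full trace session might look like this. The identifier `kd_xy1_10053` is a made-up label, `finish_time` is an assumed column name, and the `v$diag_info` lookup assumes Oracle 11g or later and the privilege to read that view.

```sql
-- Optional: tag the trace file so it is easy to find (label is hypothetical)
alter session set tracefile_identifier = 'kd_xy1_10053';

-- Turn on the optimizer (10053) trace for this session
alter session set events '10053 trace name context forever, level 1';

-- The trace is written when the statement is optimized (hard parse),
-- so explaining the statement is enough to produce it
explain plan for
select a.*, b.order_item_id, b.service_offer_id
  from TEM_KD_XY1 a
  join CRMDB.L_order_item b
    on a.offer_comp_inst_id = b.order_item_obj_id
 where b.finish_time > sysdate - 365   -- finish_time is an assumed column name
   and b.lan_id = 3;

-- Turn the trace off again
alter session set events '10053 trace name context off';

-- Locate the trace file for this session (11g and later)
select value from v$diag_info where name = 'Default Trace File';
```

The resulting file lists the costs the optimizer computed for each access path, which should show why the index on offer_comp_inst_id was not chosen.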

Table TEM_KD_XY1 (alias a) is being read with a full table scan. Is there an index on its offer_comp_inst_id column?

CodePudding user response:

You said a.offer_comp_inst_id is indexed, but the execution plan does not use that index. Was the TEM_KD_XY1 index analyzed afterwards? And how often do values repeat in that column?

CodePudding user response:
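To check the two points raised above (whether the statistics are current and how repetitive the column is), a rough sketch could be the following. It assumes TEM_KD_XY1 lives in the current schema; if it does not, the `all_tables` / `all_indexes` views with an owner filter would be the equivalent.

```sql
-- When were the table and its indexes last analyzed?
select table_name, num_rows, last_analyzed
  from user_tables
 where table_name = 'TEM_KD_XY1';

select index_name, distinct_keys, num_rows, last_analyzed
  from user_indexes
 where table_name = 'TEM_KD_XY1';

-- How repetitive is the join column?
select count(*) as total_rows,
       count(distinct offer_comp_inst_id) as distinct_values
  from TEM_KD_XY1;

-- Refresh statistics on the table and, via cascade, its indexes
begin
  dbms_stats.gather_table_stats(
    ownname => user,
    tabname => 'TEM_KD_XY1',
    cascade => true);
end;
/
```

If `last_analyzed` is empty or older than the last big load, gathering statistics and re-checking the plan is a cheap first step. A `distinct_values` figure far below `total_rows` would suggest the index is not very selective.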

Quoting zengjc's reply on the 5th floor: You said a.offer_comp_inst_id is indexed, but the execution plan does not use that index. Was the TEM_KD_XY1 index analyzed afterwards? And how often do values repeat in that column?

CodePudding user response:

Quoting zengjc's reply on the 6th floor, which quotes zengjc's reply on the 5th floor.

CodePudding user response:

How long does the query take now? And how large is the result it returns?

CodePudding user response:

You are also returning quite a lot of data.

CodePudding user response:

Quoting jycjyc's reply on the 8th floor: How long does the query take now? And how large is the result it returns?

CodePudding user response: