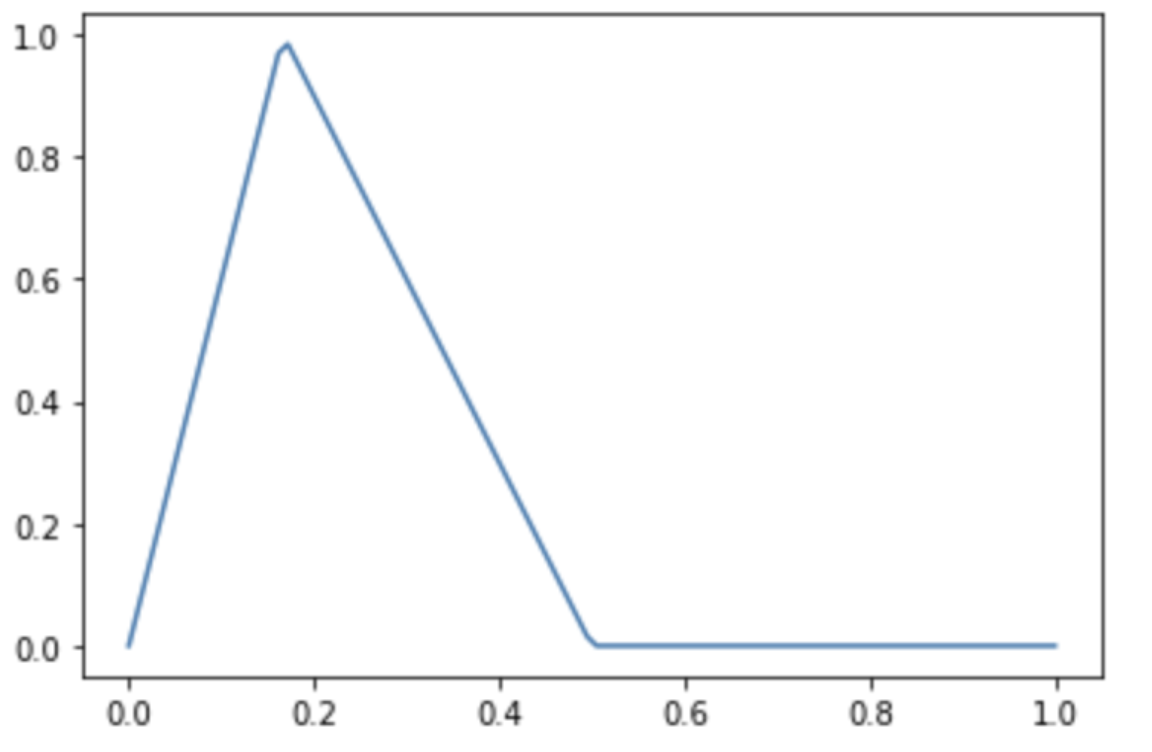

I want to draw a piecewise function. There is the code I implemented in Python.

import numpy as np

import matplotlib.pyplot as plt

def fun(x):

if 0 <= x < 1/6:

return 6*x

elif 1/6 <= x < 1/2:

return 3/2 - 3*x

else:

return 0

return x

vfun = np.vectorize(fun)

x = np.linspace(0, 1, 100)

y = vfun(x)

plt.plot(x, y, '-')

plt.show()

But what if instead of using the numpy.vecrorized, I use the numpy.piecewise or call the fun(x) directly:

x = np.linspace(0, 1, 100)

y = fun(x)

x = np.linspace(0, 1, 100)

y = np.piecewise(x, [x < 1/6, 1/6 <= x < 1/2, x >= 1/2], [lambda x: 6*x, lambda x: 3/2 - 3*x, lambda x: 0])

They all make the same error:

ValueError: The truth value of an array with more than one element is ambiguous. Use a.any() or a.all()

I want to figure out why we need vectorized functions to express piecewise functions exactly. What does it do to the function?

CodePudding user response:

The issue is that x < value where x is a NumPy array produces a boolean array. To combine multiple boolean arrays you would need a boolean operator. To get the same meaning as value1 < x < value2 you would need a bitwise-and operator &: (value1 < x) & (x < value2).

Hence, you can re-write fun() with piecewise like:

def fun_piecewise(

arr,

pieces,

funcs=(lambda x: 0, lambda x: 6 * x, lambda x: 3 / 2 - 3 * x, lambda x: 0)

):

return np.piecewise(x, pieces, funcs)

fun_piecewise(x, (x < 0, (0 <= x) & (x < 1/6), (1/6 <= x) & (x < 1/2), x >= 1/2))

I would recommend writing your own function using masking more efficiently than what np.piecewise() can:

def fun_np(

arr,

thresholds=(0, 1/6, 1/2),

funcs=(lambda x: 0, lambda x: 6 * x, lambda x: 3 / 2 - 3 * x, lambda x: 0)

):

masks = np.array([arr < threshold for threshold in thresholds])

masks = [masks[0]] [x for x in masks[1:] & ~masks[:-1]] [~masks[-1]]

result = np.empty_like(arr)

for mask, func in zip(masks, funcs):

result[mask] = func(arr[mask])

return result

The idea is that the masks are combined pairwise (except the first and the last) so there is no need to recompute them twice.

Note that there is one function more than thresholds, because of the edge/bin effect (to define n bins one needs n 1 edges) combined with the fact that the -∞ and ∞ edges are implicit.

This is typically faster than both vfun() and np.piecewise().

Memory-wise vfun() is more efficient:

x = np.linspace(-1, 1, 1000)

%timeit vfun(x)

# 284 µs ± 10.3 µs per loop (mean ± std. dev. of 7 runs, 1000 loops each)

%timeit fun_np(x)

# 36.6 µs ± 2.04 µs per loop (mean ± std. dev. of 7 runs, 10000 loops each)

%timeit fun_piecewise(x, (x < 0, (0 <= x) & (x < 1/6), (1/6 <= x) & (x < 1/2), x >= 1/2))

# 56.2 µs ± 2.04 µs per loop (mean ± std. dev. of 7 runs, 10000 loops each)

%timeit np.array([fun(i) for i in x])

# 695 µs ± 10.3 µs per loop (mean ± std. dev. of 7 runs, 1000 loops each)